This series shows you the various ways you can use Python within Snowflake.

Snowpark for Python is the name for the new Python functionality integration that Snowflake has recently developed. At the Snowflake Summit in June 2022, Snowpark for Python was officially released into Public Preview, which means anybody is able to get started using Python in their own Snowflake environment.

I would understand if you wanted to skip this post and cut directly to the Snowflake’s monolithic documentation page; however, I would strongly recommend reading through this post first, along with my other definitive guides for Python and Snowflake, as these posts will give you an introduction to both the possibilities and limitations of this new functionality whilst walking you through how to get going yourself. In this post, we will walk through the steps you can take to create your own Python User Defined Function, or Python UDF for short, by connecting to Snowflake as part of an external Python script using the Snowpark for Python package.

If you are not interested in connecting to Snowflake and instead wish to simply dive into creating Python UDFs and Stored Procedures from within the Snowflake User Interface, then I would recommend these posts instead:

- A Definitive Guide to Python UDFs in the Snowflake UI

- A Definitive Guide to Python Stored Procedures in the Snowflake UI

If you are interested in finding out about Python Stored Procedures instead of UDFs, be sure to check out my matching “Definitive Guide to Creating Python Stored Procedures in Snowflake using Snowpark,” which is coming soon!

Why Define and Leverage a Python UDF Through Snowpark Instead of the Snowflake UI?

As is made clear in my previous blog posts, you can create Python UDFs directly within the Snowflake UI instead of connecting with Snowpark. However, there are a few benefits to using Snowpark instead:

- Use your IDE of choice to develop your Python functions instead of relying on the Snowflake UI, especially when facilitating access to users who may not be familiar with Snowflake but are familiar with Python

- More freedom to leverage Git and other tools to maintain and deploy Python functions, especially if the same functions are used elsewhere in your technology stack

- Directly create and leverage Python functions from within wider Python scripts outside of Snowflake whilst still pushing the compute down to Snowflake when calling functions

Warning Regarding Staged Files and Libraries

It is important to note at this time that UDFs do not have access to the “outside world.” This is a security restriction put in place by Snowflake intentionally. If you wish to create a UDF that accesses the outside world, for example to hit an API using the requests library, then it is recommended to use external functions instead.

Requirements

This post focuses on leveraging Snowflake Snowpark Sessions from the Snowpark for Python package to connect to Snowflake and create Python UDFs. If you haven’t already, I would recommend reading my Definitive Guide to Snowflake Sessions with Snowpark for Python as this will give you a strong introduction into setting up a Snowpark environment to connect to Snowflake and provide you with a few shortcuts in the form of code snippets.

Throughout this blog post, I will always use the variable snowpark_session to refer to a Snowflake Snowpark Session that we have established using the above-mentioned post.

How to Create Your Own Python UDF from a Snowflake Snowpark Session

Snowflake have integrated the ability to create Python UDFs directly into the standard commands that can be executed for a Snowflake Snowpark Session object. Here is a sample Python code to demonstrate how to create your own UDF using Snowpark.

This could easily be overwhelming at this stage so we will follow this template’s description with some examples, starting off simple and getting more complex as we go.

This template includes lines regarding optional packages and imports that will be discussed after our simple examples

# Define Python function locally

def <name of main Python function>(<arguments>):

# Python code to determine the main

# functionality of the UDF.

# This ends with a return clause:

return <function output>

# Import data type for returned value

from snowflake.snowpark.types import <specific Snowpark DataType object>

# Optional: Import additional data types

from snowflake.snowpark.types import <specific Snowpark DataType object>

# Optional: Import additional packages or files

snowpark_session.add_packages('<list of required packages natively available in Snowflake (i.e. included in Anaconda Snowpark channel)>')

snowpark_session.add_import('<path\\to\\local\\directory\\or\\file>')

# Upload UDF to Snowflake

snowpark_session.udf.register(

func = <name of main Python function>

, return_type = <specific Snowpark DataType object for returned value>

, input_types = <list of input DataType() objects for input parameters>

, is_permanent = True

, name = '<UDF name>'

, replace = True

, stage_location = '@<UDF stage name>'

)

The main elements to understand here are:

- On rows 2-6, we define the main Python function that will be leveraged by our UDF. This function can leverage other functions that you have defined in your script or imported from elsewhere; however, the UDF can only be assigned a single main Python function.

- On rows 19-27, we leverage the

udf.register()method of the Snowflake Snowpark Session object to create a new UDF in Snowflake. - On row 20, we determine the Python function that will be leveraged by our UDF. The name here would match the function name on row 2.

- On row 24, we determine the name of the UDF within Snowflake. You can pass a fully qualified name here if you prefer; otherwise, the UDF will be created in the same namespace as your Snowflake Snowpark Session object.

- On row 26, we determine the name of the Snowflake stage to which the files for our UDF will be uploaded.

- On row 9, we import the specific Snowpark DataType object that will be used for the value returned by the UDF. This is discussed more in a note below.

- On row 21, we determine the specific Snowpark DataType object that will be used for the value returned by the UDF. This would match that which we imported on row 9.

- On row 10, we have an optional import for any additional specific Snowpark DataType objects that will be used for the inputs to our UDF. If required, there may be more than one additional line here.

- On row 22, we determine the list of specific Snowpark DataType object that will be used for the input arguments for the UDF. All of these must be included in the imports on rows 9 and 12.

- On rows 15 and 16, we provide optional rows to add additional packages and imports to our UDF. This will be discussed after our simple examples.

- On row 23, we determine that the UDF we create will not be temporary. A temporary UDF will only exist within our specific Snowflake Snowpark Session object.

- On row 25, we determine whether or not to overwrite an existing UDF with the same name. If this is set to

Falseand a UDF already exists, an error will be returned.

Snowpark DataType Objects

Snowpark must leverage compatible data types for inputs and returned value when creating both UDFs and Stored Procedures. You can find the full list of types in Snowflake’s documentation. The examples provided in this blog leverage several different data types, which should give you a reasonable idea for how to configure your own use-cases.

Simple Examples

Let’s break this down and provide a few simple examples to explain what is happening more clearly.

Please note that these examples are deliberately minimalistic in their design and thus do not include any error handling or similar concepts. Best practice would be to prepare functions with error handling capabilities to prevent unnecessary execution and provide useful error outputs to the end user.

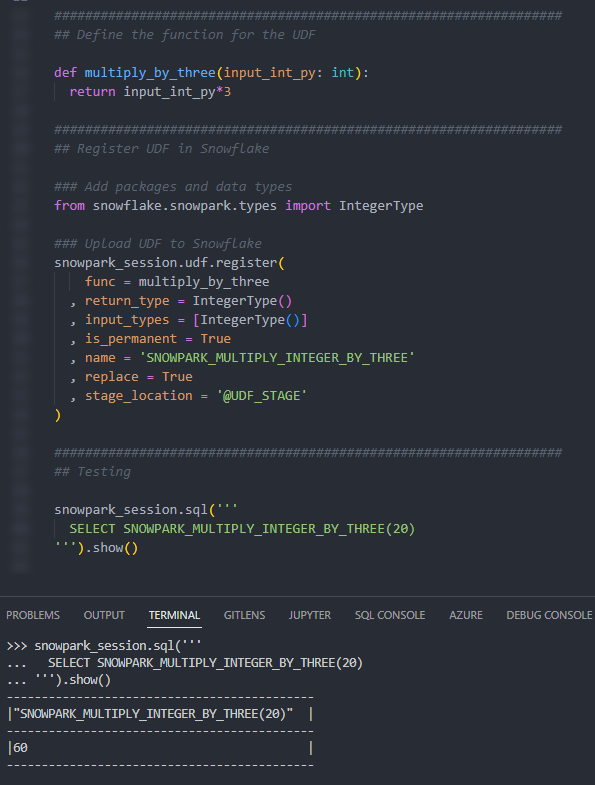

Multiply Input Integer by Three

Our first example is a very simple function that takes an integer and multiples it by three. First, let’s see the code:

##################################################################

## Define the function for the UDF

def multiply_by_three(input_int_py: int):

return input_int_py*3

##################################################################

## Register UDF in Snowflake

### Add packages and data types

from snowflake.snowpark.types import IntegerType

### Upload UDF to Snowflake

snowpark_session.udf.register(

func = multiply_by_three

, return_type = IntegerType()

, input_types = [IntegerType()]

, is_permanent = True

, name = 'SNOWPARK_MULTIPLY_INTEGER_BY_THREE'

, replace = True

, stage_location = '@UDF_STAGE'

)

There are a few things to break down here to confirm our understanding:

- On rows 4 and 5, we have defined a very simple function in Python which multiplies an input number by three.

- The name of our function on row 15 matches that of our Python function defined on row 4.

- On row 16, we can see that our function will return an integer as it uses

IntegerType(), which we can also see has been imported on row 11. - On row 17, we can see that the input arguments for our function is also an integer using

IntegerType(). This is already imported on row 11.

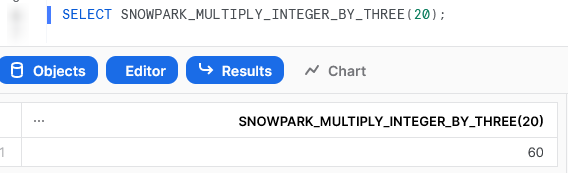

If we execute this code as part of a Python script, we can then call the function using the snowpark_session.sql().show() method to see the result.

We can also call the function from within a Snowflake worksheet in the same way that we would any other function to see the result.

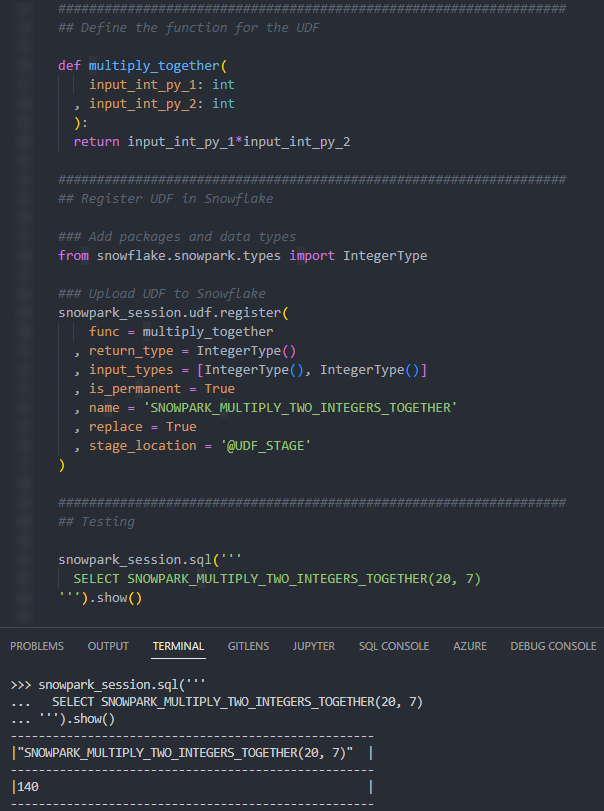

Multiply Two Input Integers Together

Our second example is another simple function, this time taking two input integers and multiplying them together. Let’s see the code:

##################################################################

## Define the function for the UDF

def multiply_together(

input_int_py_1: int

, input_int_py_2: int

):

return input_int_py_1*input_int_py_2

##################################################################

## Register UDF in Snowflake

### Add packages and data types

from snowflake.snowpark.types import IntegerType

### Upload UDF to Snowflake

snowpark_session.udf.register(

func = multiply_together

, return_type = IntegerType()

, input_types = [IntegerType(), IntegerType()]

, is_permanent = True

, name = 'SNOWPARK_MULTIPLY_TWO_INTEGERS_TOGETHER'

, replace = True

, stage_location = '@UDF_STAGE'

)

There are a lot of similarities between this and our previous function; however, this time, we have multiple inputs.

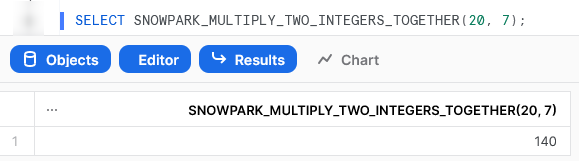

Again, if we execute this code as part of a Python script, we can then call the function using the snowpark_session.sql().show() method to see the result.

Again, we can also call the function from within a Snowflake worksheet in the same way that we would any other function to see the result.

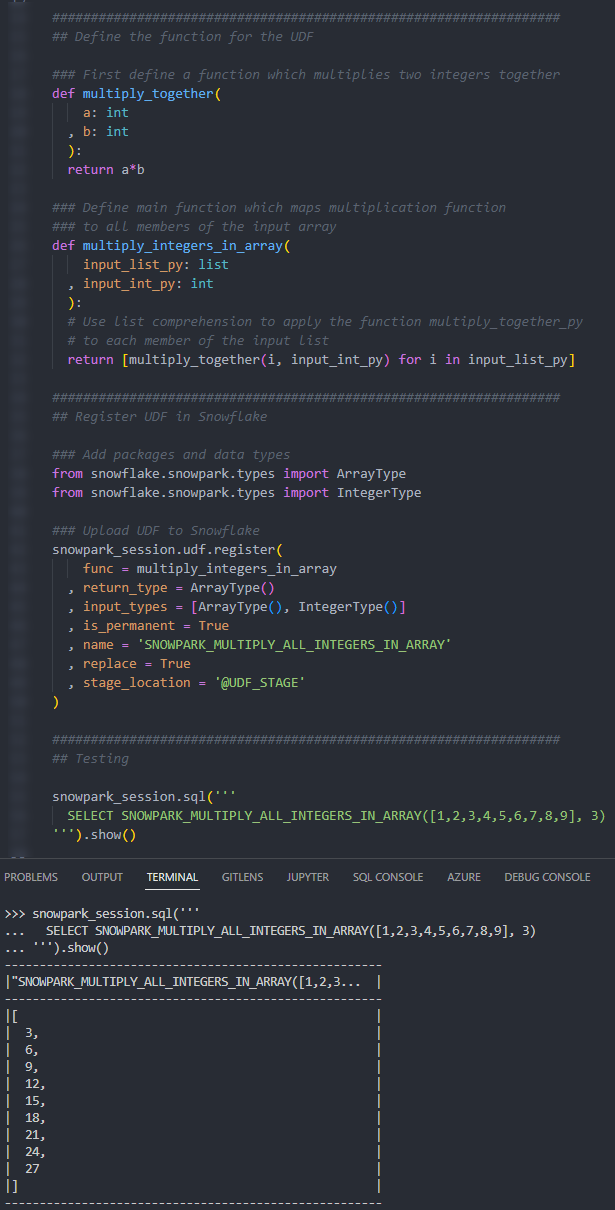

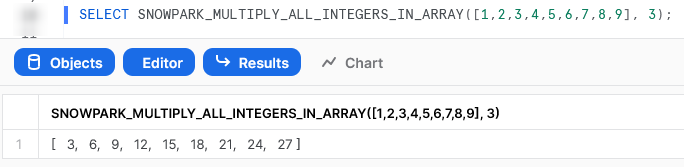

Multiply All Integers in an Input Array by Another Integer

The last of our simple examples takes things one step further so that we can introduce an additional Python function in our script and demonstrate the power of list comprehension. This UDF will accept an array of integers as an input along with a second input of a single integer. The function will multiply all members of the array by that second integer.

Let’s see the code:

##################################################################

## Define the function for the UDF

### First define a function which multiplies two integers together

def multiply_together(

a: int

, b: int

):

return a*b

### Define main function which maps multiplication function

### to all members of the input array

def multiply_integers_in_array(

input_list_py: list

, input_int_py: int

):

# Use list comprehension to apply the function multiply_together_py

# to each member of the input list

return [multiply_together(i, input_int_py) for i in input_list_py]

##################################################################

## Register UDF in Snowflake

### Add packages and data types

from snowflake.snowpark.types import ArrayType

from snowflake.snowpark.types import IntegerType

### Upload UDF to Snowflake

snowpark_session.udf.register(

func = multiply_integers_in_array

, return_type = ArrayType()

, input_types = [ArrayType(), IntegerType()]

, is_permanent = True

, name = 'SNOWPARK_MULTIPLY_ALL_INTEGERS_IN_ARRAY'

, replace = True

, stage_location = '@UDF_STAGE'

)

There are several things to note here:

- On row 31, we can see that we are now expecting an array as an output instead of an integer. To support this, we leverage the

ArrayType(), which is imported on row 25. - On row 26, we must still import the

IntegerType()as this is leveraged in our list of inputs on row 32. - On rows 5-9, we are defining a second Python function called

multiply_together, which is leveraged by our main function that we define on rows 13-19. This is not strictly needed as we could use a lambda function; however, I have included it to demonstrate the functionality. - On row 19, we use the concept of list comprehension to apply our

multiply_togetherfunction to every member of our input array

Again, if we execute this code as part of a Python script, we can then call the function using the snowpark_session.sql().show() method to see the result.

Again, we can also call the function from within a Snowflake worksheet, in the same way that we would any other function, to see the result.

Importing Additional Libraries from Anaconda

Even though our examples so far have all been fairly basic, we can already start to see how powerful Python UDFs could be. Not only are we able to receive inputs and use them to produce outputs, we can also define our own functionality within the Python script and perform more complex logical steps when executing our function.

What about if we wish to use Python libraries that are not part of the standard inbuilt set? For example, what if we wish to leverage Pandas, PyTorch or a wide range of other popular libaries? The good news here is that Snowflake have partnered with Anaconda, and you already have everything you need to leverage any of the libraries listed in Anaconda’s Snowflake channel.

If you wish to leverage any libraries that are not included in Anaconda’s Snowflake channel, including any libraries you have developed in-house, then you will need to import them separately. This will be discussed in the next section.

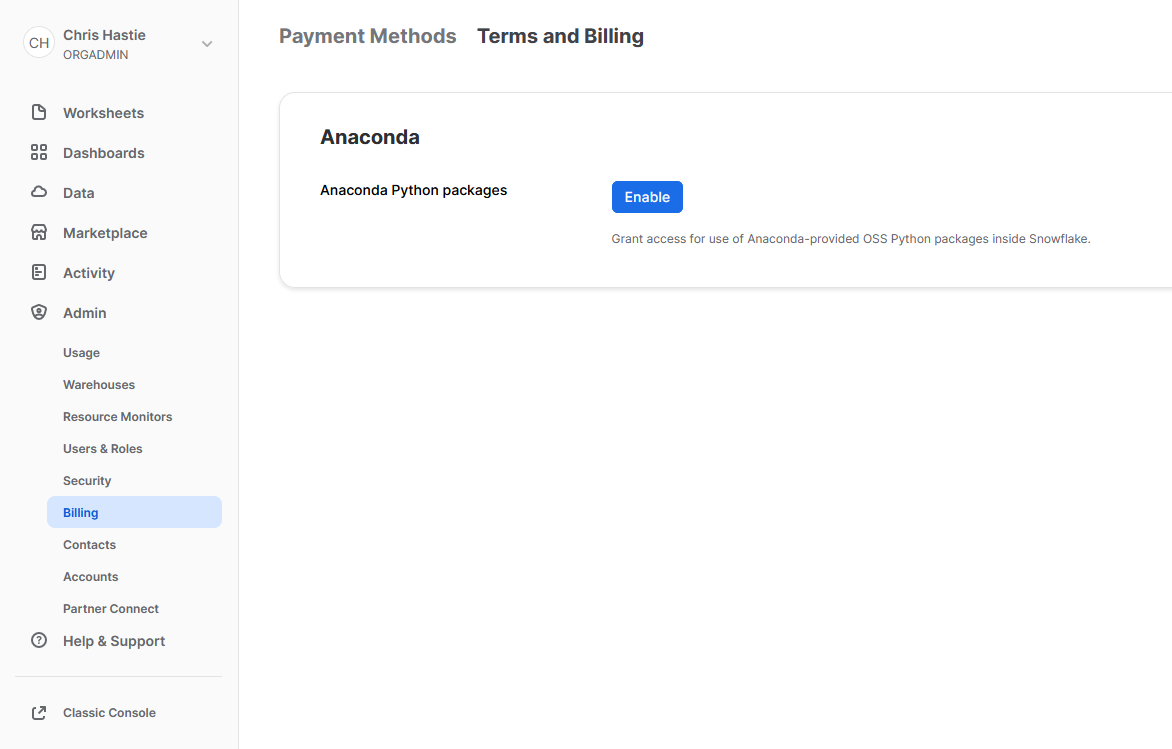

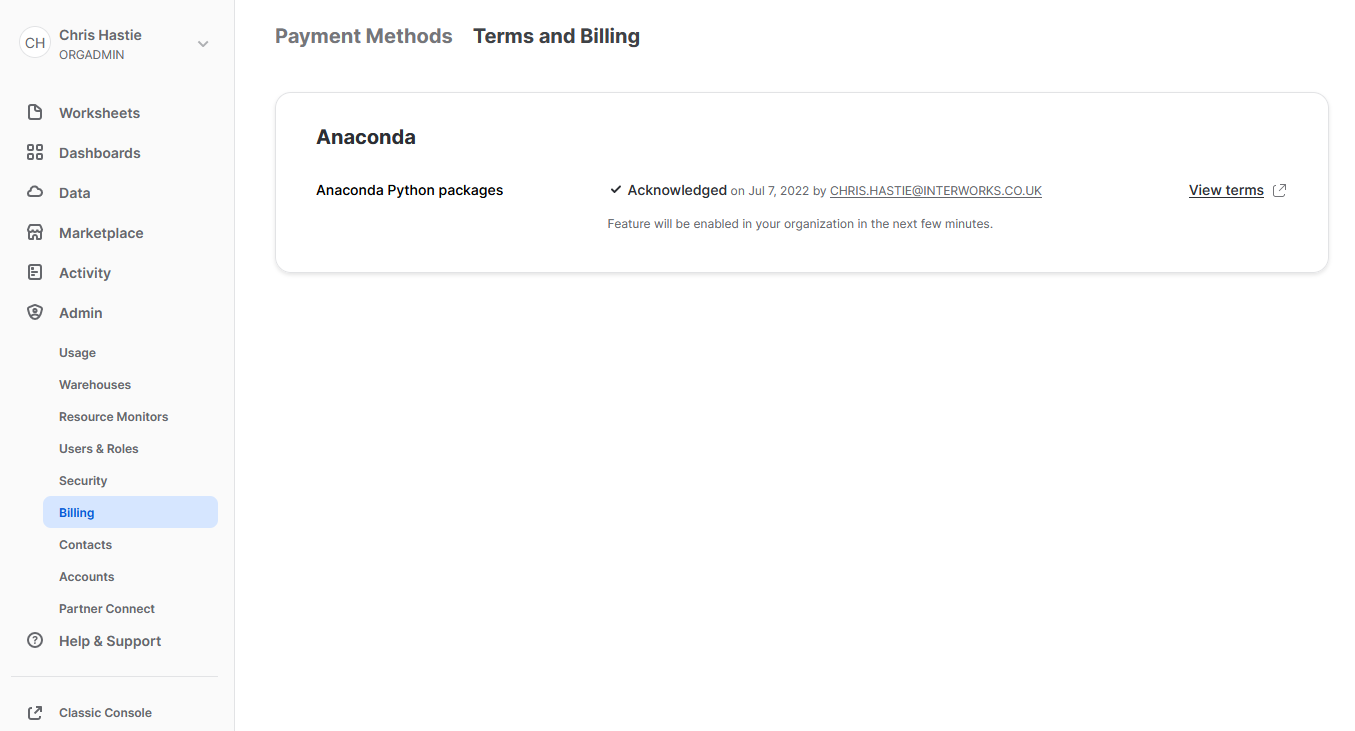

Accepting the Terms of Usage to Enable Third-Party Packages

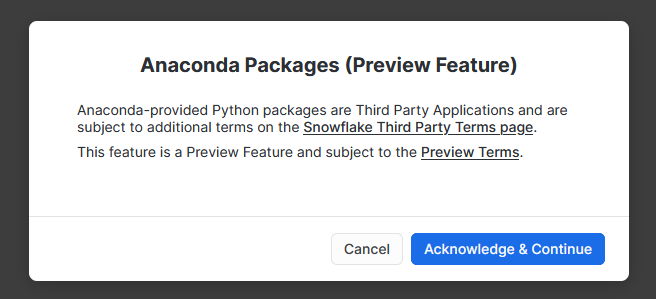

To leverage third-party packages from Anaconda within Snowflake, an ORGADMIN must first accept the third-party terms of usage. I’ll walk through the process now; however, more details can be found here for those who desire it. This step must only be completed once for the entire organisation.

- Using the ORGADMIN role in the SnowSight UI, navigate to Admin > Billing to accept the third party terms of usage

- Confirm acknowledgement

- The screen will then update to reflect the accepted terms

Examples Using Supported Third-Party Libraries

Now that we have enabled third-party libraries for our organisation, we can show some more interesting examples.

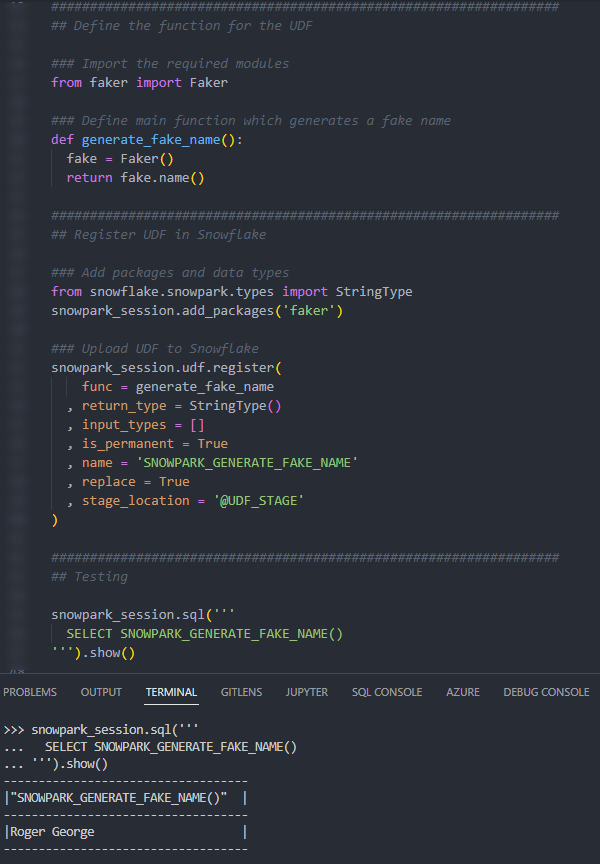

Generate a Random Name

This function imports the faker library to generate fake data. This is a useful tool for creating dummy datasets. Let’s see the code:

##################################################################

## Define the function for the UDF

### Import the required modules

from faker import Faker

### Define main function which generates a fake name

def generate_fake_name():

fake = Faker()

return fake.name()

##################################################################

## Register UDF in Snowflake

### Add packages and data types

from snowflake.snowpark.types import StringType

snowpark_session.add_packages('faker')

### Upload UDF to Snowflake

snowpark_session.udf.register(

func = generate_fake_name

, return_type = StringType()

, input_types = []

, is_permanent = True

, name = 'SNOWPARK_GENERATE_FAKE_NAME'

, replace = True

, stage_location = '@UDF_STAGE'

)

The core difference between this UDF and our previous examples are:

- On row 11, we use standard Python functionality to import the required tools from the

fakerlibrary, which are then leveraged in our Python function. - On row 17, we instruct our Snowflake Snowpark Session to leverage the

fakerlibrary. As this is a supported third-party package, this is all we need to do. - In our input on row 16 and our return type on row 22, we can see an example of using

StringType().

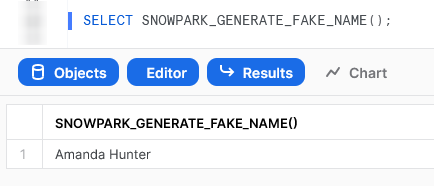

Again, if we execute this code as part of a Python script, we can then call the function using the snowpark_session.sql().show() method to see the result.

Again, we can also call the function from within a Snowflake worksheet in the same way that we would any other function to see the result.

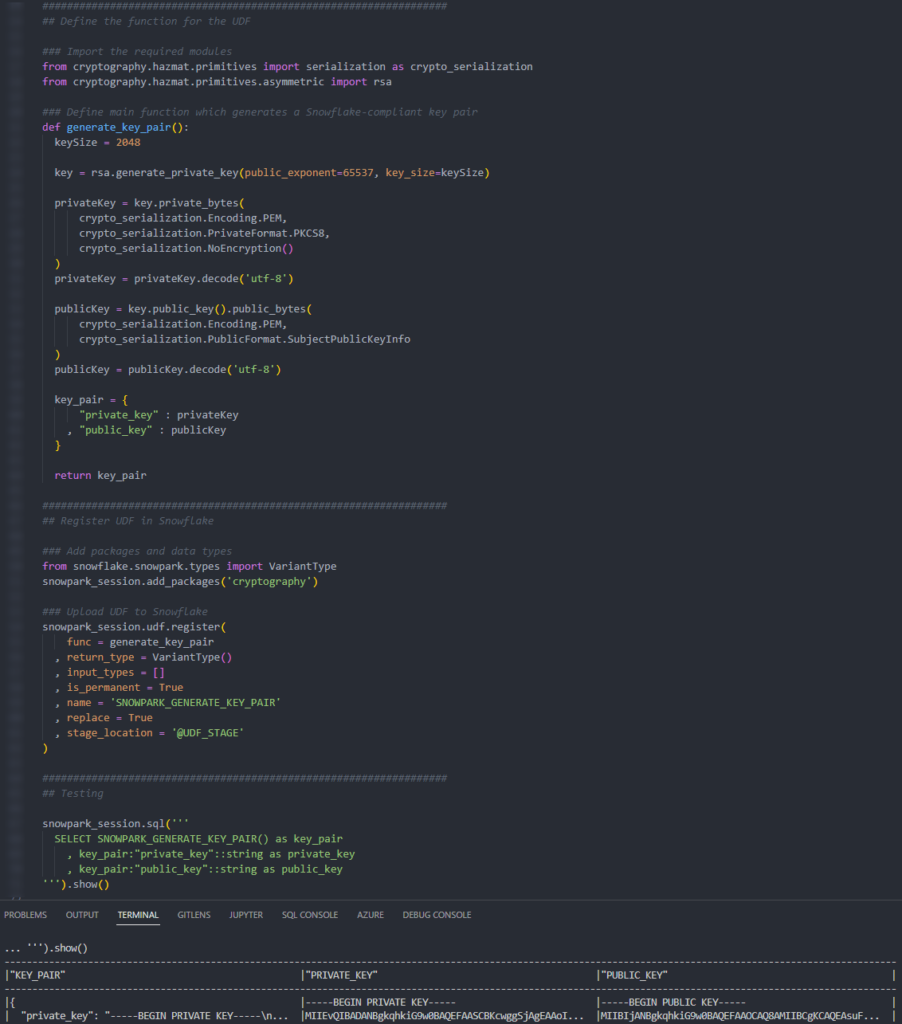

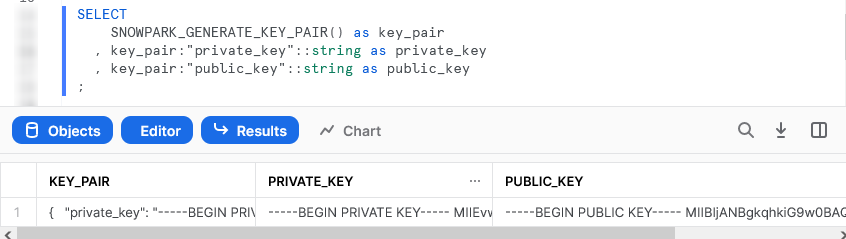

Generate a Snowflake-Compliant Key Pair

This function imports the cryptography library to generate an authentication key pair that is compliant with Snowflake. I have discussed the benefits of this in a previous blog; however, this new Python functionality renders the technical side of this blog redundant!

It is important to note that this specific approach is not best practice as unintended users may be able to access the private key from Snowflake’s history; however, I will resolve this in an upcoming blog post.

Let’s see the code:

##################################################################

## Define the function for the UDF

### Import the required modules

from cryptography.hazmat.primitives import serialization as crypto_serialization

from cryptography.hazmat.primitives.asymmetric import rsa

### Define main function which generates a Snowflake-compliant key pair

def generate_key_pair():

keySize = 2048

key = rsa.generate_private_key(public_exponent=65537, key_size=keySize)

privateKey = key.private_bytes(

crypto_serialization.Encoding.PEM,

crypto_serialization.PrivateFormat.PKCS8,

crypto_serialization.NoEncryption()

)

privateKey = privateKey.decode('utf-8')

publicKey = key.public_key().public_bytes(

crypto_serialization.Encoding.PEM,

crypto_serialization.PublicFormat.SubjectPublicKeyInfo

)

publicKey = publicKey.decode('utf-8')

key_pair = {

"private_key" : privateKey

, "public_key" : publicKey

}

return key_pair

##################################################################

## Register UDF in Snowflake

### Add packages and data types

from snowflake.snowpark.types import VariantType

snowpark_session.add_packages('cryptography')

### Upload UDF to Snowflake

snowpark_session.udf.register(

func = generate_key_pair

, return_type = VariantType()

, input_types = []

, is_permanent = True

, name = 'SNOWPARK_GENERATE_KEY_PAIR'

, replace = True

, stage_location = '@UDF_STAGE'

)

Similar to our last example, the core difference between this UDF and our basic examples are:

- On rows 5 and 6, we use standard Python functionality to import the required tools from the

cryptographylibrary, which are then leveraged in our Python function - On row 39, we instruct our Snowflake Snowpark Session to leverage the

cryptographylibrary. As this is a supported third-party package, this is all we need to do. - In our input on row 38 and our return type on row 44, we can see an example of using

VariantType().

Again, if we execute this code as part of a Python script, we can then call the function using the snowpark_session.sql().show() method to see the result.

Again, we can also call the function from within a Snowflake worksheet in the same way that we would any other function to see the result.

Importing Files and Libraries via a Stage

So far, we have demonstrated quite a lot in our miniature crash course into Python UDFs in Snowflake. Before we wrap up, I’d like to cover one last piece, which is how to import libraries that are not covered by the Anaconda Snowflake channel and how to import other external files.

For these examples, we will be uploading all of our required files with the snowpark_session.add_import() method.

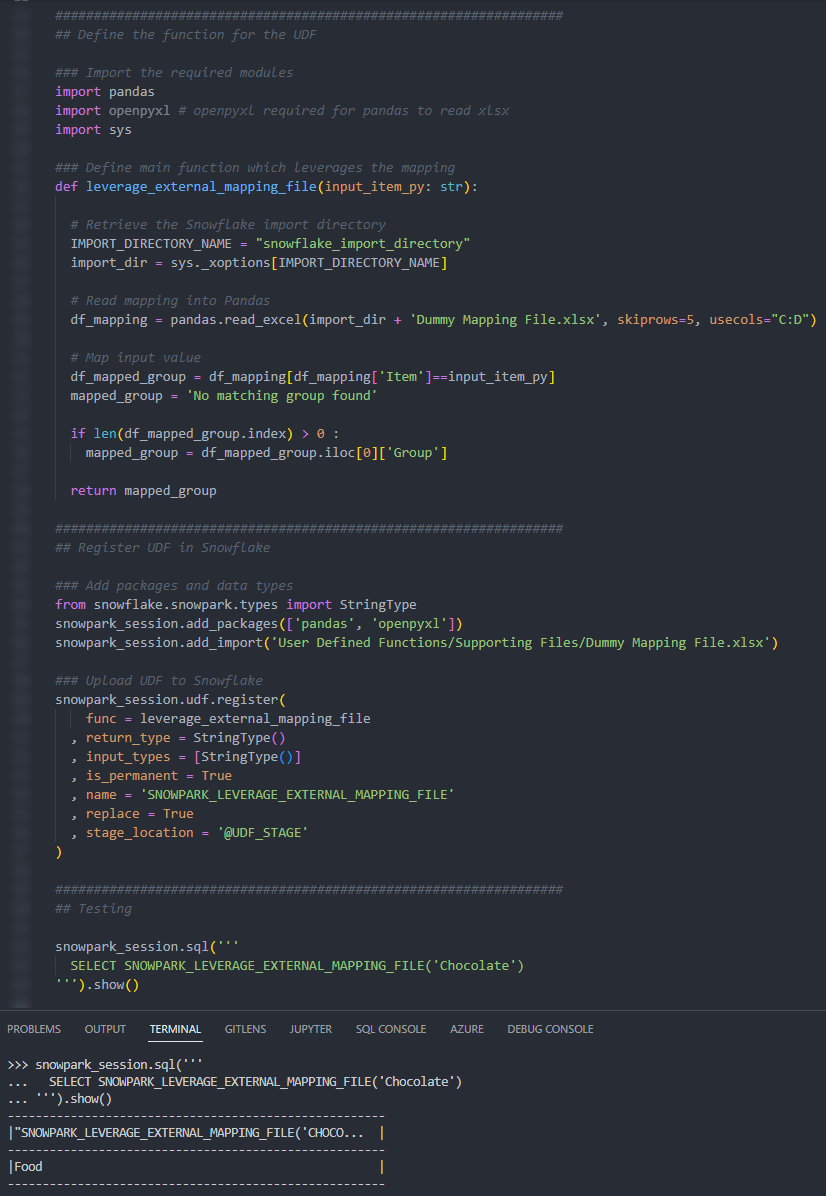

Import an External Excel xlsx File

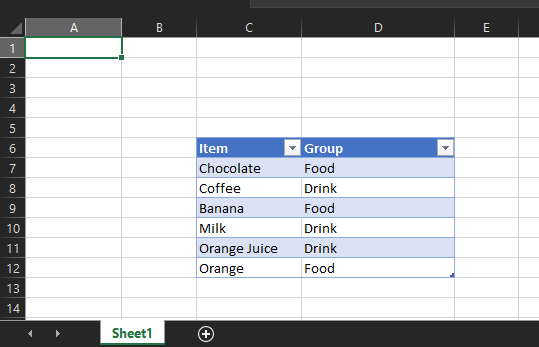

This example reads an Excel xlsx file into a pandas dataframe, then uses information in that file to map the input string to the corresponding output.

The file we will be leveraging is a very simple mapping file that looks like this:

The file is called “Dummy Mapping File.xlsx” and sits on our local machine in the following relative directory path:

User Defined Functions\Supporting Files\

Here is the code for the UDF itself:

##################################################################

## Define the function for the UDF

### Import the required modules

import pandas

import openpyxl # openpyxl required for pandas to read xlsx

import sys

### Define main function which leverages the mapping

def leverage_external_mapping_file(input_item_py: str):

# Retrieve the Snowflake import directory

IMPORT_DIRECTORY_NAME = "snowflake_import_directory"

import_dir = sys._xoptions[IMPORT_DIRECTORY_NAME]

# Read mapping into Pandas

df_mapping = pandas.read_excel(import_dir + 'Dummy Mapping File.xlsx', skiprows=5, usecols="C:D")

# Map input value

df_mapped_group = df_mapping[df_mapping['Item']==input_item_py]

mapped_group = 'No matching group found'

if len(df_mapped_group.index) > 0 :

mapped_group = df_mapped_group.iloc[0]['Group']

return mapped_group

##################################################################

## Register UDF in Snowflake

### Add packages and data types

from snowflake.snowpark.types import StringType

snowpark_session.add_packages(['pandas', 'openpyxl'])

snowpark_session.add_import('User Defined Functions/Supporting Files/Dummy Mapping File.xlsx')

### Upload UDF to Snowflake

snowpark_session.udf.register(

func = leverage_external_mapping_file

, return_type = StringType()

, input_types = [StringType()]

, is_permanent = True

, name = 'SNOWPARK_LEVERAGE_EXTERNAL_MAPPING_FILE'

, replace = True

, stage_location = '@UDF_STAGE'

)

The unique differences for this example are:

- On rows 13 and 14 within our Python function, we leverage the

syslibrary to access the location where Snowflake stores files that have been imported into the UDF. This is potentially the most useful snippet of code in this blog I found it to be the hardest thing to find in the documentation! - Row 34 includes a line to import the file from our local directory into our Snowflake Snowpark Session. This is a critical component for our Python function to be able to access the file during the

pandas.from_excel()function on row 17. - On row 33 we also demonstrate adding multiple packages in a single line.

The rest of the code in this script is specific Python code to download the Excel file into a dataframe, filter it to our specific item and return the matched group value.

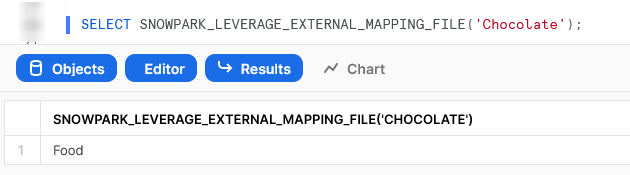

Again, if we execute this code as part of a Python script, we can then call the function using the snowpark_session.sql().show() method to see the result.

Again, we can also call the function from within a Snowflake worksheet in the same way that we would any other function to see the result.

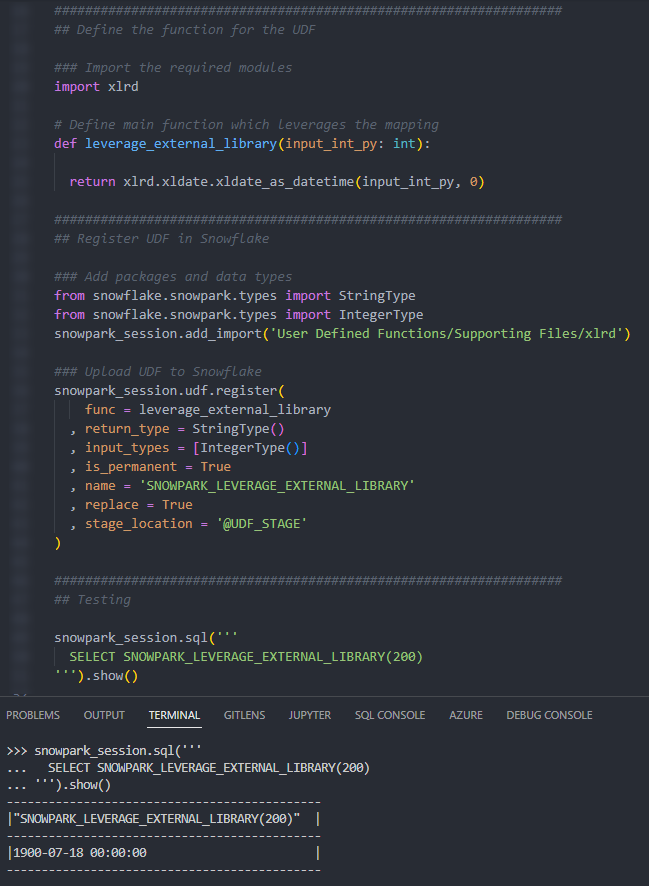

Import an Non-Standard External Library

This example is designed to leverage the xldate function of the xlrd library. This library is not included in the standard set of supported libraries, so it must be imported specifically.

Let’s see the code:

##################################################################

## Define the function for the UDF

### Import the required modules

import xlrd

# Define main function which leverages the mapping

def leverage_external_library(input_int_py: int):

return xlrd.xldate.xldate_as_datetime(input_int_py, 0)

##################################################################

## Register UDF in Snowflake

### Add packages and data types

from snowflake.snowpark.types import StringType

from snowflake.snowpark.types import IntegerType

snowpark_session.add_import('User Defined Functions/Supporting Files/xlrd')

### Upload UDF to Snowflake

snowpark_session.udf.register(

func = leverage_external_library

, return_type = StringType()

, input_types = [IntegerType()]

, is_permanent = True

, name = 'SNOWPARK_LEVERAGE_EXTERNAL_LIBRARY'

, replace = True

, stage_location = '@UDF_STAGE'

)

The unique differences for this example are:

- Row 18 includes a line to import the package from our local directory into our Snowflake Snowpark Session. This is a critical component for our Python function to be able to access the file during the

xlrd.xldate.xldate_as_datetime()function on row 10.

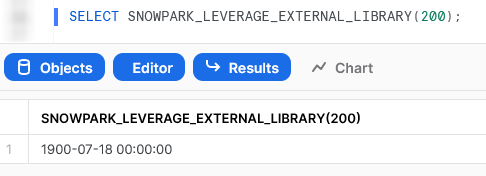

Again, if we execute this code as part of a Python script, we can then call the function using the snowpark_session.sql().show() method to see the result.

Again, we can also call the function from within a Snowflake worksheet in the same way that we would any other function to see the result.

Wrap Up

So, there we have it. We have covered a lot of content in this post, and some of these UDFs could potentially have their own blog post all on their own! My goal is to demonstrate how to create UDFs for different scenarios and provide that in a single post so that it is easy for readers to find and refer back to if needed.

I hope this helps you get started on your own journey with Python UDFs in Snowflake via Snowpark. Let us know what cool things you come up with. It’s always great to see new functionality lead to productive and useful innovation.

If you wish to see the code for all of this and other content that we have, you can find it on the InterWorks GitHub.

If you are interested in finding out about Python stored procedures, be sure to check out my matching “Definitive Guide to Creating Python Stored Procedures in Snowflake using SnowPark,” which is coming soon!