This series shows you the various ways you can use Python within Snowflake.

Python is a first class language in the Snowflake eco system, and there are multiple ways to use Python with Snowflake:

- Snowflake Connector for Python: Listed in Snowflake drivers, this is a standard connector to Snowflake written using Python. It supports developing applications using the Python Database API v2 specification (PEP-249) which include familiar objects like connection and cursors.

- Newer Snowflake Python API: Although Snowflake Connector for Python supports the Python Database API specification, and it’s good for querying and performing DDL/DML operations, it’s not a “pythonic” way to interact with Snowflake, as every interaction with Snowflake must be written in SQL. The newer Snowflake Python API aims to solve that. It is intended to provide comprehensive APIs for interacting with Snowflake resources across data engineering, Snowpark, Snowpark ML and application workloads using a first-class Python API.

- Lastly, Snowpark API for Python: Snowpark API aims to solve what the first two APIs cannot – querying and processing data at scale inside Snowflake without moving it. To be fair, Snowpark is available for three languages – Java, Scala and Python.

It is important to understand the strengths and differences with each of the Python APIs. The conventional Python connector for Snowflake is a minimal library where one cannot create dedicated Python objects for corresponding Snowflake objects, and everything is communicated through SQL. It’s not possible to have an object for a database for example. It will be just queries and results.

But newer Snowflake functionality includes many more exciting features and objects like tasks or ML models or compute pools etc. Won’t it be great to have an instance of a particular object in Python code itself and then to work with it in a standard pythonic way? That’s the gap which the newer library tries to fill. It provides native Python objects for every Snowflake constructs.

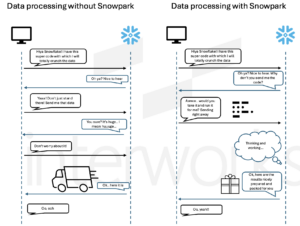

While working with any of the above libraries, it’s important to understand that both these libraries are pulling the data out of Snowflake and processing it wherever the code is running. If it’s a data intensive use case, then a lot of data is leaving Snowflake and being processed locally, which may not yield the optimum result. Snowpark is designed to circumvent that. Instead of bringing data to the code, code is brought to the data.

1. Advantages of Python within Snowflake

When citing advantages of Python within Snowflake, it is safe to assume that the API library in question is the Snowpark API library. Even Snowpark has two different execution modes. One can write Snowpark code as a local application by importing Snowpark library in their own Python application. Alternatively, code can be directly written using native Snowflake objects like user defined (tabular) functions (UDFs or UDTFs) or stored procedures (SPs) in Snowflake. In the client side implementation using Snowpark, all the code except the Snowpark code is run on the client side, whereas the Snowpark code is run on the serverless compute resources in Snowflake through a session. When Snowpark is used to create a UD(T)F or a SP directly in Snowflake, the entire code is both stored and run in Snowflake.

In both cases, the application benefits from the scalability of Snowflake and reduced data transfer due to the execution of data related code directly in Snowflake.

Moreover, the Python UD(T)Fs and SPs created using Snowpark can be called directly from functions and procedures written in other Snowpark supported languages or even in a SQL query. Snowflake will automatically handle the interchange of data and types.

Further reading:

- A Definitive Guide to Python UDFs in the Snowflake UI

- A Definitive Guide to Python Stored Procedures in the Snowflake UI

- A Definitive Guide to Snowflake Sessions with Snowpark for Python

2. Can Python be used without Snowpark?

Yes. One can use the traditional or the newer Python libraries for Snowflake to interact with Snowflake without using Snowpark.

3. Difference between using Snowpark and Anaconda. Does one have an advantage over the other? Can one be used over the other? What are the pros and cons?

Snowpark and Anaconda are not directly comparable. Snowpark is a framework and one of the framework implementations is a Python library for Snowpark. Anaconda, on the other hand, is a distribution of Python. A distribution of Python includes the Python interpreter, runtime and system libraries. Along with that, Anaconda is also a Python package repository which hosts additional packages for Python. Unlike the well-known Python package index PyPi, Anaconda has different acceptance criteria for packages to be included in their package repository.

The key way in which Snowpark and Anaconda are related is through Anaconda’s Snowflake Channel. This channel hosts all the third-party packages that are natively available to Snowflake UD(T)Fs and Stored Procedures in a Snowpark Python environment. The channel can also be used with a local Anaconda installation to set up a local Python environment that matches Snowflake’s own internal environment, which is useful when developing or testing processes locally before pushing them up to Snowflake. More information here.

4. What packages are available in Snowpark, and can you turn them off?

To find out what packages are available in Snowpark, check out the Snowflake channel for Anaconda: Snowflake Snowpark for Python (anaconda.com). From command line client Snowflake CLI, use package lookup command.

To turn off certain packages, use packages policies.

5. Difference of using Python on-prem over Snowpark

The differences are same as using the standard Snowflake Python libraries mentioned above.

6. What controls (security and otherwise) does Snowpark have so that people don’t download malicious packages for Python?

Packages policies can be created and applied account wide in Snowflake to specify exactly which third party packages can be used with Snowpark. Besides that, Snowpark Python has inherent security restrictions that connecting to outside host is not permitted without creating special integration objects first. Also, writing to permanent file systems outside of Snowflake stages is prohibited (Security practices).

An implementation example could be found in Snowflake External Access: Trigger Matillion DPC Pipelines.

7. Any other IT security concerns with Snowpark?

Snowpark runs on a restricted Python runtime which is further secured by Snowflake’s access control model and base infrastructure. It’s much more secure to run Python code in Snowflake than to run outside of it.

More Questions?

Reach out to us here if you have more questions, or want to work with us on your Snowflake and Python needs.