If you have data stored in an Azure Storage container and wish to ingest it into Snowflake using native functionality, you will most likely be considering either direct COPY INTO statements or an automated Snowpipe to ingest the data. Both of these options would expect to use a Snowflake object called an external stage, which facilitates access between Snowflake and Azure.

You can find more information on stages in this previous post if desired.

Whilst it is possible to include authenticating credentials directly in the configuration of a Snowflake stage, it is recommended to instead leverage Storage Integrations to keep your stages and your authentication separate. With this approach, the available storage containers can be restricted at the integration layer, and users can leverage the integration when creating their stages. This has the additional benefit that users can only create stages that connect to storage containers which are authorised by the storage integration and are prevented from accessing other storage containers.

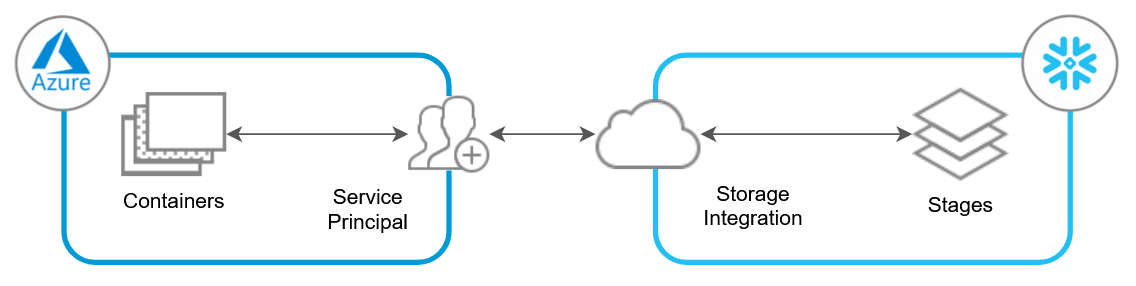

Flow Diagram

The following diagram provides a very simple overview of the flow involved for a storage integration between Snowflake and Azure.

Requirements

To create a storage integration between Snowflake and Azure, the following components are required:

- Storage container(s) in Azure that you would like Snowflake to access

- Snowflake

ACCOUNTADMINrole, or another role with theCREATE INTEGRATIONprivilege - Sufficient access in Azure to approve the creation of a new service principal and grant it the required access to the storage container(s)

Step 1: Retrieve Azure Information

Before we can create a storage integration, we need to know the location of the resources in Azure. Most importantly, we need to know the Azure Tenant ID. We also require the location of any specific storage container in Azure.

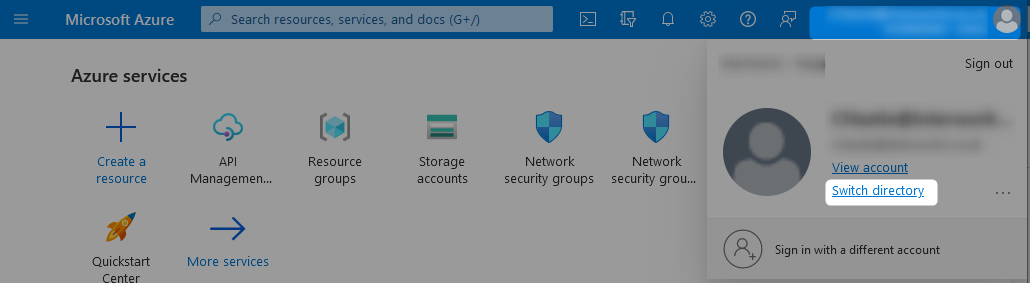

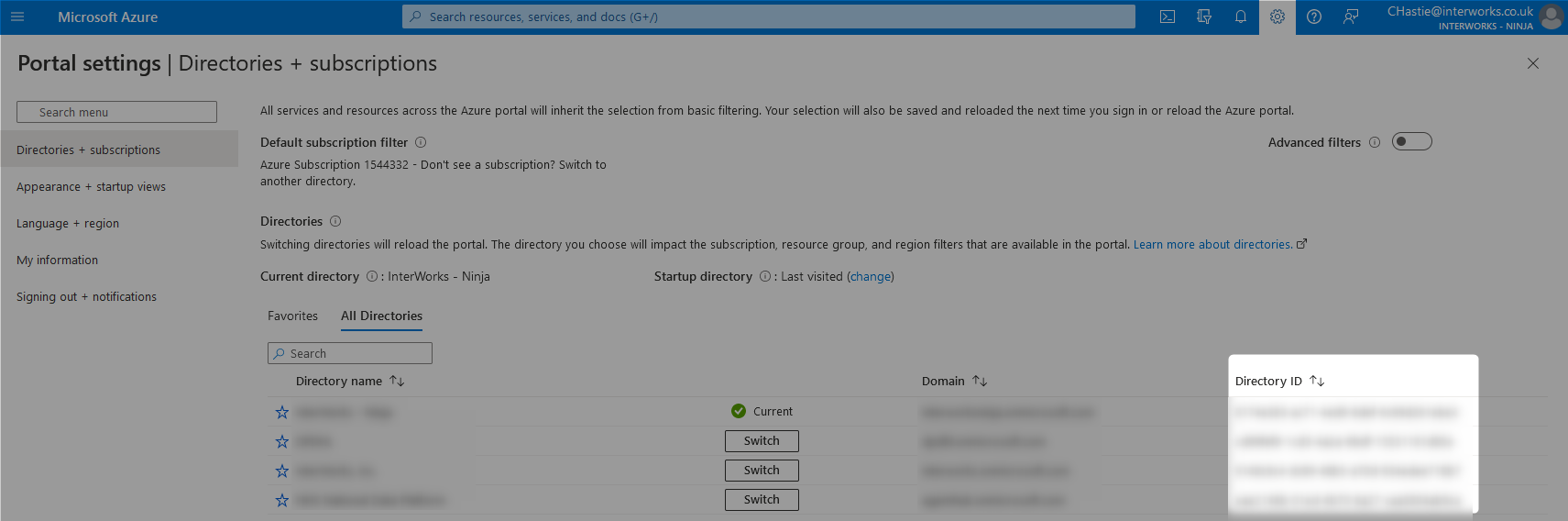

Step 1a: Retrieve the Azure Tenant ID

There are many ways to retrieve the ID for a given Azure tenant. My personal favourite method is by attempting to switch directory within the Azure portal as this provides the “Directory ID” for each Azure tenant. In this case, the “Directory ID” is the tenant ID that we are looking for.

The following pair of screenshots demonstrate this method:

You may find it easier to record your Azure Tenant ID for later reference in a text file or similar.

Our important demo variables so far:

Azure Tenant ID: 1234a567-bc89-1ab2-3cde-4f56a789bcd1

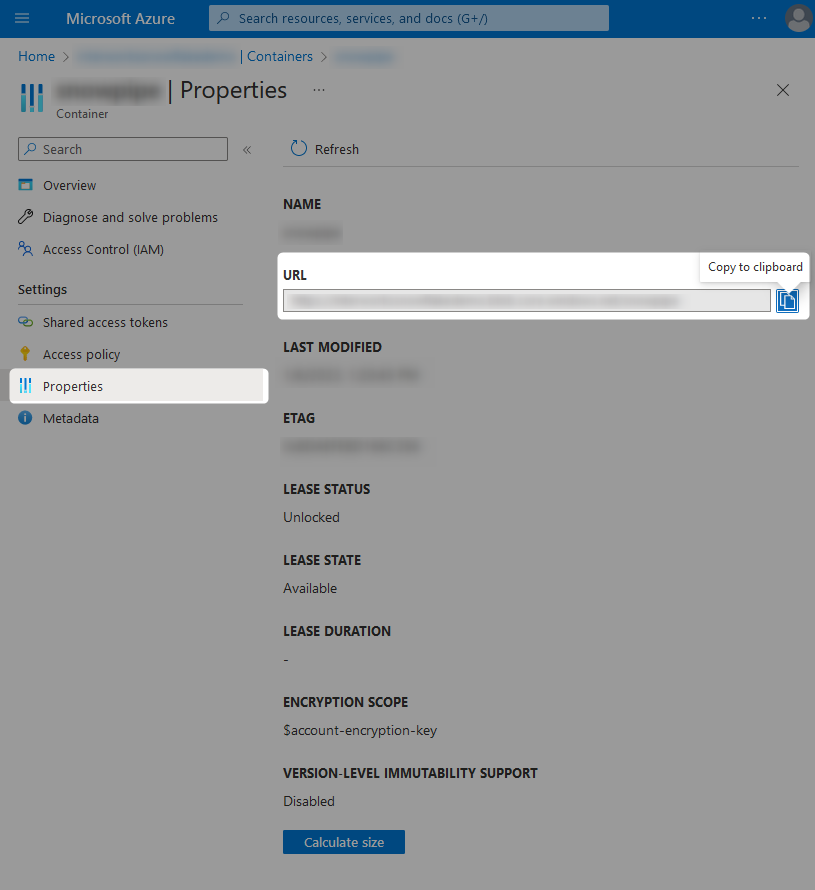

Step 1b: Retrieve the URL for Each Storage Container

For each storage container that you would like Snowflake to access, you must retrieve the URL. Personally, I find it easiest to retrieve these through the Azure portal by opening the specific container and visiting the Properties pane.

The following screenshot demonstrates this method:

As with the Azure Tenant ID, you may find it easier to record these URLs for later reference in a text file or similar.

Our important demo variables so far:

Azure Tenant ID: 1234a567-bc89-1ab2-3cde-4f56a789bcd1 Storage Container URL 1: azure://mystorageaccount.blob.core.windows.net/my-storage-container-1 Storage Container URL 2: azure://mystorageaccount.blob.core.windows.net/my-storage-container-2

It is important to note at this time that we place

azure://at the start of the URL instead ofhttps://as Snowflake expects this format.

Step 2: Create the Storage Integration Object in Snowflake

As mentioned above, this process can only be achieved using the Snowflake

ACCOUNTADMINrole, or another role with theCREATE INTEGRATIONprivilege.

We now have the required variables to create our storage integration. This is achieved by executing a SQL script within Snowflake with the following template:

CREATE STORAGE INTEGRATION <INTEGRATION_NAME>

TYPE = EXTERNAL_STAGE

STORAGE_PROVIDER = 'AZURE'

ENABLED = TRUE

AZURE_TENANT_ID = '<retrieved in step 1a>'

STORAGE_ALLOWED_LOCATIONS = (

'azure://<storage account>.blob.core.windows.net/<storage container 1>/'

, 'azure://<storage account>.blob.core.windows.net/<storage container 2>/'

)

;

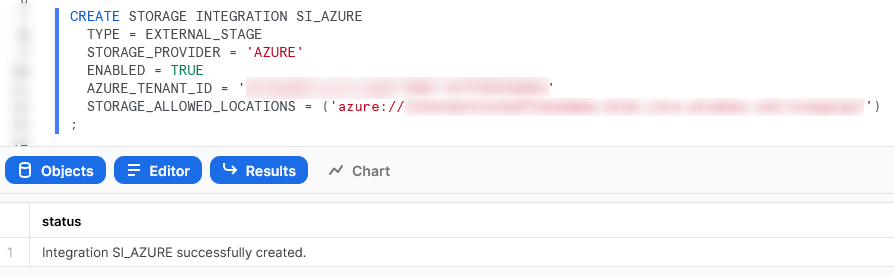

Entering our demo variables, we can create a new storage integration called SI_AZURE with the following script:

CREATE STORAGE INTEGRATION SI_AZURE

TYPE = EXTERNAL_STAGE

STORAGE_PROVIDER = 'AZURE'

ENABLED = TRUE

AZURE_TENANT_ID = '1234a567-bc89-1ab2-3cde-4f56a789bcd1'

STORAGE_ALLOWED_LOCATIONS = (

'azure://mystorageaccount.blob.core.windows.net/my-storage-container-1'

, 'azure://mystorageaccount.blob.core.windows.net/my-storage-container-2'

)

;

Executing this form of statement in Snowflake will yield the following result:

At this stage, the Storage Integration object exists in Snowflake but it cannot access Azure. It must be authorised via Azure itself.

Step 3: Authorise the Snowflake Storage Integration in Azure

Now that we have created our Storage Integration in Snowflake, we must authorise it in Azure.

Step 3a: Retrieve the Required Information About the Snowflake Storage Integration

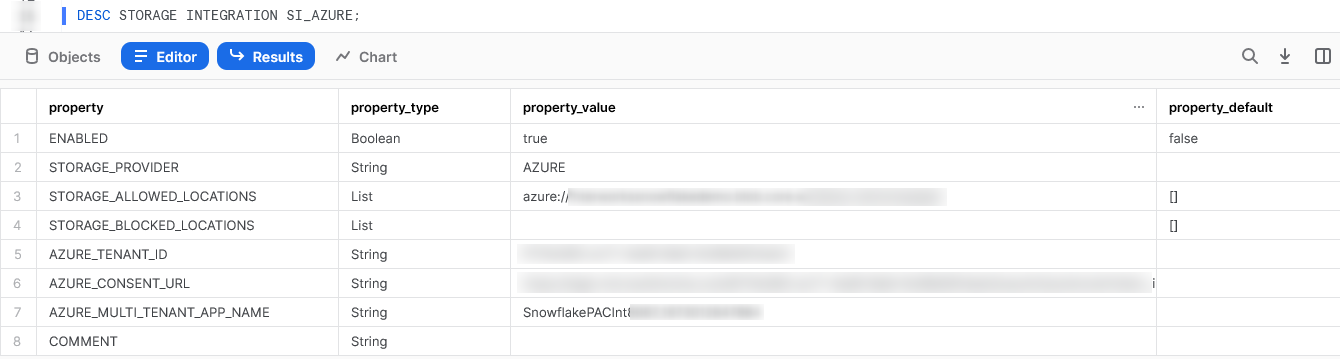

Snowflake helpfully provides the information we need in the output of a DESCRIBE command of the following template:

DESCRIBE STORAGE INTEGRATION <INTEGRATION_NAME>;

For example, we can execute the following statement to see the details for our new SI_AZURE storage integration:

The important information that we require here are the AZURE_CONSENT_URL and the AZURE_MULTI_TENANT_APP_NAME fields. As with the previous variables, you may find it easier to record these for later reference in a text file or similar.

Our important demo variables so far:

Azure Tenant ID: 1234a567-bc89-1ab2-3cde-4f56a789bcd1 Storage Container URL 1: azure://mystorageaccount.blob.core.windows.net/my-storage-container-1 Storage Container URL 2: azure://mystorageaccount.blob.core.windows.net/my-storage-container-2 AZURE_CONSENT_URL: https://login.microsoftonline.com/1234a567-bc89-1ab2-3cde-4f56a789bcd1/oauth2/authorize?client_id=a123bcd4-1234-1a23-bcd4-1a23b45678cd&response_type=code AZURE_MULTI_TENANT_APP_NAME: SnowflakePACInt1234_1234567890123

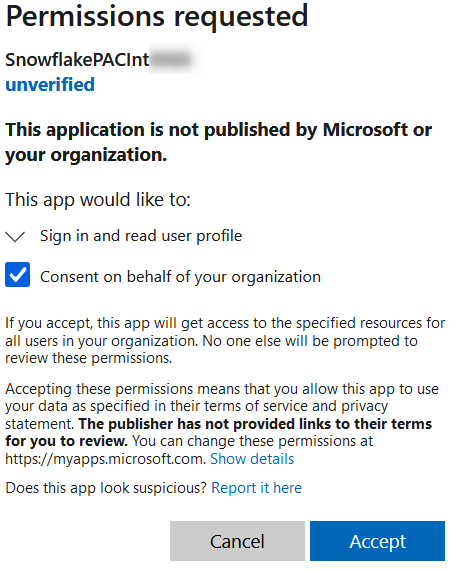

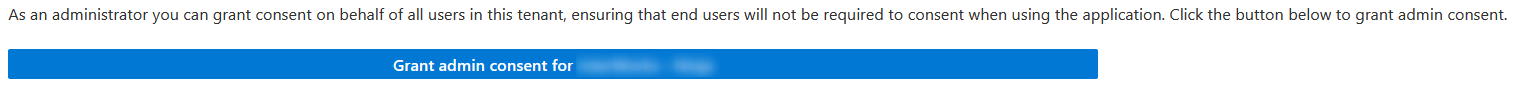

Step 3b: Approve the Authorisation in the Azure Consent URL

Access the AZURE_CONSENT_URL whilst logged into Azure as a user with admin privileges to authenticate the storage integration. After clicking on the consent URL and signing in with an administrator account, the following prompt will be displayed:

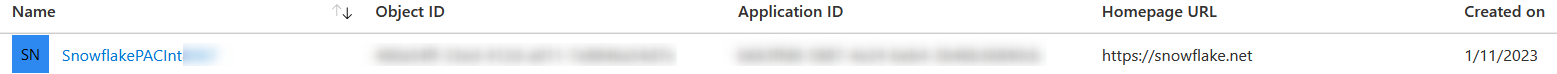

Aftering consenting on behalf of your organisation and accepting, the app will appear under Azure AD - Enterprise applications in your tenant, as shown below:

Finally, you may be prompted with the following option which you can ignore:

This has created a new service principal in your Azure tenancy with a name matching the first part of the AZURE_MULTI_TENANT_APP_NAME before the underscore. Again, you may find it easier to record these for later reference in a text file or similar.

Our important demo variables so far:

Azure Tenant ID: 1234a567-bc89-1ab2-3cde-4f56a789bcd1 Storage Container URL 1: azure://mystorageaccount.blob.core.windows.net/my-storage-container-1 Storage Container URL 2: azure://mystorageaccount.blob.core.windows.net/my-storage-container-2 AZURE_CONSENT_URL: https://login.microsoftonline.com/1234a567-bc89-1ab2-3cde-4f56a789bcd1/oauth2/authorize?client_id=a123bcd4-1234-1a23-bcd4-1a23b45678cd&response_type=code AZURE_MULTI_TENANT_APP_NAME: SnowflakePACInt1234_1234567890123 Azure Service Principal for the Storage Integration: SnowflakePACInt1234

Azure may take a few minutes to create the new service principal. Often, it is near-instant; however, it may take up to an hour depending on the performance of the platform and any other activity.

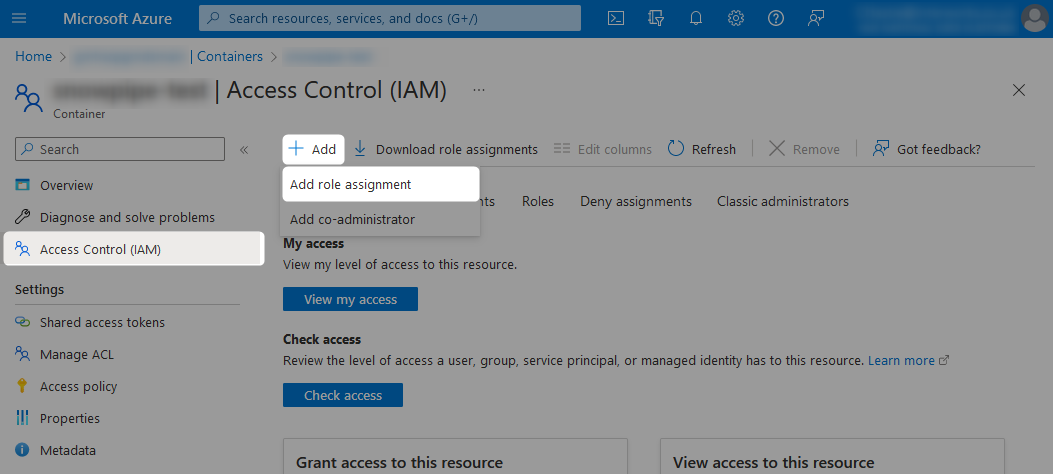

Step 3c: Grant the Azure Service Principal the Desired Access to Each Storage Container

Now that Snowflake is able to use a storage integration to leverage an Azure service principal, the only remaining step is to ensure that the Azure service principal has the desired access to each of the Azure storage containers.

To grant the required access, add a new role assignment under the Access Control (IAM) pane from within the storage container.

You may wish to grant the service principal the desired access at the storage account level instead of the container level. This is only if you want to allow access to all containers within the storage account.

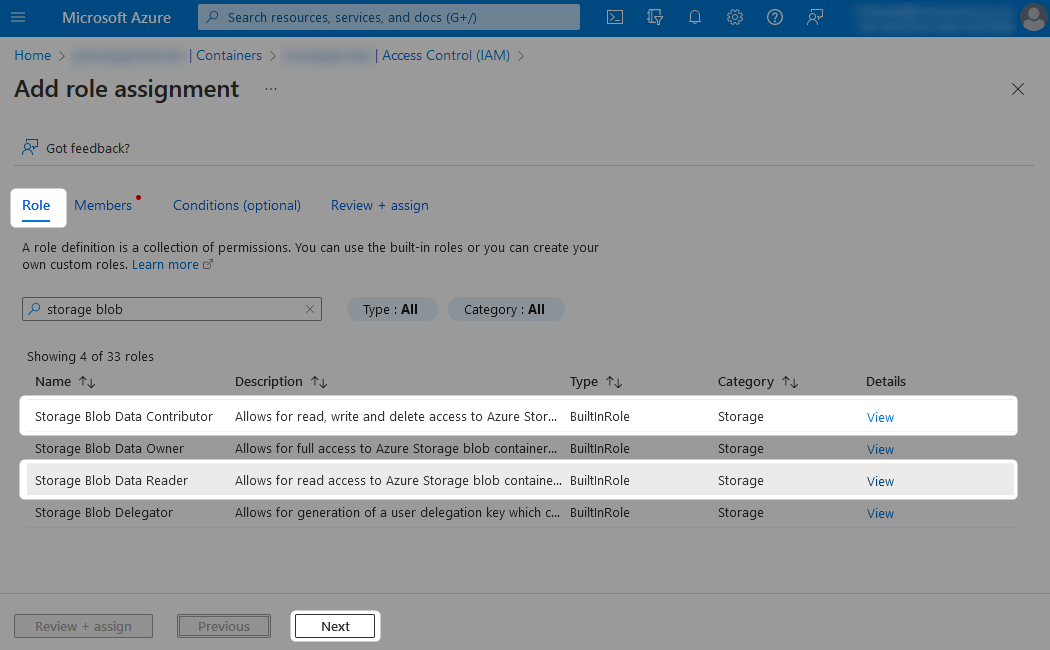

When adding the new role assignment, choose which level of access you wish to grant from the list of possible roles.

- If you wish for read access only, the

Storage Blob Data Readerrole is required - If you wish for deeper access, such as for unloading files from Snowflake into the container, then the

Storage Blob Data Contributorrole is required

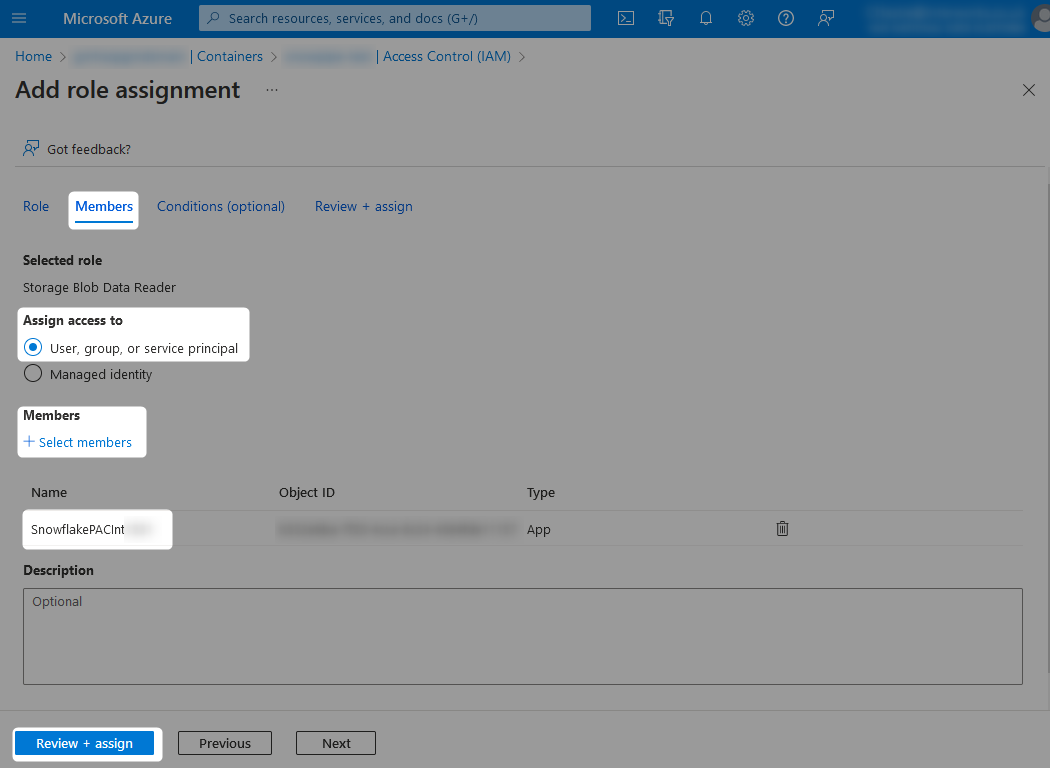

Finally, locate the service principal which you wish to grant access to. This is the Azure Service Principal for the Storage Integration from our tracking sheet, which is SnowflakePACInt1234 in our example.

The service principal should now have the desired access on the storage container. Be sure to do this for each container/account that you wish the Snowflake storage integration to access.

Testing

Now that we have created our storage integration, it would be wise to test it! The easiest way to test the functionality is to see if we can list the files within the Azure storage containers by leveraging a stage in Snowflake.

In Snowflake, we can create a stage that leverages our storage integration by executing a command with the following template:

create or replace stage <stage name> storage_integration = <storage integration name> url = 'azure://<storage account>.blob.core.windows.net/<storage container>/' ;

For our demonstration, we execute the following code to create two stages, which facilitates access to two different storage containers:

create or replace stage STG_SI_DEMO_STAGE_1 storage_integration = SI_AZURE url = 'azure://mystorageaccount.blob.core.windows.net/my-storage-container-1/' ; create or replace stage STG_SI_DEMO_STAGE_2 storage_integration = SI_AZURE url = 'azure://mystorageaccount.blob.core.windows.net/my-storage-container-2/' ;

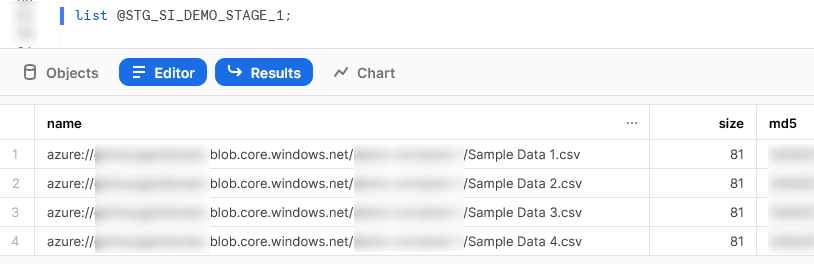

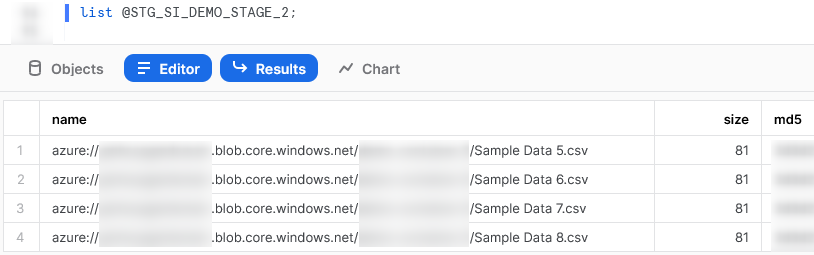

Now that we have created our stages, we can use the LIST command to test the access. The following two screenshots demonstrate the outputs of our list commands.

Our first stage has access to four sample data CSVs:

Our second stage has access to another four sample data CSVs:

Wrap Up

In this article we have demonstrated how to create a Storage Integration object in Snowflake that facilitates access to multiple storage containers in Azure. The best part of this is that engineers no longer need to figure out the authentication when creating stages in Snowflake to interact with these containers.