This series takes you from zero to hero with the latest and greatest cloud data warehousing platform, Snowflake.

One of the fantastic features in Snowflake is the support of zero-copy cloning. This is the capability to duplicate an object while neither creating a physical copy nor adding any additional storage costs. This allows for much greater freedom in the environment as costs are kept low without limiting potential.

To quote Snowflake’s documentation directly:

For databases, schemas, and tables, a clone does not contribute to the overall data storage for the object until operations are performed on the clone that modify existing data or add new data, such as:

– Creating a new, populated table in a cloned schema.

– Adding, deleting, or modifying rows in a cloned table.

Why Clone an Object?

There are plenty of reasons to clone an object not only in Snowflake but also in any data warehouse. Usually in the wild, I see most cloning taking place for one of the following three reasons:

- To support different environments such as development, test, backup, etc.

- To experiment with potential modifications/development without creating a new environment, whilst removing risk to the source object(s)

- To achieve a one-off task that benefits from its own source object(s)

Of course, this is not an exhaustive list of scenarios when a clone would be beneficial.

Which Objects Can Be Cloned?

Before we discuss how to clone an object, it is worth considering what objects can be cloned and any caveats that may exist. To begin, below is a list of all objects that can be cloned at the time of writing. An updated list can be retrieved from Snowflake’s Documentation on Cloning:

- Data Containment Objects

- Databases

- Schemas

- Tables

- Streams

- Data Configuration and Transformation Objects

- Stages

- File Formats

- Sequences

- Tasks

As you can see, I have divided these objects into two groups: Data Containment Objects and Data Configuration and Transformation Objects. This is due to how the cloning functionality varies for objects in each group.

Also, note that only permanent and transient tables support time travel with cloning (discussed below). Temporary tables can be cloned within a session, but time travel is not supported.

Cloning an Object

To clone an object, a single SQL statement is required:

CREATE <object_type> <object_name> CLONE <source_object_name>

This statement will create a new object by cloning an existing object. The above is a simplified version of the statement; the statement below contains the full syntax:

CREATE [ OR REPLACE ] { DATABASE | SCHEMA | TABLE | STREAM | STAGE | FILE FORMAT | SEQUENCE | TASK } [ IF NOT EXISTS ] <object_name>

CLONE <source_object_name>

It is important to keep in mind that the clone is still a brand-new object. Whilst the metadata and data will be the same as the source object, the new clone will have its own history as far as time travel and data loading are concerned.

Cloning with Time Travel

Another great feature in Snowflake is the Time Travel functionality, which is how Snowflake stores previous versions of an object for a set period of time and allows users to retrieve these versions at will (check out this detailed blog post on the Time Travel feature). For the objects in the Data Containment Objects group above, time travel can be leveraged during cloning. This results in clones of the objects as they existed at the required time, instead of how they currently exist.

This is achieved using the AT or BEFORE clauses, which supports specific timestamps, offsets from the current time or statement IDs to use as a reference. In each of these scenarios, Snowflake will return the state of the object as it existed directly at/before that timestamp, or directly following/before the completion of the statement with the corresponding statement ID.

This is achieved using the following SQL syntax:

CREATE [ OR REPLACE ] { DATABASE | SCHEMA | TABLE | STREAM } [ IF NOT EXISTS ] <object_name>

CLONE <source_object_name>

[ { AT | BEFORE } ( { TIMESTAMP => <timestamp> | OFFSET => <time_difference> | STATEMENT => <id> } ) ]

For example, we could clone a table called MY_SOURCE_TABLE into a new table called MY_JAN20_TABLE specifically for January 2020 by cloning the table as it existed before midnight on 1st February 2020:

CREATE TABLE MY_JAN20_TABLE

CLONE MY_SOURCE_TABLE

BEFORE (TIMESTAMP => TO_TIMESTAMP_TZ('01/02/2020 00:00:00', 'dd/mm/yyyy'))

Similarly, we could create a table called MY_OFFSET_TABLE containing data from 30 minutes ago using the OFFSET input, where the OFFSET input is provided in seconds:

CREATE TABLE MY_OFFSET_TABLE

CLONE MY_SOURCE_TABLE

BEFORE (OFFSET => -60 * 30)

When cloning using time travel, there are four key points to note:

- An error is returned if the source object did not exist at the time/point specified in the

ATorBEFOREclause. - Child objects that did not exist at the time/point specified in the

ATorBEFOREclause will simply not be cloned. This will not return any errors. - An error is returned if any of the objects required to clone the object have not maintained historical data. That is to say, the data retention time for the object does not include the time/point specified in the

ATorBEFOREclause. - The time travel data retention maintains both data and metadata, which means a standard time travel query can retrieve historic names of columns and similar metadata. A clone on the other hand retrieves the data from the historical store, however the metadata is retrieved the current state of the source object. For example if a column has been renamed in a table, the data in the table will retrieved based on the time/point specified in the

ATorBEFOREclause whilst the column names will be retrieved from the current state.

Child Objects in a Clone

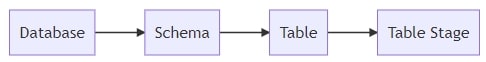

Some objects in Snowflake contain child objects, such as a database containing schemas, which contain tables, which contain table stages:

When an object is cloned, its children will usually be cloned as well. The exceptions to this rule are:

- External Tables

- Internal Named Stages

These should not be confused with internal table stages, which are cloned alongside their parent table.

A Critical Note When Child Objects Are Cloned

When any form of internal stage is cloned (usually in the case of an internal table stage), it is vital to note that this stage will be empty. On a similar thread, cloned streams will also be empty and cloned pipes and tasks will by paused by default.

Cloning Objects with Child Pipes

One possible child for an object would be a pipe. For example, a schema may include a pipe that loads data into one of its tables. Whether or not a child pipe is cloned along with its parent depends on the pipe itself. Specifically, this depends on whether the pipe references internal named stages. Recall that internal named stages are not cloned. This extends to pipes that use internal named stages. However, if a pipe references external stages then it will be cloned.

When a pipe is cloned, by default it will be paused. This is to avoid unnecessary data loading as a clone may not require additional data loading. This also allows the user to confirm the pipe will execute the correct action. This is especially important when the pipe specifies either the full namespace or the schema within its definition. This will be maintained and could potentially result in data being loaded into the wrong location.

For example, assume a pipe is defined as follows:

CREATE PIPE "PIPE_A" AS COPY INTO "DATABASE_A"."SCHEMA_A"."TABLE" FROM @"DATABASE_A"."SCHEMA_A"."STAGE"

Regardless of how PIPE_A is cloned, its defining statement is clear and rigid. It will always load data from a specific stage and load it into a specific table.

Now, assume another pipe is defined as follows:

USE DATABASE "DATABASE_A"; CREATE PIPE "PIPE_B" AS COPY INTO "SCHEMA_A"."TABLE" FROM @"SCHEMA_A"."STAGE";

If PIPE_B is cloned, its new functionality will be determined by exactly how it was cloned. If the clone exists within the same database, say DATABASE_A, the cloned pipe will perform exactly the same functionality as the original and still load data into "DATABASE_A"."SCHEMA_A"."TABLE".

Wrap Up

So there we have it—an overview of cloning and some of the nuances across objects. Whilst we have not worked towards a specific target in this post, I hope it has lifted the curtain on some of the finer details of cloning. If you have any specific questions on this or want to offer up your own use cases for cloning, I’d love to read about it in a comment below. Thanks for reading!