To ingest data stored in AWS S3 into Snowflake, you can use either direct COPY INTO statements or a Snowpipe for automation. Both options require a Snowflake object called an external stage to connect Snowflake to AWS. It’s recommended to use Storage Integrations for authentication instead of directly including credentials in the stage configuration. This way, access to S3 Buckets can be restricted at the integration level and users can only create stages connecting to authorised S3 Buckets through the integration, preventing unauthorised access to other buckets.

Check out the related “Configuring Storage Integrations Between Snowflake and Azure Storage” blog post from Chris Hastie for more on Storage Integrations.

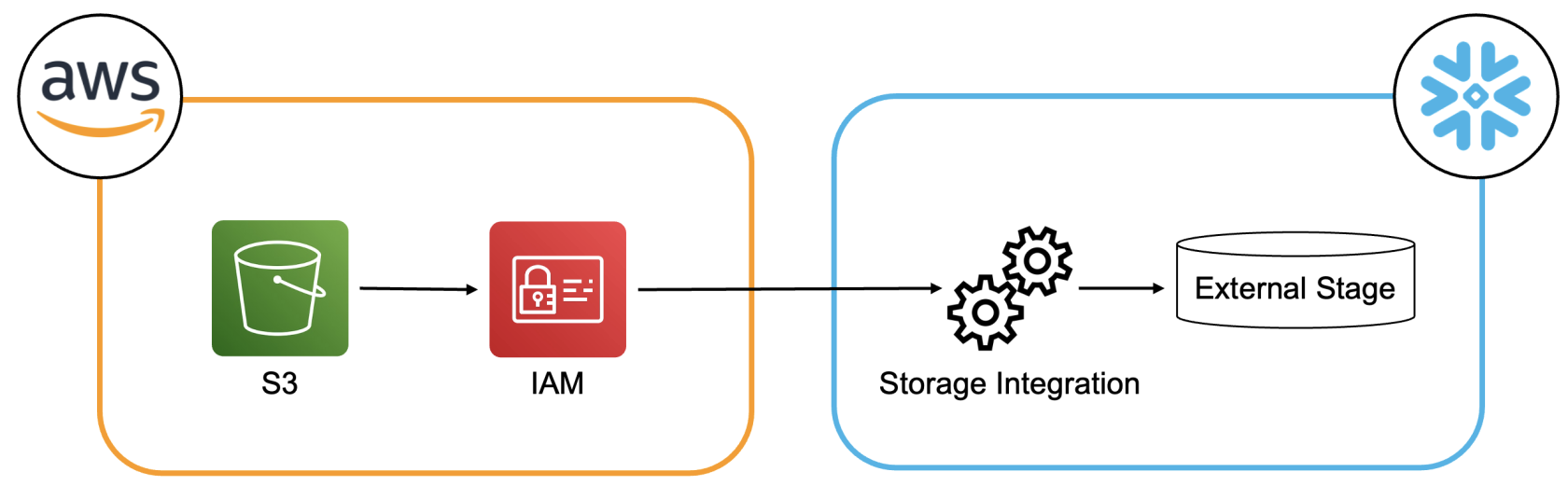

Flow Diagram

This diagram gives an illustration of the components involved for configuring a storage integration between Snowflake and AWS.

Requirements

This article assumes that the following objects are already configured:

- An AWS S3 bucket

- A Snowflake user with ACCOUNTADMIN role or another role with granted CREATE INTEGRATION privileges

Step 1: Create an AWS IAM ROLE

The AWS IAM role should only have the necessary privileges for the intended purpose, and not any additional ones. For guidance on defining these privileges, refer to Snowflake’s documentation.

For the purpose of this demo an AWS managed policy for Amazon S3 called AmazonS3FullAccess is used.

The next GIF illustrates the creation of an IAM role called snowflake-s3-role with authorization from an other AWS account to carry out actions using a specified external ID. The current Account ID can be used as the AWS account ID for now and a placeholder such as “0000” can be temporarily entered as the external ID. These values can be later adjusted after creating a storage integration in Snowflake.

Step 2: Create a Storage Integration

Next, we can proceed to Snowflake to create the storage integration. It is important to note that an ACCOUNTADMIN is required for this task. A storage integration in Snowflake is an object that stores a generated identity for the external cloud storage.

The following values should be specified when creating the storage integration:

- storage_aws_role_arn: The ARN of the role created in step 1 is required.

- storage_allowed_locations: The location that the storage integration is allowed to access should be specified here. It’s important to note that this location must be included in the policy attached to the AWS role. In this case, we have attached the policy AmazonS3FullAccess, which grants full access to Amazon S3.

- Enabled = true: Specifies whether the storage integration can be used in stages.

To create the storage integration, run the following SQL statement with your own values replacing the placeholders <>:

create storage integration <integration_name>

type = external_stage

storage_provider = s3

storage_aws_role_arn = '<role ARN>'

enabled = true

storage_allowed_locations = ('s3://<bucket_name>/');

Step 3: Create a Trust Relationship for the Storage Integration

Afterward, we can execute the following query to retrieve information about the storage integration:

desc integration <integration_name>;

This will return 7 rows of information. From this, we need to copy the values of STORAGE_AWS_IAM_USER_ARN and STORAGE_AWS_EXTERNAL_ID. These two values should then be used to replace the corresponding placeholders from step 1.

To do this, select the IAM role in AWS and navigate to Trust relationships. Then, click on Edit trust policy. Replace the values of “AWS” and “sts:ExternalId” with the two IDs obtained from the previous query.

The GIF below shows the process for granting access to the S3 storage integration.

Step 4: Create an External Stage

With the above setup, we can now create an external stage using the following SQL statement:

create stage <stage_name> storage_integration = <integration_name> url = 's3:// <bucket_name>/';

To approve the connection, you can drop any file into the S3 bucket and then run the following SQL statement in Snowflake:

List @<stage_name>;

The query should list all existing files in the S3 Bucket.

Wrap Up

In this article, we showed how to create a Storage Integration in Snowflake that provides access to all S3 Buckets in an AWS account (with the option to restrict access). The advantage of this is that engineers no longer need to handle authentication when creating Snowflake stages to interact with S3 Buckets.