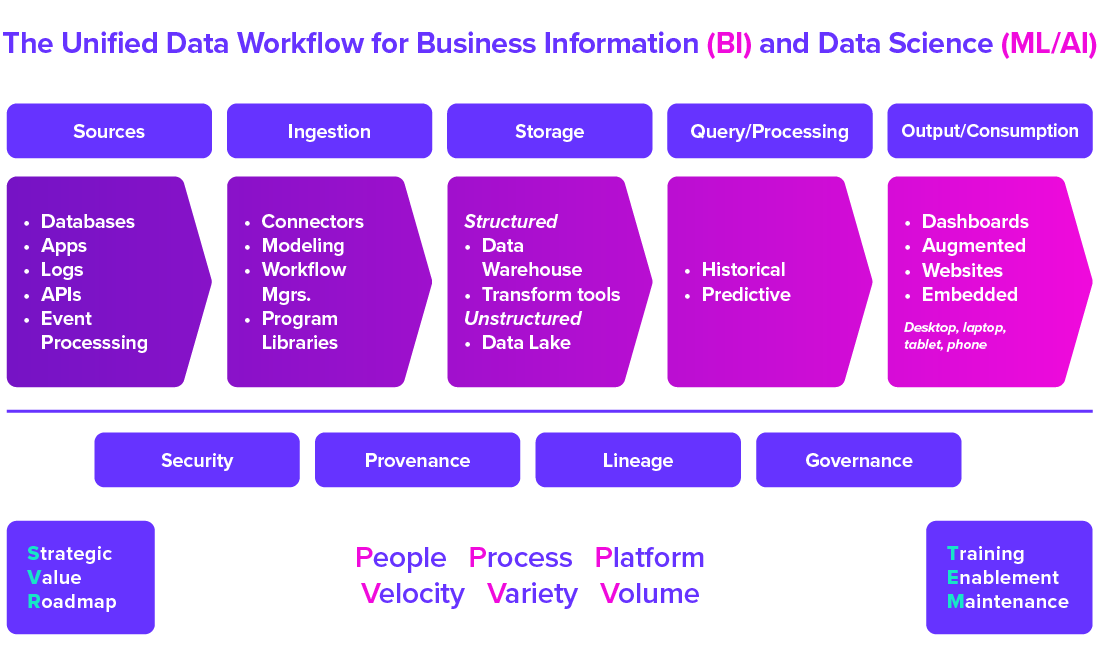

A system-wide data workflow for an analytical business information system is a high-level map of a BI System. It summarizes data movement from data sources, through the ELT processes and, finally, to the presentation layer.

Figure 3.3.1 The Unified Data Workflow

The figure highlights the broad categories of sources, activities, perspectives and methods you will use to extract data from myriad data sources and land it in a database containing both structured and unstructured data. We refer to the structured data in a database as a data warehouse and the unstructured data as a data lake. Of course, your IT staff or consultants must create the extract, load and transform (ELT) processes. They must also develop the database design (schema) so that your consulting partner and analysts can build interactive dashboards.

Later, you may apply machine learning algorithms and artificial intelligence (ML/AI) for predictive analytics. Some of that data will come from your data warehouse, and some will come from sources that are not part of your database. The combined data may sit in your data lake or come from other data sources moved into a dashboard. The query/processing heading summarizes these activities.

Most of your users will interact with the data in the output and consumption layer, using dashboards that may be augmented by search tools.

You must develop an understanding of operational data workflows during the discovery work you conduct while developing a Strategic Vision Roadmap (SVR). Your aim in the SVR is to document current and anticipated business data needs, identify the right cloud platform and software, and present your understanding of data consumption patterns, processing speed requirements and frequency (Velocity/Variety/Volume).

To maximize value from your BI investments, you must provide initial and ongoing training for your team and perform regular system maintenance so that your BI Systems continue to run as quickly and efficiently as possible.

Preventing unauthorized access is essential for protecting proprietary data. You must balance this requirement against the need to make it easy for end users to find what they need (governance) and know where the data comes from (provenance/lineage).

Ad Hoc Environment Requirements

More than 50% of your data will reside in an environment that doesn’t require a formalized approval process before publication. These unaudited data workflows are built using established data sources or sources of minimal use in the organization. Some data will be of temporary value. For example, a common need is understanding why profits decline on a particular product line. Is the impact temporary or permanent? Ad hoc analysis seeks to uncover these details. In mature BI environments, it is common to see many dashboards that are of temporary (ad hoc) value. Many of these transient analyses are critical for identifying cause and effect.

Applying a formalized, time-consuming approval process to this ad hoc data is unnecessary (wasteful). It may cause people to bypass established auditing processes if control is heavy-handed. Allow this ad hoc analysis, discovery and reporting to be published without a formal review. Evidence of a large volume of this activity is a clear sign that your employees are getting value from the BI System.

As long as the publisher of the ad hoc information is transparent about the sources, lineage and provenance, explains the steps taken to maximize quality, and describes potential errors and omissions, this is a perfectly acceptable practice. Would you require a formal process for vetting every spreadsheet your employees produce? Of course not.
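One lightweight way to encourage that transparency is to attach a short disclosure note to each ad hoc report. The sketch below is only an illustration: the `AdHocDisclosure` class, its field names and the `missing_disclosures` helper are hypothetical and are not part of any BI product.

```python
from dataclasses import dataclass, field


@dataclass
class AdHocDisclosure:
    """Hypothetical transparency note a publisher attaches to an ad hoc report."""
    title: str
    sources: list[str] = field(default_factory=list)            # where the data came from
    lineage: str = ""                                           # how it got from source to report
    quality_steps: list[str] = field(default_factory=list)      # checks the publisher ran
    known_limitations: list[str] = field(default_factory=list)  # potential errors and omissions


def missing_disclosures(note: AdHocDisclosure) -> list[str]:
    """Return the names of the disclosure fields the publisher left empty."""
    required = ("sources", "lineage", "quality_steps", "known_limitations")
    return [name for name in required if not getattr(note, name)]


# Example: a report on a declining product line with an incomplete note.
note = AdHocDisclosure(
    title="Product line margin decline",
    sources=["sales orders extract"],
    lineage="Filtered to one product line and joined to the cost table",
)
print(missing_disclosures(note))  # ['quality_steps', 'known_limitations']
```

A check like this prompts the publisher to fill in the gaps; it does not block publication, which keeps the process informal.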

Design your data access procedures to allow analysts to quickly iterate many designs without requiring formal auditing. The temporary and limited value of this analysis precludes the need. The people using these reports should be aware of the reports’ origins and limitations and knowledgeable in the subject areas.

Ad hoc reports can attain mass adoption. Anticipate this and define an audit process for bringing widespread ad hoc reports to production-level certification without interrupting usage while the audit is underway.
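As a rough sketch of such a promotion path, the Python below models a report that moves from ad hoc, to under audit, to certified. The status names, the `Report` class and the viewer threshold of 50 are assumptions made for illustration; your own criteria for "widespread" will differ.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    AD_HOC = "ad hoc"
    UNDER_AUDIT = "under audit"
    CERTIFIED = "certified"


@dataclass
class Report:
    name: str
    monthly_viewers: int
    status: Status = Status.AD_HOC
    # No status in this sketch unpublishes the report, so usage
    # continues while the audit is underway.


ADOPTION_THRESHOLD = 50  # hypothetical cutoff for "widespread" use


def review_adoption(report: Report, threshold: int = ADOPTION_THRESHOLD) -> Report:
    """Queue a widely used ad hoc report for audit; leave other reports unchanged."""
    if report.status is Status.AD_HOC and report.monthly_viewers >= threshold:
        report.status = Status.UNDER_AUDIT
    return report


def complete_audit(report: Report, passed: bool) -> Report:
    """Certify the report if the audit passed, otherwise return it to ad hoc status."""
    if report.status is not Status.UNDER_AUDIT:
        raise ValueError(f"{report.name} is not under audit")
    report.status = Status.CERTIFIED if passed else Status.AD_HOC
    return report


report = review_adoption(Report(name="Margin decline by product line", monthly_viewers=120))
print(report.status.value)  # under audit
print(complete_audit(report, passed=True).status.value)  # certified
```

The design choice worth noting is that the status is a label on a report that stays published, rather than a gate in front of it.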

Mission-Critical Reporting and Analysis

Your mission-critical data should go through formal audit and approval processes. These processes are time-consuming and require knowledgeable, scarce resources. The added cost is associated with auditing, documentation and routing for sign-off from the appropriate leadership. Reserve this quality assurance step for mission-critical reporting or information with regulatory or legal requirements to audit.

Strike a balance that makes it easy for your team to conduct ad hoc analysis, but do it in a manner that encourages compliance with reasonable safeguards. You can accomplish these goals without sacrificing quality or security.

In the next post, I will provide additional tips for improving governance and security.