This series shows you the various ways you can use Python within Snowflake.

Recently, Snowflake released external access integrations into general availability. This allows Snowflake admins to create secure tunnels for Snowflake to communicate with the outside world and can therefore communicate with external APIs to complete all manner of tasks. For example, external access could be used for any of the following, among many others:

- Send alerts to Teams webhooks so that the alerts can be presented in a Teams channel

- Send alerts to Slack webhooks so that the alerts can be presented in a Slack channel

- Communicate with a Matillion ETL API to trigger jobs

- Communicate with the Matillion DPC API to trigger pipelines (this post)

- Communicate with a Tableau Server/Cloud API to trigger datasource extract refreshes

- Communicate with any other external API for any other reason, the opportunities are limitless!

This specific article focuses on how to communicate with a Matillion DPC API to trigger jobs; however, this series will include the other examples above, as well. With each new article, the entry above will be converted to a link.

How Does External Access in Snowflake Work?

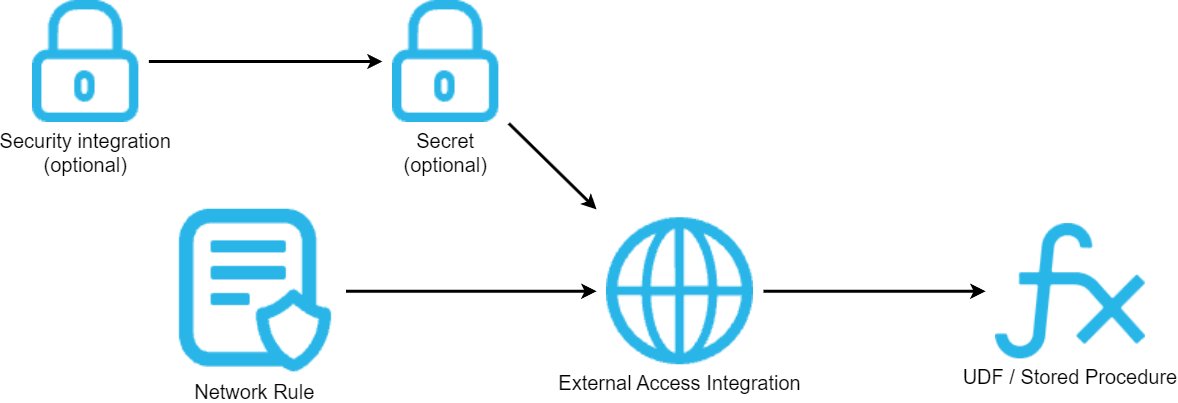

Before we dive into the specific example, let’s cover the architecture involved for external access in Snowflake. There are four main components:

- A network rule that controls where traffic is allowed to travel to

- An external access integration that allows Snowflake objects to leverage the network rule

- An optional security component to facilitate authentication. This usually leverages a Snowflake Secret object that can either securely store information such as a username and password, or make use of a related Security Integration object that facilitates OAuth authentication with an API endpoint.

- A Snowflake object such as a stored procedure or a user defined function (UDF) that leverages the external access integration

This is demonstrated in the following diagram:

How to Configure an Outbound Network Rule

In short, network rules are database objects that store information on a type of network traffic for Snowflake. This traffic can be one of two categories:

- Ingress – Traffic from outside of Snowflake that is coming in to Snowflake

- Egress – Traffic that is leaving Snowflake and travelling outside

This article specifically focuses on the egress category, and how we can leverage this to enable Snowflake to communicate with endpoints outside of Snowflake’s network. Note that in Snowflake’s terminology, the category (ingress/egress) is referred to as the “mode,” and this terminology will be used for the rest of this article.

Example Network Rule for External Access

Here is a simple example for creating an outbound/egress network rule:

create network rule "MY_OUTBOUND_NETWORK_RULE"

type = HOST_PORT -- Always use this value for external access integrations

mode = EGRESS -- Always use this value for external access integrations

value_list = ('?????') -- Populate with host(s) of the target endpoint(s), such as matillion.customer.com, host.online.tableau.com, customer.webhook.office.com, eu1.api.matillion.com, etc

comment = 'Allows traffic to be sent to the host(s) in the value_list'

;

This template can be used to configure the network rule for any external access.

It is important to note that Snowflake stores network rules as database objects, meaning they sit inside schemas in databases. This is different to network policies, which are account-level objects. This is easy to get confused, which is why it has been mentioned here.

Specific Network Rule Example

The following network rule could be leveraged to enable outbound/egress traffic with the Matillion DPC API:

create network rule "EXTERNAL_ACCESS"."NETWORK_RULES"."EGRESS__MATILLION_DPC_API"

type = HOST_PORT

mode = EGRESS

value_list = (

'eu1.api.matillion.com' -- The host for Matillion DPC requests to accounts in the EU1 region

, 'us1.api.matillion.com' -- The host for Matillion DPC requests to accounts in the US1 region

)

comment = 'Allows traffic to be sent to the API for the Matillion Data Productivity Cloud (DPC)'

;

How to Set Up an External Access Integration

In short, external access integrations are account-level objects that act as the bridge between egress network rules and any other Snowflake objects (stored procedures/UDFs).

Here is a simple example for creating an external access integration that leverages an outbound/egress network rule:

create external access integration "MY_EXTERNAL_ACCESS_INTEGRATION"

allowed_network_rules = ("MY_DATABASE"."MY_SCHEMA"."MY_OUTBOUND_NETWORK_RULE")

enabled = FALSE

comment = 'External access integration to support traffic sent to the <endpoint(s) enabled by the network rule(s)>'

;

How to Attach Optional Security Components: OAuth for Matillion DPC API

Depending on your required target, you may have a need for additional security components to authenticate your requests with the destination. For example, the destination may expect authentication via a username/password combination that has been converted into a bearer token, or it may leverage OAuth and expect a combination of client ID and secret ID. Of course, these are just two examples and there are other possibilities for more complex use cases.

In the case of the Matillion DPC API, both a security integration and a secret are required. The security integration will leverage the client ID and secret ID that can be generated by an Admin-level user on the Matillion Hub, and the secret will leverage that security integration to generate an access token.

My personal recommendation is to create your external access integration before creating any additional security components, then apply them using alter commands.

To start, create the external access integration using the network rule that was defined in the specific example earlier in this article. As the integration is still a work in progress, set “enabled” to FALSE.

create external access integration "EAI__MATILLION_DPC_API"

allowed_network_rules = ("EXTERNAL_ACCESS"."NETWORK_RULES"."EGRESS__MATILLION_DPC_API")

enabled = FALSE

comment = 'External access integration to support traffic sent to the API for the Data Productivity Cloud (DPC)'

;

How to Generate a Client ID and Secret ID in Matillion Hub

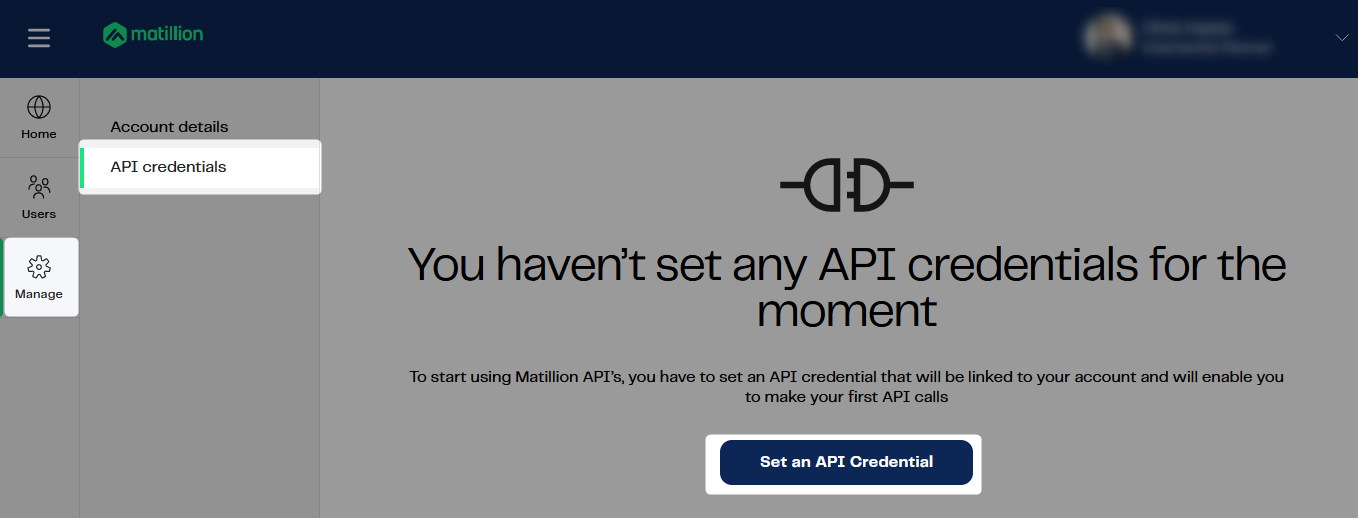

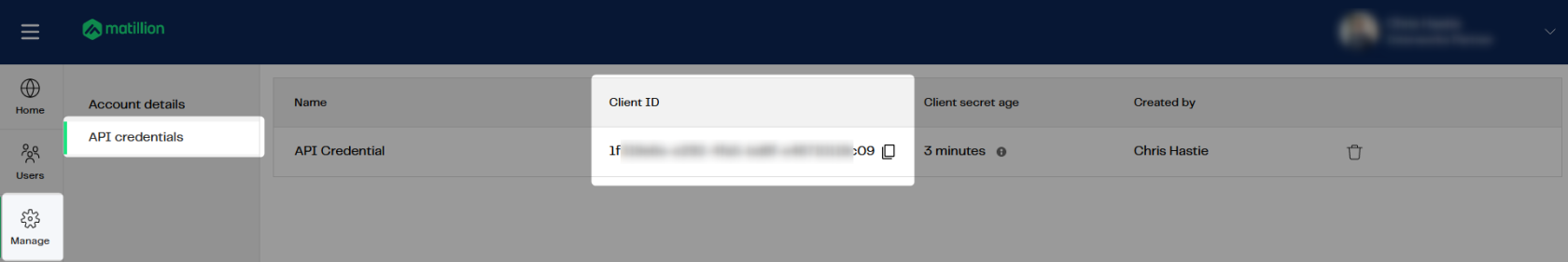

When an Admin-level user navigates the Matillion Hub, they are able to access the “API credentials” pane of the “Manage” area and create an API credential.

The secret ID will only be displayed once. This is the only opportunity to copy it and securely store it elsewhere.

Once created, the client ID can be copied from the same area.

At time of writing, only a single API credential can be created for each Matillion Hub account.

How to Configure a Security Integration for OAuth Access to the Matillion DPC API

Now that the combination of client ID and secret ID has been retrieved, they can be placed into a security integration in Snowflake. Here is an example statement for creating a security integration in Snowflake that is intended for authenticating with the Matillion DPC API:

create security integration "OAUTH__MATILLION_DPC_API"

type = api_authentication

auth_type = oauth2

oauth_client_auth_method = client_secret_post

oauth_client_id = '<REDACTED>' -- Client ID for API credential

oauth_client_secret = '<REDACTED>' -- Secret ID for API credential

oauth_grant = 'CLIENT_CREDENTIALS'

oauth_token_endpoint = 'https://id.core.matillion.com/oauth/dpc/token' -- OAuth token endpoint for Matillion DPC API

oauth_allowed_scopes = ('pipeline-execution') -- Only available scope for Matillion DPC API at time of writing

enabled = TRUE

comment = 'Security integration to facilitate authentication to the API for the Matillion Data Productivity Cloud (DPC)'

;

It should be sufficient to copy this statement exactly, apart from changed the redacted client ID and secret ID values with the actual values for your API credential.

How to Configure a Secret to Leverage an OAuth Security Integration

Since the OAuth security integration has been configured, this step is very simple. The secret will simply act as a bridge between the security integration and the external access integration, and contains very little information itself.

create secret "EXTERNAL_ACCESS"."SECRETS"."CREDS__MATILLION_DPC_API" type = oauth2 api_authentication = "OAUTH__MATILLION_DPC_API" comment = 'OAuth secret to facilitate authentication to the API for the Matillion Data Productivity Cloud (DPC)' ;

Attach the Optional Security Components to the External Access Integration

Now that the security integration and secret have both been configured, attaching them to the external access integration is achieved with an alter statement. This is also a good opportunity to enable the integration.

alter external access integration "EAI__MATILLION_DPC_API"

set allowed_api_authentication_integrations = ("OAUTH__MATILLION_DPC_API")

, allowed_authentication_secrets = ("EXTERNAL_ACCESS"."SECRETS"."CREDS__MATILLION_DPC_API")

, enabled = TRUE

;

How to Trigger Matillion DPC Pipelines in a Stored Procedure

Now that we have discussed and configured the underlying architecture behind our process, we can move on to our true goal, which is to create a stored procedure in Snowflake that can trigger a Matillion DPC pipeline. In fact, we now have the tools we need to query any endpoint in the Matillion DPC API.

The specific endpoint we are interested in for this example is the following:

/v1/projects/{projectId}/pipeline-executions

This endpoint can be leveraged to trigger a pipeline execution, however it requires three important inputs:

- The ID for the project where the pipeline is contained

- The name of the pipeline

- The name of the environment in which the pipeline should be executed

It is also crucial that the pipeline has been published in Designer. We will tackle each of these in turn.

How to Publish a Pipeline

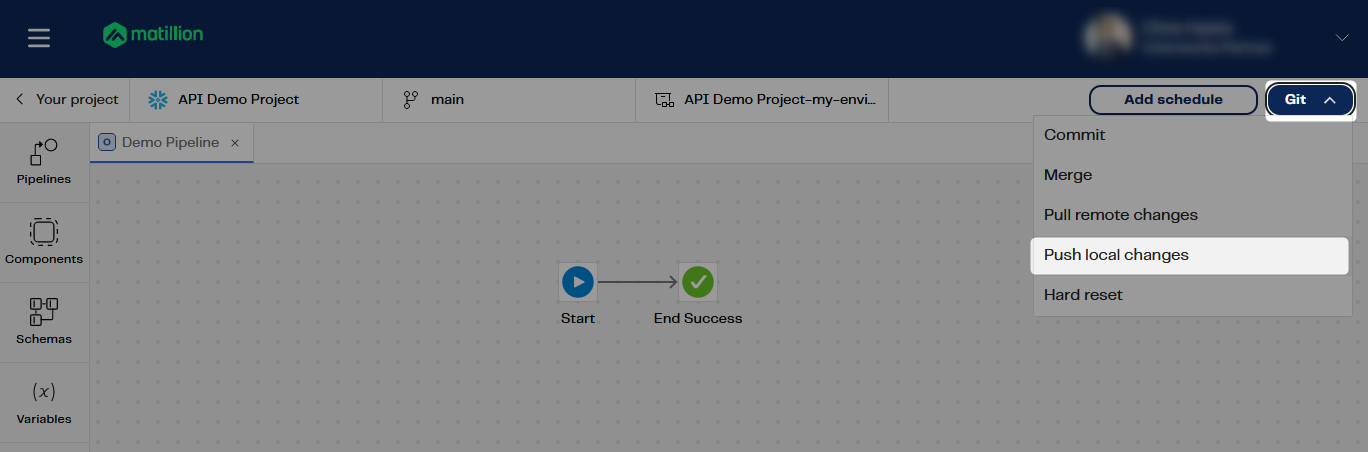

As you may know already, Designer is heavily reliant on an underlying Git integration, with every project existing as its own repository. Pipelines are developed inside of branches, and any local changes must be committed locally and then pushed to the remote before they can be scheduled; or before they can be targeting by an API request.

This is all controlled by the Git dropdown in the upper right corner of the Designer canvas when you are looking at your job:

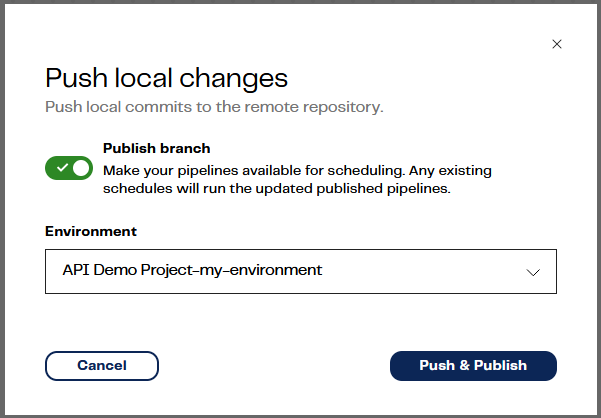

When pushing your changes, be sure to publish the branch!

Matillion will then notify you of the successful push:

Where to Find the Project ID

Finding the project ID is relatively simple, and there are multiple ways to do it. A more complicated way is to use the projects endpoint in the API, though we will use a simpler method. Once you have opened a project in Designer, you will actually see the project ID at the start of your URL just after your account’s host address. Depending on where you are in the UI, this may be after a `/project/ clause, too.

Here are two examples:

https://my-hub-account.projects.eu1.matillion.com/a1bcde2f-34ab-5678-cd90-12345e6f78a9/ https://my-hub-account.projects.eu1.matillion.com/project/a1bcde2f-34ab-5678-cd90-12345e6f78a9/

In both of these examples, the project ID is a1bcde2f-34ab-5678-cd90-12345e6f78a9.

Pipeline Name and Environment

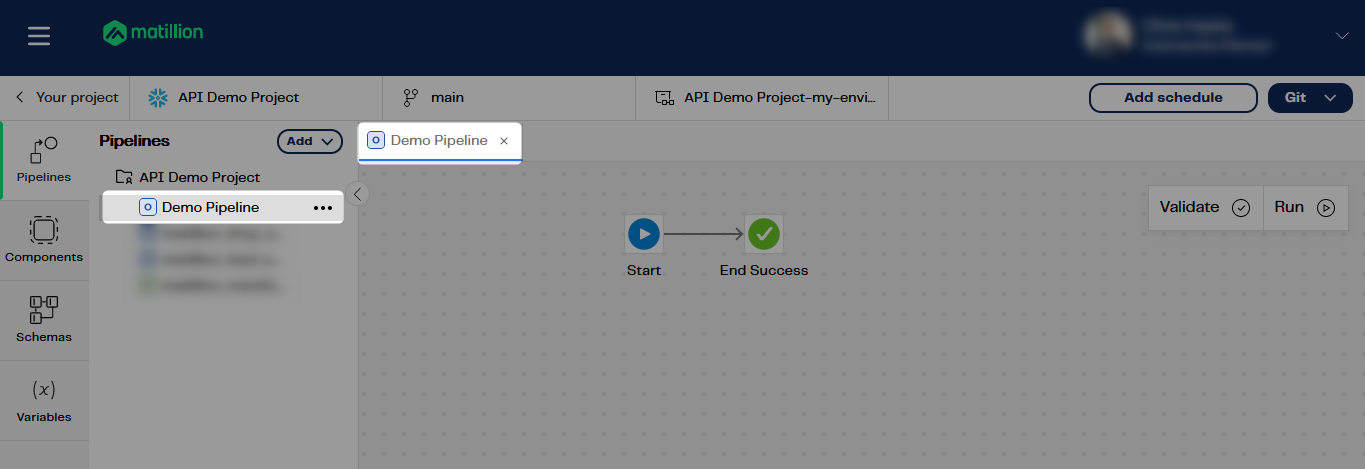

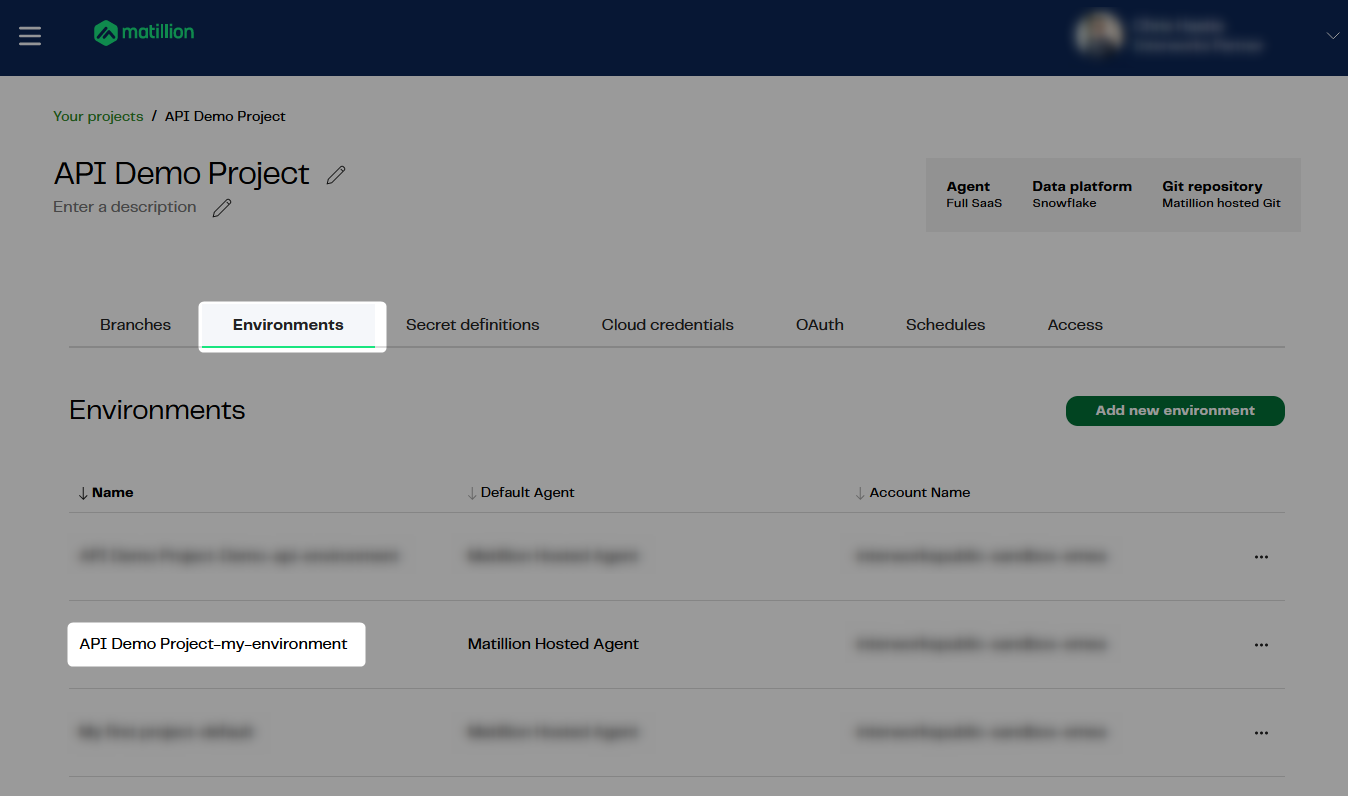

The pipeline name and environment should be simply to find. The name will match what you have called your pipeline, such as “Demo Pipeline” in this example:

Meanwhile, the environment name is whatever you called the environment that you wish to use, such as “API Demo Project-my-environment” in this example. Note that environments are always prepended with the project name:

Creating a Stored Procedure to Trigger a Matillion DPC Pipeline

Finally, we come to the main meat of the article. First, I will provide the full code for the stored procedure. I will then explain some key areas.

This article does not explain Python stored procedures in general. If you wish to know more about these, the following article is recommended: A Definitive Guide to Python Stored Procedures in the Snowflake UI. This is part of our Snowflake with Python series.

The full procedure definition is as follows:

-- Create stored procedure

create or replace procedure "EXTERNAL_ACCESS"."PROCEDURES"."API__MATILLION_DPC__TRIGGER_PIPELINE"(

PROJECT_ID varchar

, PIPELINE_NAME varchar

, ENVIRONMENT_NAME varchar

, CONNECTION_STRING varchar default 'eu1.api.matillion.com/dpc' -- Populate with host endpoint of the target Matillion DPC region, such as eu1.api.matillion.com/dpc or us1.api.matillion.com/dpc

)

copy grants

returns variant

language python

runtime_version = 3.10

handler = 'main'

external_access_integrations = ("EAI__MATILLION_DPC_API")

secrets = ('cred' = "EXTERNAL_ACCESS"."SECRETS"."CREDS__MATILLION_DPC_API")

packages = ('snowflake-snowpark-python', 'requests')

as

$$

## Import module for inbound Snowflake session

from snowflake.snowpark import Session as snowparkSession

## Imports

import _snowflake

import requests

import json

## Define main function

## Put at top of SProc to avoid Snowflake linting bug

## if converted to a Snowpark for Python worksheet

def main(

snowpark_session: snowparkSession

, project_id: str

, pipeline_name: str

, environment_name: str

, connection_string: str

):

### Validate inputs

if project_id is None \

or len(project_id) == 0 \

or pipeline_name is None \

or len(pipeline_name) == 0 \

or environment_name is None \

or len(environment_name) == 0 \

:

return "Missing inputs. Must provide all of project_id, pipeline_name, environment_name"

### Generate access token using OAuth secret

access_token = _snowflake.get_oauth_access_token("cred")

### Generate URL to trigger the pipeline

api_endpoint_url = f"https://{connection_string}/v1/projects/{project_id}/pipeline-executions"

### Populate the payload for the API call

api_payload = {

"pipelineName": pipeline_name

, "environmentName": environment_name

}

### Populate headers for the API call

headers = {

"Content-Type": "application/json"

, "Authorization": f"Bearer {access_token}"

}

### Execute API call to trigger job

response = requests.request("POST", api_endpoint_url, headers=headers, json=api_payload)

try:

if response.status_code == 200 :

return response.json()

else :

return response.json()

except Exception:

return response

$$

;

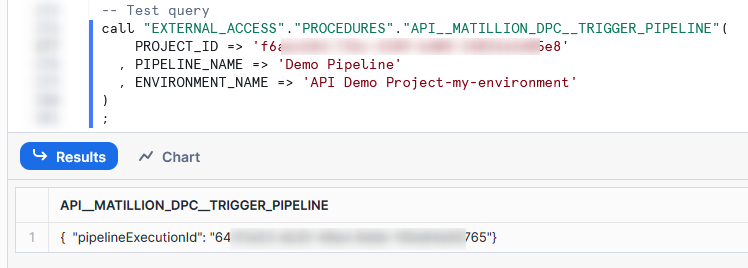

-- Test query

call "EXTERNAL_ACCESS"."PROCEDURES"."API__MATILLION_DPC__TRIGGER_PIPELINE"(

PROJECT_ID => 'a1bcde2f-34ab-5678-cd90-12345e6f78a9'

, PIPELINE_NAME => 'Demo Pipeline'

, ENVIRONMENT_NAME => 'API Demo Project-my-environment'

)

;

The following lines are important here:

- Lines 13 and 14 enable the stored procedure to leverage the external access integration and accompanying secret that facilitate authentication to the Matillion DPC API.

- Lines 23 and 49 are required to leverage the secret to generate an access token for the Matillion DPC API. That access token is then leveraged for authorization as part of the header on line 63.

- Line 52 leverages an input variable for project ID to establish the full API endpoint that the request is sent to.

- Lines 55-58 establish the payload that is sent to the API, consisting of the pipeline name and environment name that have been provided as input variables.

- Line 67 sends the POST request itself to the Matillion DPC API, combining all of the elements established so far.

- Lines 69-75 return the response from the request with some minor error handling.

- Lines 81-86 can be used to test the process.

Testing the Stored Procedure

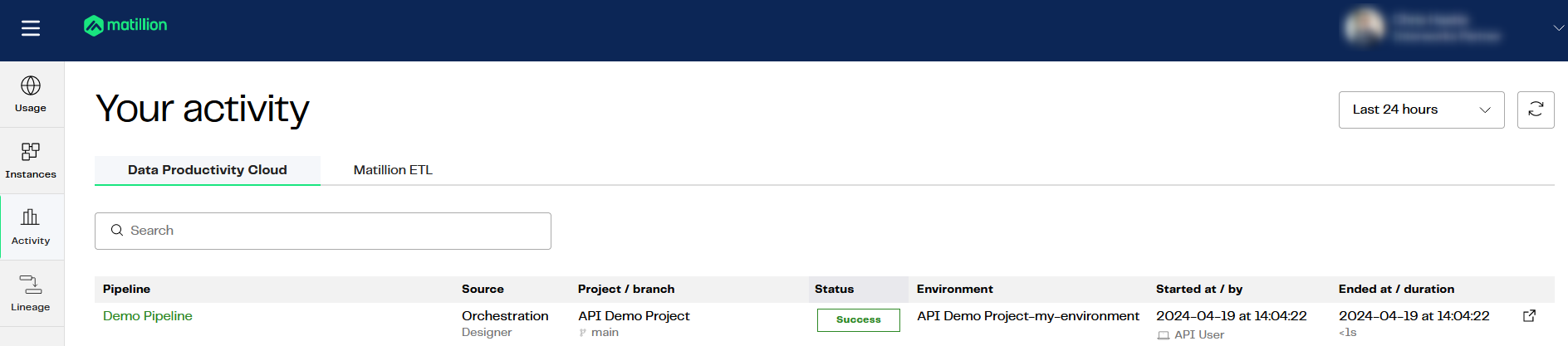

A successful test of the stored procedure should yield a response that includes a pipelineExecutionId, which would be the ID for the execution that has just been triggered.

Once triggered, you should be able to monitor the execution in the task history area of the Matillion Hub. You can also track the status by sending requests to the relevant API endpoint.

Wrap Up

Hopefully the information in this article provides you with all of the tools you need to establish your own connections from Snowflake to the Matillion DPC API. This stored procedure can now be made available to other users using Snowflake’s Role-Based Access Control (RBAC) and could be useful in multiple scenarios, including:

- Triggering a pipeline as part of a scheduled task

- Triggering a pipeline as part of a wider set of stored procedures

- Triggering a pipeline when a stream detects new data from a snowpipe