Recently, Snowflake released external access integrations into general availability. This allows Snowflake admins to create secure tunnels for Snowflake to communicate with the outside world, and can therefore communicate with external APIs to complete all manner of tasks. For example, external access could be used for any of the following, among many others:

- Send alerts to Teams webhooks so that the alerts can be presented in a Teams channel

- Send alerts to Slack webhooks so that the alerts can be presented in a Slack channel

- Communicate with a Matillion ETL API to trigger jobs

- Communicate with the Matillion DPC API to trigger pipelines

- Communicate with a Tableau Server/Cloud API to trigger datasource extract refreshes

- Retrieve data from an API, for example the Snowflake Status API (this post)

- Communicate with any other external API for any other reason, the opportunities are limitless!

This specific article focuses on how to retrieve the latest status summary from the Snowflake Status API as a table.

How Does External Access in Snowflake Work?

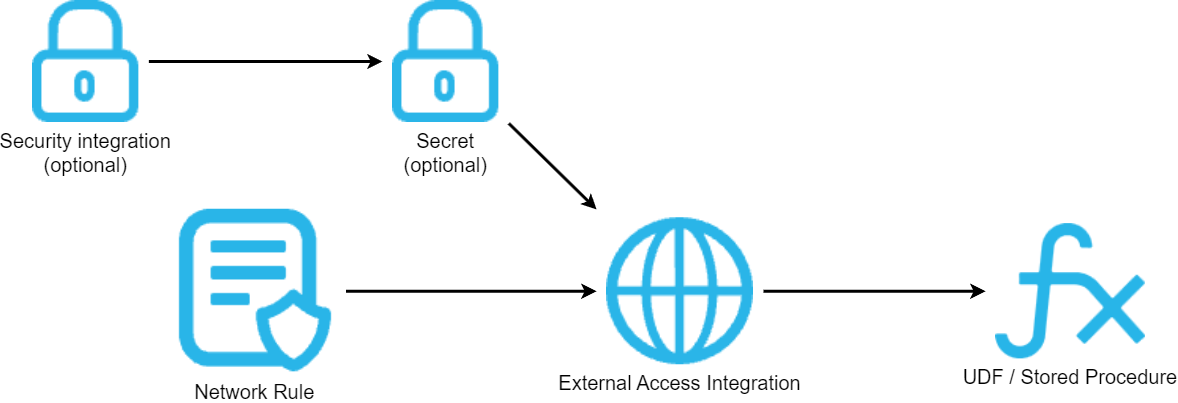

Before we dive into the specific example, let’s cover the architecture involved for external access in Snowflake. There are four main components:

- A network rule that controls where traffic is allowed to travel to.

- An external access integration that allows Snowflake objects to leverage the network rule.

- An optional security component to facilitate authentication. This usually leverages a Snowflake Secret object that can either securely store information such as a username and password, or make use of a related Security Integration object that facilitates OAuth authentication with an API endpoint.

- A Snowflake object such as a stored procedure or a user defined function (UDF) that leverages the external access integration.

This is demonstrated in the following diagram:

How to Configure an Outbound Network Rule

In short, network rules are database objects that store information on a type of network traffic for Snowflake. This traffic can be one of two categories:

- Ingress – Traffic from outside of Snowflake that is coming in to Snowflake

- Egress – Traffic that is leaving Snowflake and travelling outside

This article specifically focuses on the egress category, and how we can leverage this to enable Snowflake to communicate with endpoints outside of Snowflake’s network. Note that in Snowflake’s terminology, the category (ingress/egress) is referred to as the “mode,” and this terminology will be used for the rest of this article.

Example Network Rule for External Access

Here is a simple example for creating an outbound/egress network rule:

create network rule "MY_OUTBOUND_NETWORK_RULE"

type = HOST_PORT -- Always use this value for external access integrations

mode = EGRESS -- Always use this value for external access integrations

value_list = ('?????') -- Populate with host(s) of the target endpoint(s), such as matillion.customer.com, host.online.tableau.com, customer.webhook.office.com, eu1.api.matillion.com, etc

comment = 'Allows traffic to be sent to the host(s) in the value_list'

;

This template can be used to configure the network rule for any external access.

It is important to note that Snowflake stores network rules as database objects, meaning they sit inside schemas in databases. This is different to network policies, which are account-level objects. This is easy to get confused, which is why it has been mentioned here.

Specific Network Rule Example

The following network rule could be leveraged to enable outbound/egress traffic with the Snowflake Status API:

create network rule "EXTERNAL_ACCESS"."NETWORK_RULES"."EGRESS__SNOWFLAKE_STATUS_API"

type = HOST_PORT

mode = EGRESS

value_list = ('status.snowflake.com') -- The host for the Snowflake Status API

comment = 'Allows traffic to be sent to the Snowflake Status API'

;

How to Set Up an External Access Integration

In short, external access integrations are account-level objects that act as the bridge between egress network rules and any other Snowflake objects (stored procedures/UDFs).

Here is a simple example for creating an external access integration that leverages an outbound/egress network rule:

create external access integration "MY_EXTERNAL_ACCESS_INTEGRATION"

allowed_network_rules = ("MY_DATABASE"."MY_SCHEMA"."MY_OUTBOUND_NETWORK_RULE")

enabled = FALSE

comment = 'External access integration to support traffic sent to the <endpoint(s) enabled by the network rule(s)>'

;

Specific External Access Integration Example

In many cases, authentication is needed to interact with an API. This is demonstrated in many of our other external access articles — for example, leveraging an OAuth integration when connecting to Matillion DPC, or leveraging standard username and password authentication when connecting to Matillion ETL.

In this specific example however, no authentication is needed. The Snowflake Status API is open. We can therefore create the external access integration directly, with nothing else to configure:

create external access integration "EAI__SNOWFLAKE_STATUS_API"

allowed_network_rules = ("EXTERNAL_ACCESS"."NETWORK_RULES"."EGRESS__SNOWFLAKE_STATUS_API")

enabled = TRUE

comment = 'External access integration to support traffic sent to the Snowflake Status API'

;

How to Retrieve the Current Status Summary from the Snowflake Status API

Now that we have discussed and configured the underlying architecture behind our process, we can move on to our true goal, which is to create a User-Defined Table Function (UDTF) in Snowflake that can query the Snowflake Status API and return the current status summary.

A Quick Overview of the Snowflake Status API

Since we are only using this API for an example, we will not spend much time discussing the API itself. The most important information is that we will be querying this endpoint.

This endpoint returns the current status for each core component in Snowflake, and is similar to viewing the user-friendly equivalent page. If you like, open that endpoint now to see an example of the output. If Snowflake’s status is currently healthy, the output will be fairly dull. If Snowflake is encountering issues then the output gets more interesting.

Our purpose is to return the current status of every module within every cloud provider region for Snowflake, which is all contained in the “components” key.

Here is an example output, where we have only included the response for the region “AWS – US West (Oregon)”:

{

"page": {

"id": "94s7z8vpy1n8",

"name": "Snowflake",

"url": "https://status.snowflake.com",

"time_zone": "America/Los_Angeles",

"updated_at": "2024-06-11T22:26:37.674-07:00"

},

"components": [

{

"id": "l8zmwsrmltjd",

"name": "AWS - US West (Oregon)",

"status": "operational",

"created_at": "2019-01-02T15:16:31.219-08:00",

"updated_at": "2019-05-03T13:56:49.012-07:00",

"position": 1,

"description": null,

"showcase": false,

"start_date": null,

"group_id": null,

"page_id": "94s7z8vpy1n8",

"group": true,

"only_show_if_degraded": false,

"components": [

"pkrzt59vb1cm",

"b96r20d6p5g7",

"xjvzhg4llmqt",

"6jy082tyx85d"

]

},

{

"id": "pkrzt59vb1cm",

"name": "Snowflake Data Warehouse (Database)",

"status": "operational",

"created_at": "2019-01-15T09:18:30.171-08:00",

"updated_at": "2024-05-28T21:46:53.905-07:00",

"position": 3,

"description": null,

"showcase": false,

"start_date": null,

"group_id": "l8zmwsrmltjd",

"page_id": "94s7z8vpy1n8",

"group": false,

"only_show_if_degraded": false

},

{

"id": "b96r20d6p5g7",

"name": "Snowpipe (Data Ingestion)",

"status": "operational",

"created_at": "2019-01-30T00:40:49.592-08:00",

"updated_at": "2024-02-20T14:16:33.244-08:00",

"position": 4,

"description": null,

"showcase": false,

"start_date": null,

"group_id": "l8zmwsrmltjd",

"page_id": "94s7z8vpy1n8",

"group": false,

"only_show_if_degraded": false

},

{

"id": "xjvzhg4llmqt",

"name": "Replication",

"status": "operational",

"created_at": "2020-04-28T08:52:25.259-07:00",

"updated_at": "2024-02-20T14:16:33.256-08:00",

"position": 5,

"description": null,

"showcase": false,

"start_date": null,

"group_id": "l8zmwsrmltjd",

"page_id": "94s7z8vpy1n8",

"group": false,

"only_show_if_degraded": false

},

{

"id": "6jy082tyx85d",

"name": "Snowsight",

"status": "operational",

"created_at": "2021-08-06T09:12:25.424-07:00",

"updated_at": "2024-04-30T07:31:05.031-07:00",

"position": 6,

"description": null,

"showcase": false,

"start_date": "2021-08-06",

"group_id": "l8zmwsrmltjd",

"page_id": "94s7z8vpy1n8",

"group": false,

"only_show_if_degraded": false

}

]

}

A Quick Note on User-Defined Table Functions (UDTFs) in Snowflake

We are about to create a UDTF to return the current Snowflake status as a table. This article assumes familiarity with UDTFs, however the example itself is relatively simple anyway. If you wish for more information on UDTFs then the following articles are recommended:

- An Introduction to Python UDTFs in Snowflake

- A Definitive Guide to Creating Python UDTFs Directly within the Snowflake User Interface

Creating a UDTF to Return the Current Snowflake Status

Finally, we come to the main meat of the article. First, I will provide the full code for the UDTF. I will then explain some key areas.

This article does not explain Python stored procedures in general. If you wish to know more about these, the following articles are recommended: An Introduction to Python UDTFs in Snowflake, A Definitive Guide to Creating Python UDTFs Directly within the Snowflake User Interface. These are both part of our Snowflake with Python series.

The full UDTF definition is as follows:

-- Create UDTF

create or replace function "EXTERNAL_ACCESS"."FUNCTIONS"."API__SNOWFLAKE_STATUS__COMPONENTS"()

copy grants

returns table(

"ID" string

, "CLOUD_PROVIDER_REGION" string

, "COMPONENT" string

, "STATUS" string

, "CREATED_AT" timestamp_ntz

, "UPDATED_AT" timestamp_ntz

, "DESCRIPTION" string

, "POSITION_IN_GROUP" int

, "DATA_QUERIED_AT" timestamp_ntz

)

language python

runtime_version = 3.10

handler = 'main'

external_access_integrations = ("EAI__SNOWFLAKE_STATUS_API")

packages = ('requests', 'pandas')

as

$$

## Imports

import requests

import pandas as pd

## Define main class

class main:

### Define process method that acts

### on each individual input row.

### This method must be provided in all UDTFs

### but is not needed for our example as we

### are doing nothing at a row level.

def process(self) :

pass

### Define end_partition method that acts

### on full partition after rows are processed

def end_partition(self) :

### Retrieve the components from the endpoint

snowflake_components_json = retrieve_snowflake_summary_as_json()

### Process JSON into a Pandas DataFrame

df_snowflake_components = process_raw_components_data(snowflake_components_json)

### Output the result as tuples to match Snowflake UDTF requirements

return list(df_snowflake_components.itertuples(index=False, name=None))

## Define function to send the request to the summary endpoint

def retrieve_snowflake_summary_as_json():

### Define the endpoint URL

endpoint_url = r"https://status.snowflake.com/api/v2/summary.json"

### Populate headers for the API call

headers = {

"Content-Type": "application/json"

}

### Execute API call

response = requests.request("GET", endpoint_url, headers=headers)

### Only return something if the request is successful

if response.status_code == 200 :

return response.json()

else :

raise Exception(f"Error. Response code: {str(response.status_code)}")

## Define function to process JSON into a Pandas DataFrame

def process_raw_components_data(snowflake_components_json: dict):

### Load components into dataframe

df_all = pd.json_normalize(snowflake_components_json["components"])

df_all["data_queried_at"] = snowflake_components_json["page"]["updated_at"]

### Convert datetime fields.

### 1. Convert string to datetime

### 2. Remove timezone localization (output is now without timezone)

df_all["created_at"] = \

pd.to_datetime(df_all["created_at"], utc=True) \

.dt.tz_localize(None)

df_all["updated_at"] = \

pd.to_datetime(df_all["updated_at"], utc=True) \

.dt.tz_localize(None)

df_all["data_queried_at"] = \

pd.to_datetime(df_all["data_queried_at"], utc=True) \

.dt.tz_localize(None)

### Build a formatted dataframe for all cloud provider region groups

df_groups = df_all[df_all["group"] == True].copy()

df_groups["component"] = "All"

df_groups["cloud_provider_region"] = df_groups["name"]

df_groups["position_in_group"] = 1

### Determine group-level output

df_output_groups = df_groups[["id", "cloud_provider_region", "component", "status", "created_at", "updated_at", "description", "position_in_group", "data_queried_at"]]

### Build a formatted dataframe for all components

df_components = df_all[df_all["group"] == False].copy()

df_components["component"] = df_components["name"]

df_components["position_in_group"] = df_components["position"]

### Attach the cloud provider region to the component

df_components_merged = pd.merge(

left = df_components

, right = df_groups

, left_on = ["group_id"]

, right_on = ["id"]

, suffixes = ["", "_from_group"]

)

### Determine component-level output

df_output_components = df_components_merged[["id", "cloud_provider_region", "component", "status", "created_at", "updated_at", "description", "position_in_group", "data_queried_at"]]

### Build final output dataframe, combining both outputs

df_output = pd.concat([df_output_groups, df_output_components], axis=0)

df_output.sort_values(["cloud_provider_region", "position_in_group"], inplace=True)

return df_output

$$

;

-- Test query

select *

from table("EXTERNAL_ACCESS"."FUNCTIONS"."API__SNOWFLAKE_STATUS__COMPONENTS"())

;

The following lines are important here:

- Line 18 enables the UDTF to leverage the external access integration.

- Line 19 ensures that the relevant Python packages are available, which are then imported on lines 24-25 and leveraged throughout the function. Fortunately, these are all simple to ingest as they are already included in Anaconda’s Snowflake channel, which Snowflake leverages when executing Python.

- Lines 51-69 define a function that sends a request to the Snowflake Status API and returns the response JSON. This is then leveraged on line 43.

- Lines 71-123 convert the response JSON from the Snowflake Status API into a Pandas DataFrame, performing some light data engineering along the way. This is leveraged on line 46.

- Line 49 returns the final result as a list of tuples, as per Snowflake Python UDTF requirements. The output aligns with the columns define on lines 5-13.

- Lines 129-131 can be used to test the process.

This UDTF provides the main structure to achieve our goals, but only uses some basic error handling. Depending on your purposes, it may be worth expanding this when deploying it to your own environment.

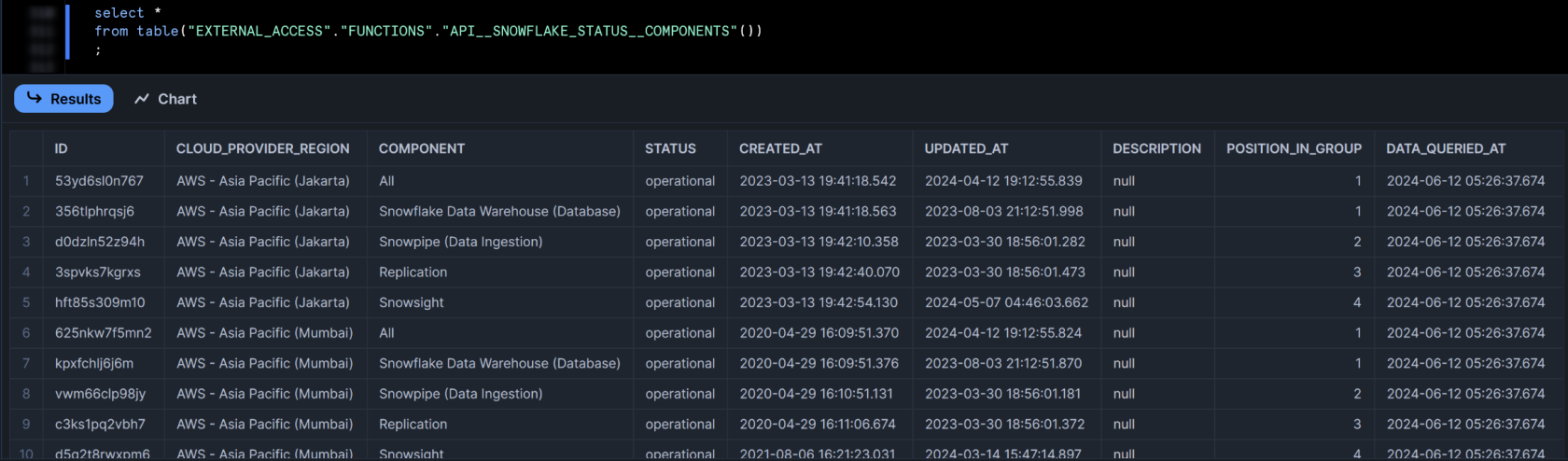

Testing the UDTF

A successful test of the UDTF should yield a table detailing every Snowflake. Also, quick bonus in this screenshot that we can see Snowsight’s new Dark Mode!

Wrap Up

Hopefully the information in this article provides you with all of the tools you need to build your own UDTFs that retrieve data from external APIs. Naturally, we’ve deliberately chosen a simpler example for this article and have therefore not tackled challenges such as paging, schema changes or authentication, however those are all challenges better faced by a standard Python/APIs article rather than an article about enabling external access from Snowflake.