After attending an in-depth technical training in Dallas, Texas, I thought it could be beneficial to give some examples of how Snowflake is unique in a series of blog posts moving forward. This week, I’m talking about JSON (although Snowflake’s functionality displayed in the article can be applied to most semi-structured data).

JSON (JavaScript Object Notation) is a minimal, text-based format for structuring data. JSON can be generated almost anywhere. Common sources are REST API calls and smart devices responding to an event or request. This creates a communication stream between a server and a web application, and it generally makes more sense to spit out a new JSON record than to store the values in a relational database.

JSON is classified as semi-structured data, meaning exactly what the name implies: It’s structured … kind of. JSON is composed of two primary parts, keys and values. These JSON files can quickly become a mess of nested objects and arrays that are unreadable to the human eye. If you are unfamiliar with JSON arrays, I highly recommend reading this documentation from Squarespace that does a great job explaining them. Here’s a very basic example of a JSON object that contains an array:

    {
        "employee": {
            "name": "Holt Calder",
            "title": "Data Engineering Intern",
            "company": "InterWorks, Inc.",
            "hobbies": ["Golf", "Skateboarding", "Running", "Travel"]
        }
    }

Here we have an employee attribute “table,” for lack of a better word. Inside it are the keys name, title, company and hobbies, along with a value for each key. Additionally, the hobbies key holds an array of values for all my hobbies (I’m still searching for a strong skateboarding dataset for the next blog).
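To make the key/value idea concrete, here is a minimal Python sketch that parses the employee example with the standard `json` module. It is only an illustration of how objects and arrays nest, and the variable names (`raw`, `doc`, `employee`, `hobbies`) are my own choices.

```python
import json

# The employee example as a complete JSON document.
raw = """
{
  "employee": {
    "name": "Holt Calder",
    "title": "Data Engineering Intern",
    "company": "InterWorks, Inc.",
    "hobbies": ["Golf", "Skateboarding", "Running", "Travel"]
  }
}
"""

doc = json.loads(raw)
employee = doc["employee"]      # a JSON object becomes a Python dict
hobbies = employee["hobbies"]   # a JSON array becomes a Python list

print(employee["name"])
print(len(hobbies), hobbies[1])
```

Each key maps to exactly one value, but that value can itself be another object or an array, which is where the nesting (and the mess) comes from.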

Now that we know a little bit about JSON, let’s investigate how Snowflake allows us to work with this semi-structured data in a familiar way using basic SQL syntax. I’m going to show you how to cast JSON to standard SQL data types, build a structured view, connect to Tableau and dominate the world with our newly transformed JSON data.

Using Weather Data as an Example

For this example, I worked with Python and the darksky.net API to gather some weather data. At InterWorks, a lot of the work we do is on-site, so traveling can quickly become a big part of the job. I grabbed data from a few places I have been fortunate enough to work in, as well as Stillwater, Oklahoma, where I’m based. I figured this could be fun data to look at, so I’m not as disappointed running in the 100° heat after work. Also, I was genuinely curious what the weather in San Francisco is this week, and just checking my weather app would make for a boring blog post.

After my collection process, I had gathered weather data for San Francisco, Toronto, New York City and Stillwater. Here is a look at the key/value structure of my data:

    {
        "currently": {
            "apparentTemperature": 70.97,
            "cloudCover": 0.39,
            "dewPoint": 45.56,
            "humidity": 0.4,
            "icon": "partly-cloudy-night",
            "ozone": 310.66,
            "precipIntensity": 0,
            "precipProbability": 0,
            "pressure": 1005.44,
            "summary": "Partly Cloudy",
            "temperature": 70.97,
            "time": 1528919028,
            "uvIndex": 0,
            "visibility": 10,
            "windBearing": 141,
            "windGust": 6.28,
            "windSpeed": 1.82
        },
        "latitude": 43.6532,
        "longitude": 79.3832
    }
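As a quick sanity check on that structure, the sketch below reads a trimmed copy of the record in Python. Two assumptions to flag: I kept only a handful of keys for brevity, and I am treating `time` as a Unix timestamp in seconds.

```python
import json
from datetime import datetime, timezone

# A trimmed copy of the record above; most keys are omitted for brevity.
raw = """
{
  "currently": {
    "summary": "Partly Cloudy",
    "temperature": 70.97,
    "humidity": 0.4,
    "time": 1528919028
  },
  "latitude": 43.6532,
  "longitude": 79.3832
}
"""

record = json.loads(raw)
current = record["currently"]          # the nested "currently" object
temperature = current["temperature"]   # one level down from the top

# Assumption: "time" is seconds since the Unix epoch, read here as UTC.
observed_at = datetime.fromtimestamp(current["time"], tz=timezone.utc)

print(record["latitude"], temperature, observed_at.isoformat())
```

The point to notice is that latitude and longitude sit at the top level, while everything about the current conditions lives one level down under `currently`. That nesting is what the paths in the Snowflake view later in this post have to follow.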

Now, let’s see how we can pull this into Snowflake and start building.

JSON Import

To start working with JSON in Snowflake, the first step I tend to take is creating an External Snowflake Stage. Due to Snowflake being completely cloud-based, importing data into tables requires a slightly different process, which is where the stage comes into play. The stage is almost a reference point to the S3 bucket our data lives in, which allows Snowflake to run standard COPY INTO commands. After I uploaded my JSON into an S3 bucket, I created my stage like this:

    CREATE OR REPLACE STAGE WeatherStage
        URL = 's3://*Name of S3 Bucket*/'
        CREDENTIALS = (AWS_KEY_ID = '*Your Key ID*' AWS_SECRET_KEY = '*Your Secret Key*');

Snowflake will also give you the ability to create the stage with their GUI. I tend to prefer the SQL code for simplicity. I have run into a few situations where a GUI-created stage disappears randomly. At least with the code, if that happens, you can execute it again.

Now that my stage is ready, I am going to go ahead and build a table to store the JSON data. Snowflake has a Variant data type, which can hold semi-structured data such as JSON. This Variant data type means that I can load the JSON file for each city as a row in my table. Because each file becomes one row, a row count on the table returns the number of files that were imported from my external stage. In the table, you can also include additional columns that will store data from structured data sources alongside your JSON. For this example, I kept it simple and made a table named “WeatherJSON” with a single Variant column (jsondata, the column name used in the view later in this post) and loaded data from my WeatherStage with this command:

COPY INTO WeatherJSON FROM @WeatherStage FILE_FORMAT=(TYPE='json' STRIP_OUTER_ARRAY=true);

This command references my stage using an “@” as an indicator, specifies the file format and sets STRIP_OUTER_ARRAY, which tells Snowflake to remove the outer square brackets when a file’s top-level value is a JSON array, so that each element of the array loads as its own row. Whether you need this option will vary depending on your source, but for my weather files, it was required to work with the data.
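To picture what STRIP_OUTER_ARRAY changes, here is a small local model in Python. This is not Snowflake code, just a sketch of the idea that a file whose top-level value is an array can load either as one row or as one row per element. The `rows_from_file` helper and the `city` key are hypothetical names I made up for the illustration.

```python
import json

def rows_from_file(text, strip_outer_array):
    """Local model of how one JSON file maps to table rows (not Snowflake code)."""
    parsed = json.loads(text)
    if strip_outer_array and isinstance(parsed, list):
        # Outer [ ] removed: each array element becomes its own row.
        return parsed
    # Otherwise the whole document is loaded as a single row.
    return [parsed]

# Hypothetical file contents: one wrapped in an array, one a bare object.
array_file = '[{"city": "Toronto"}, {"city": "Stillwater"}]'
object_file = '{"city": "Toronto"}'

kept = rows_from_file(array_file, strip_outer_array=False)
stripped = rows_from_file(array_file, strip_outer_array=True)
single = rows_from_file(object_file, strip_outer_array=True)

print(len(kept), len(stripped), len(single))
```

In this model, the option only matters when the file starts with an array. A file that is already a single object produces one row either way.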

JSON It

Now that we have our data loaded into our Variant Snowflake table, we can start playing with the fun stuff and turn this gibberish into a relational view that we can run queries against. My first piece of advice: If you don’t know every value your JSON file collects off the top of your head, the snippet below is going to keep you from pulling your hair out … especially if you are working with a JSON file that contains thousands of attributes, like the kind a Weather API provides you:

    -- Replace Field_Name and Table_Name with your Variant column and table.
    SELECT *
    FROM (SELECT Field_Name AS json_field FROM Table_Name) v,
         TABLE(FLATTEN(input => v.json_field, recursive => true)) f
    WHERE f.key IS NOT NULL;

This query returns one row per key, including the path you need to extract that key’s value. This matters because, given JSON’s nested structure, if you reference the path incorrectly when building your view, you will receive NULL values.
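If you want a feel for what a recursive flatten produces before running it in Snowflake, the following Python sketch walks a nested record and lists a path for every key. It is a rough analogy under my own assumptions, not a reimplementation of FLATTEN: `flatten_paths` is a hypothetical helper, and unlike the query above it also lists array elements rather than filtering them out.

```python
import json

def flatten_paths(value, prefix=""):
    """Yield (path, value) for every key and array element in nested data."""
    if isinstance(value, dict):
        for key, child in value.items():
            path = f"{prefix}.{key}" if prefix else key
            yield path, child
            yield from flatten_paths(child, path)
    elif isinstance(value, list):
        for index, child in enumerate(value):
            path = f"{prefix}[{index}]"
            yield path, child
            yield from flatten_paths(child, path)

# A trimmed weather record; only a few keys are kept for brevity.
record = json.loads(
    '{"currently": {"summary": "Partly Cloudy", "temperature": 70.97},'
    ' "latitude": 43.6532}'
)

paths = [path for path, _ in flatten_paths(record)]
for path in paths:
    print(path)
```

The dotted paths it prints, such as `currently.temperature`, have the same shape as the paths used after the colon when building the view.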

Now that you have a better idea of what is included in your data and how to access it, we can begin building a view that casts these JSON values to their respective data types. The standard format for this is as follows, where Title is the name the column will have in the view:

VariantFieldName:Key::DataType Title

Given the example of weather data, let’s say that I want to create a sample view from my JSON files. I will select the columns I want and cast them to a data type in my view like this:

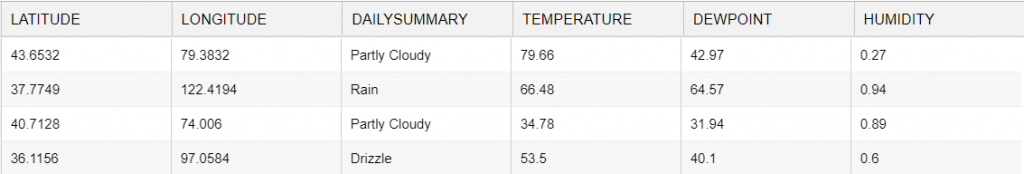

    CREATE OR REPLACE VIEW vw_weather AS (
        SELECT
            jsondata:latitude::float              Latitude,
            jsondata:longitude::float             Longitude,
            jsondata:currently.summary::string    DailySummary,
            jsondata:currently.temperature::float Temperature,
            jsondata:currently.dewPoint::float    DewPoint,
            jsondata:currently.humidity::float    Humidity
        FROM weatherjson
    );

Casting each column from JSON to a standard SQL data type couldn’t be easier. Using the JSON path, I can build a view and begin querying against it as a standard relational table in a single step.
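For readers who think better in code than in SQL, here is a rough Python analogy of the path-and-cast pattern. It is only a sketch: the `extract` helper is hypothetical, and returning `None` for a missing key is my way of imitating the NULL you get from a wrong path. Keep in mind that the key names in a path are case-sensitive, so `dewpoint` is not the same key as `dewPoint`.

```python
import json

def extract(record, path, cast):
    """Follow a dotted path, then cast; return None if any step is missing."""
    current = record
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None  # plays the role of NULL for a path that does not exist
        current = current[key]
    return cast(current)

# A trimmed weather record; only a few keys are kept for brevity.
record = json.loads(
    '{"currently": {"summary": "Partly Cloudy", "temperature": 70.97,'
    ' "dewPoint": 45.56}, "latitude": 43.6532}'
)

row = {
    "Latitude": extract(record, "latitude", float),
    "DailySummary": extract(record, "currently.summary", str),
    "Temperature": extract(record, "currently.temperature", float),
    "DewPoint": extract(record, "currently.dewPoint", float),
    # Wrong case in the key name: the path does not match anything.
    "DewPointWrongCase": extract(record, "currently.dewpoint", float),
}

print(row)
```

If a column in your view comes back entirely NULL, a misspelled or wrongly cased key in the path is the first thing I would check.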

Some Final Thoughts

This is incredible technology. The standard protocols for working with JSON up to this point have included building new tables and transforming the data with complex SQL statements, sometimes using entirely different tools from your database. All of this effort in the past was required to even get the data to a usable state.

This ability to deliver a better solution in a way that uses basic SQL syntax to work with JSON is really fun. Partner this with any one of the additional features Snowflake offers and you will quickly begin to see that the value proposition is phenomenal for people who want their database to fulfill one simple task that is difficult to perform well: “Let me ask business questions.”

If you enjoyed this blog post, I invite you to follow the series! I am going to be putting new content about Snowflake out every one to two weeks. These posts will cover topics from concurrency testing all the way down to connecting Snowflake to an IDE of your choice. If you have any more questions about working with JSON in Snowflake, please feel free to reach out to me directly.