The year 2020 produced a mass migration of business processes, enterprise software and internal workloads to AWS on a scale that was previously unseen. In the world of data, organizations relied on the flexibility of the cloud to run infrastructure, authentication and analytic workloads at scale across a newly distributed workforce. Sharing information became paramount to the success of the business, and one thing we learned is this: we can do almost anything, anywhere, and AWS can make it easier to do so. We saw a large movement of analytic workloads to the cloud, so let’s break down the kinds of migrations we saw last year and why you should care.

Lifecycle Migration

Organizations everywhere rely on technology to run the business, and that pattern began with the on-premises data center. If you look at a physical data center, there are a handful of activities that must occur just to keep the lights on. Running workloads to support analytics in an on-prem environment is beholden to these requirements, and operating a data warehouse, Tableau Server and an ETL platform becomes more complex as a result.

In an on-premises data warehouse, the servers, operating system and software all have to be maintained to provide optimal performance. Physical hardware also becomes obsolete. Just as the original iPhone doesn’t offer the flexibility and performance of its latest successor, a 10-year-old server can produce operational headaches and, in many situations, has simply reached the end of its life. In 2020, we saw organizations approach their standard hardware refresh cycle completely differently, opting for a cloud-first strategy to streamline and simplify day-to-day operations.

In the cloud, the traditional hardware refresh cycle is handled for you, and in many cases, the use of managed services can even take software and operating system maintenance off your plate. We see this frequently with organizations shifting their transactional databases into managed services like Amazon RDS. Tools built on AWS like Snowflake, Matillion and Fivetran let vendors or partners own software and hardware maintenance, enabling organizations to focus on innovating in their data practice instead of fighting to just keep the lights on.

The Physical Bottleneck

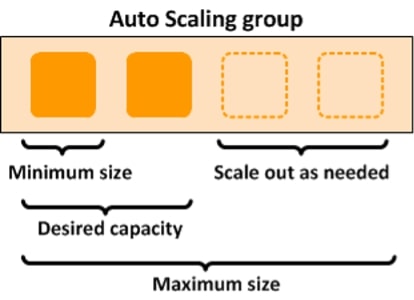

Above: AWS Auto Scaling

Migrations to the cloud often come with a different goal in mind: scale. Delivering consistent performance to an organization through spiky traffic is one of the hard problems to solve outside of the public cloud. We often refer to this kind of scaling issue as the “Monday morning traffic bump”. Tools like Snowflake have improved the way organizations scale for spiky analytic workloads, but without the power of the cloud behind these services, scaling would look very different.

The cloud offers an almost infinite amount of compute power available at a moment’s notice – imagine scaling a data platform across dozens of business units with nothing more than a mouse click. AWS offers the ability to scale elastically, just in time for your peak demand, to avoid frustration and confusion around why the new data warehouse doesn’t work.
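To make the idea of elastic scaling concrete, here is a minimal Python sketch of how a platform might size itself for the Monday morning bump. It is a simplified illustration and not AWS Auto Scaling's actual algorithm: the function name `nodes_needed`, the hourly query counts and the assumed capacity of 16 concurrent queries per node are all hypothetical.

```python
import math


def nodes_needed(concurrent_queries, queries_per_node, min_nodes=1, max_nodes=10):
    """Return how many nodes cover the demand, clamped to a min/max range.

    Simplified illustration only; real auto scaling policies also account
    for cooldowns, warm-up time and metric smoothing.
    """
    if queries_per_node <= 0:
        raise ValueError("queries_per_node must be positive")
    wanted = math.ceil(concurrent_queries / queries_per_node)
    return max(min_nodes, min(max_nodes, wanted))


# Hypothetical concurrent query counts across a Monday morning.
monday_morning = {"07:00": 5, "08:00": 40, "09:00": 120, "10:00": 70, "12:00": 15}

# Assumed capacity: each node comfortably serves 16 concurrent queries.
plan = {
    hour: nodes_needed(queries, queries_per_node=16)
    for hour, queries in monday_morning.items()
}

for hour, nodes in plan.items():
    print(f"{hour}: {nodes} node(s)")
```

In this sketch the platform grows for the 09:00 peak and shrinks again by midday, so you pay for the larger footprint only while the demand is there.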

In the world of Tableau Server, this scalability allows us to use AWS to increase the size of a server with a mouse click instead of a hardware purchase. The flexibility to provide the right resources to scale with adoption of an application allows us to try things on smaller instance sizes to confirm they work before committing big dollars to projects that haven’t been proven yet.
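As a rough illustration of starting small and resizing as adoption grows, the Python sketch below picks the smallest instance size that satisfies a given CPU and memory requirement. The helper `smallest_fit` and the requirement figures are hypothetical, and the EC2 M5 sizes are listed only as examples; check the current AWS and Tableau documentation before sizing a real deployment.

```python
from typing import NamedTuple, Optional, Sequence


class InstanceSize(NamedTuple):
    name: str
    vcpus: int
    memory_gib: int


# A few EC2 M5 sizes, used here only as an example catalog.
M5_SIZES = [
    InstanceSize("m5.xlarge", 4, 16),
    InstanceSize("m5.2xlarge", 8, 32),
    InstanceSize("m5.4xlarge", 16, 64),
    InstanceSize("m5.8xlarge", 32, 128),
]


def smallest_fit(
    vcpus_needed: int,
    memory_gib_needed: int,
    sizes: Sequence[InstanceSize] = M5_SIZES,
) -> Optional[InstanceSize]:
    """Return the smallest size meeting both requirements, or None if none does."""
    for size in sorted(sizes, key=lambda s: (s.vcpus, s.memory_gib)):
        if size.vcpus >= vcpus_needed and size.memory_gib >= memory_gib_needed:
            return size
    return None


# Hypothetical requirements: a small pilot, then a wider rollout.
pilot = smallest_fit(vcpus_needed=4, memory_gib_needed=16)
rollout = smallest_fit(vcpus_needed=12, memory_gib_needed=48)
print(pilot.name, "->", rollout.name)
```

The point of the sketch is the workflow: prove the project on the pilot size, then move to the larger size when adoption justifies it, rather than buying for the eventual peak on day one.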

Innovation in the Cloud

The cloud also unlocks potential opportunities that would previously be extremely difficult in an on-premises scenario. Processing and visualizing terabytes of data in seconds is a daily requirement in the modern enterprise. To do this, oftentimes folks make the decision to place their central data platform on Snowflake in AWS, and from there, they are tasked with getting their data in and out with data pipelines.

Tools like Matillion have emerged that simplify this process, complementing the cloud investment organizations make on their reporting engine by simplifying the process of building and running data pipelines to populate the data platform. One common theme we see is that organizations are increasingly reliant on tools that are generating data in the cloud. Whether it’s Salesforce, advertising platforms like Google Ads or project management software like Jira, these tools are generating vast amounts of data about how an organization is operating, and the idea of bringing that data on-prem and then shipping it back to the cloud is silly.

The end of the road for data should never be just a lake or warehouse (or lakehouse?) but needs to drive better decision-making for leaders and operators within your organization. Our recommendation for a best-of-breed tool to quickly turn data into insights is Tableau. Throughout this article, I have referenced Tableau Server in the cloud. Tableau Server is the distribution mechanism for sharing curated datasets and dashboards securely and at scale.

An AWS deployment of Tableau Server means you can benefit from integrations Tableau has developed so that some of its core functionality runs more efficiently on AWS services such as RDS, EFS and KMS. Tableau Server on AWS allows you to take advantage of a more performant and scalable architecture than what is available with on-premises deployments.

Extending your corporate identity provider to the cloud reduces friction for end users as they access your growing suite of cloud-based tools. Okta is our choice for a best-in-class cloud identity provider, offering both a bridge from on-premises Active Directory to cloud identity with Universal Directory and Okta OneApp for authenticating external users like customers and partners.

Tying It All Together

Creating consistency in the underlying infrastructure is really the beauty of AWS. Each tool and workload migration scenario I described is not proprietary to AWS, and migrating workloads doesn’t pigeonhole you into an ecosystem you won’t be able to escape from. AWS provides the flexibility to power the best tool for each specific task in your reporting stack on consistent hardware in a way that allows you to select fully managed tools. In the event a fully managed tool isn’t available, you can complement other pieces of your stack with building blocks to craft the perfect environment for your organization’s needs.

If you’re interested in how your organization can start positioning your workloads in the cloud, please feel free to reach out to our team of experts. We would love to chat all things Tableau, Snowflake, Matillion or Fivetran to figure out what makes the most sense for your evolving analytics requirements.