Recently, Snowflake released external access integrations into general availability. This allows Snowflake admins to create secure tunnels for Snowflake to communicate with the outside world, and can therefore communicate with external APIs to complete all manner of tasks. For example – external access could be used for any of the following, among many others:

- Send alerts to Teams webhooks so that the alerts can be presented in a Teams channel.

- Send alerts to Slack webhooks so that the alerts can be presented in a Slack channel.

- Communicate with a Matillion ETL API to trigger jobs.

- Communicate with the Matillion DPC API to trigger pipelines.

- Communicate with a Tableau Server/Cloud API to trigger datasource extract refreshes (this post).

- Communicate with any other external API for any other reason, the opportunities are limitless!

This specific article focuses on how to communicate with the REST API for a Tableau Server/Cloud deployment to refresh extracts in a published datasource/workbook, however this series will include the other examples above as well. With each new article, the entry above will be converted to a link.

How Does External Access in Snowflake Work?

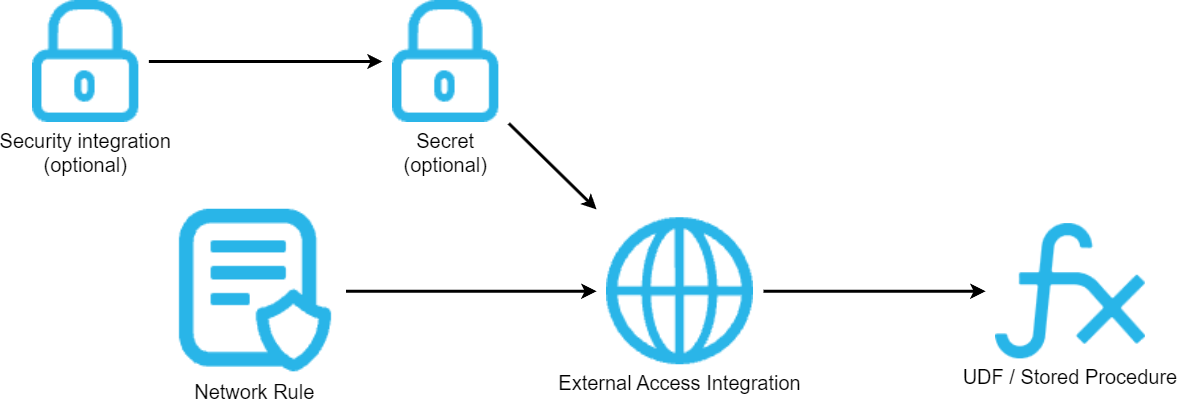

Before we dive into the specific example, let’s cover the architecture involved for external access in Snowflake. There are four main components:

- A network rule that controls where traffic is allowed to travel to..

- An external access integration that allows Snowflake objects to leverage the network rule

- An optional security component to facilitate authentication. This usually leverages a Snowflake Secret object that can either securely store information such as a username and password, or make use of a related Security Integration object that facilitates OAuth authentication with an API endpoint.

- A Snowflake object such as a stored procedure or a user defined function (UDF) that leverages the external access integration.

This is demonstrated in the following diagram:

How to Configure an Outbound Network Rule

In short, network rules are database objects that store information on a type of network traffic for Snowflake. This traffic can be one of two categories:

- Ingress – Traffic from outside of Snowflake that is coming in to Snowflake

- Egress – Traffic that is leaving Snowflake and travelling outside

This article specifically focuses on the egress category, and how we can leverage this to enable Snowflake to communicate with endpoints outside of Snowflake’s network. Note that in Snowflake’s terminology, the category (ingress/egress) is referred to as the “mode,” and this terminology will be used for the rest of this article.

Example Network Rule for External Access

Here is a simple example for creating an outbound/egress network rule:

create network rule "MY_OUTBOUND_NETWORK_RULE"

type = HOST_PORT -- Always use this value for external access integrations

mode = EGRESS -- Always use this value for external access integrations

value_list = ('?????') -- Populate with host(s) of the target endpoint(s), such as matillion.customer.com, host.online.tableau.com, customer.webhook.office.com, eu1.api.matillion.com, etc

comment = 'Allows traffic to be sent to the host(s) in the value_list'

;

It is important to note that Snowflake stores network rules as database objects, meaning they sit inside schemas in databases. This is different to network policies, which are account-level objects. This is easy to get confused, which is why it has been mentioned here.

Specific Network Rule Example

The following network rule could be leveraged to enable outbound/egress traffic with Tableau Server/Cloud API:

create network rule "EXTERNAL_ACCESS"."NETWORK_RULES"."EGRESS__TABLEAU_API"

type = HOST_PORT

mode = EGRESS

value_list = ('?????') -- Populate with host of the target Tableau Server/Cloud instance, such as tableau.company.com for a server or 10ax.online.tableau.com for Tableau Cloud

comment = 'Allows traffic to be sent to the API for the Tableau Server deployment'

;

How To Set up an External Access Integration

In short, external access integrations are account-level objects that act as the bridge between egress network rules and any other Snowflake objects (stored procedures/UDFs).

Here is a simple example for creating an external access integration that leverages an outbound/egress network rule:

create external access integration "MY_EXTERNAL_ACCESS_INTEGRATION"

allowed_network_rules = ("MY_DATABASE"."MY_SCHEMA"."MY_OUTBOUND_NETWORK_RULE")

enabled = FALSE

comment = 'External access integration to support traffic sent to the <endpoint(s) enabled by the network rule(s)>'

;

How To Attach Optional Security Components – Secrets for a Tableau API

Depending on your required target, you may have a need for additional security components to authenticate your requests with the destination. For example, the destination may expect authentication via a username/password combination that has been converted into a bearer token, or it may leverage OAuth and expect a combination of client ID and secret ID. Of course, these are just two examples and there are other possibilities for more complex use cases.

In the case of a Tableau API, a peronal access token (PAT) is the simplest way to authenticate. To support this, two secrets are required in Snowflake, one to store the name of the peronal access token and one to store the value. This is required as PAT values take a form that is not accepted by a standard password-type secret in Snowflake, and thus a pair of generic-string-type secrets are used instead.

My personal recommendation is to create your external access integration before creating any additional security components, then apply them using alter commands.

To start, create the external access integration, using the network rule that was defined in the specific example earlier in this article. As the integration is still a work in progress, set “enabled” to FALSE.

create external access integration "EAI__TABLEAU_API"

allowed_network_rules = ("EXTERNAL_ACCESS"."NETWORK_RULES"."EGRESS__TABLEAU_API")

enabled = FALSE

comment = 'External access integration to support traffic sent to the API for the Tableau Server deployment'

;

Required Permissions in Tableau Server/Cloud

Before creating the personal access token (PAT) as detailed in the next step, a service principal user should be created in Tableau Server/Cloud with the appropriate access to any target published workbooks/datasources. Specifically, for any user to be able to refresh a data extract in a published workbook/datasource in Tableau Server/Cloud, that user must have one of the following levels of access:

- Ownership of the published workbook/datasource itself.

- Project Leader access for the project that contains the published workbook/datasource.

- Site Administrator access, which grants full control over the entire Tableau Server/Cloud site.

How To Create a Personal Access Token (PAT) in Tableau Server/Cloud

Creating a PAT in Tableau Server/Cloud can be achieved in a handful of clicks:

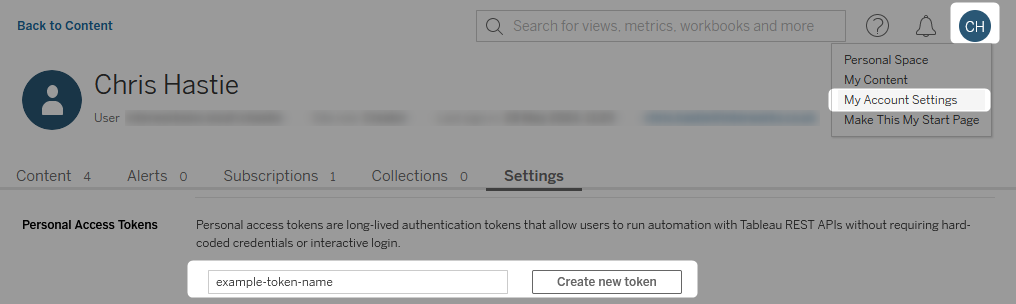

- After logging in to your Tableau Server/Cloud site, select your profile icon in the upper right corner and select “My Account Settings.”

- Scroll down to the “Personal Access Tokens” area, enter a name for your new token and select “Create new token:”

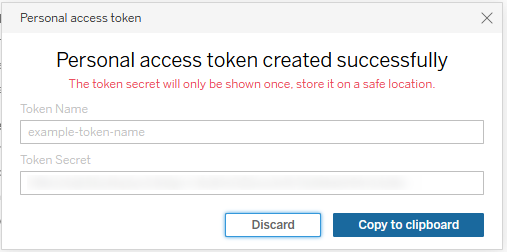

- A pop-up will appear allowing you to copy the new access token to your clipboard. This is only available once, so be sure to leverage the value straight away, either by inserting it directly into the destination by creating the secret in Snowflake, or by noting it down temporarily in another secure location whilst you work:

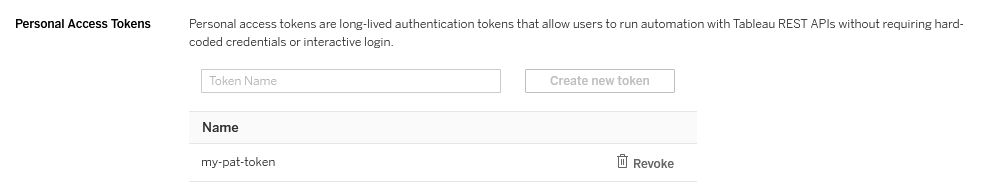

- When complete, your token name should then be listed in the “Personal Access Tokens” area. This can be revoked if required:

How to Configure Secrets To Contain a Generic Strings

Now that the personal access token (PAT) has been created, we can place the credentials inside secrets in Snowflake.

-- Secret for token name create secret "EXTERNAL_ACCESS"."SECRETS"."CREDS__TABLEAU_API__ACCESS_TOKEN_NAME" type = GENERIC_STRING secret_string = 'my-pat-token' comment = 'Name of the Personal Access Token (PAT) for the Tableau Server API' ; -- Secret for token value create secret "EXTERNAL_ACCESS"."SECRETS"."CREDS__TABLEAU_API__ACCESS_TOKEN_VALUE" type = GENERIC_STRING secret_string = '?????' -- Enter the value of the token here comment = 'Personal Access Token (PAT) for the Tableau Server API' ;

Attach the Optional Security Components to the External Access Integration

Now that the secrets have been configured, attaching them to the external access integration is achieved with an alter statement. This is also a good opportunity to enable the integration.

alter external access integration "EAI__TABLEAU_API"

set allowed_authentication_secrets = (

"EXTERNAL_ACCESS"."SECRETS"."CREDS__TABLEAU_API__ACCESS_TOKEN_NAME"

, "EXTERNAL_ACCESS"."SECRETS"."CREDS__TABLEAU_API__ACCESS_TOKEN_VALUE"

)

, enabled = TRUE

;

How To Trigger a Resource Extract Refresh in Tableau Server/Cloud

Now that we have discussed and configured the underlying architecture behind our process, we can move on to our true goal, which is to create a stored procedure in Snowflake that can automatically trigger an extract refresh for a published workbook/datasource in Tableau Server/Cloud.

Creating a Mapping Table for Resources and Their Backend IDs

Whilst this step is not necessary and you could develop the process without it, leveraging a mapping table can make the process much simpler. The idea here is to create a table that maps combinations of resource type (workbook or datasource), site ID, project name and resource name to backend resource IDs. That way, if you have resources that you wish to refresh under different scenarios, you can have a single stored procedure that determines the target resource based on a set of inputs. The benefit of the mapping table specifically is that the stored procedure can then convert the inputs into a backend resource ID, removing the effort required for the end user to retrieve the resource ID each time.

Here is some example code for creating such a mapping table:

Populating the Mapping Table

Populating the mapping table is relatively simple once you know the process, but can be confusing at first. The main component we need is the resource ID, which is stored in Tableau’s backend and not exposed in any of the friendly parts of the user interface. Instead, the resource must be obtained from the backend.

The backend ID for a resource can change for a number of reasons, so it is vital to keep this table updated.

The following options are available for identifying the backend ID for a resource:

- Leverage the GraphiQL interface to directly retrieve the resource based on an ID that is visible from the URL in the UI.

- This is the option I would recommend for smaller/simpler scenarios where it is not difficult to maintain the table.

- Leverage the REST API, either directly or through a library such as the tableauserverclient for Python, to execute a series of queries to identify the resource.

- I would not recommend this option as you may struggle with duplicates multiple resources exist with the same name in different projects, especially if the projects are children that also have the same name but sit inside different parent projects.

- Query the backend postgreSQL repository directly to identify the IDs.

- Not an option for Tableau Cloud as the postgreSQL repository is not exposed.

- I would not recommend this option as it is more complex than step option (1).

- Develop an ELT process to replicate the backend postgreSQL repository into Snowflake regularly, then remove the mapping table and modify the process to leverage the actual backend tables.

- Not an option for Tableau Cloud as the postgreSQL repository is not exposed.

- This is the option I would recommend for larger Tableau Server scenarios as you can ensure you are always using the most recent ID for each resource. It requires more development time for initial setup, but will then automatically update as needed without further manual intervention.

- Develop an ELT process to regularly query the GraphiQL backend regularly.

- This is similar to option (4) but can work on both Tableau Server and Tableau Cloud.

- This is the option I would recommend for larger Tableau Cloud scenarios.

To keep things simple for this article, only the first option is covered. Fortunately, it is a quick and simple one to use.

Enabling Metadata Services for Tableau Server

Don’t worry, you do not need to have purchased Tableau’s Data Management add-on for this to work, as that add-on enables the frontend support for the metadata services. The backend is already there behind the scenes.

If you are using Tableau Cloud then this step can be skipped as the metadata services will already be enabled.

For Tableau Server, you will need one of your administrators to access the backend of the Tableau Server and execute this command to enable the services:

tsm maintenance metadata-services enable

This will require a restart of your Tableau Server.

Retrieve the Backend ID for a Published Workbook or Datasource Using the GraphiQL Interface

To retrieve the backend ID for a published workbook/datasource, start by navigating to it in the Tableau Server/Cloud user interface and looking at the URL.

Depending on if it is a workbook or a datasource, it should be in one of the following formats:

https://<server_url>/#/site/<site_id>/workbooks/<vizportal_url_id>/views https://<server_url>/#/site/<site_id>/datasources/<vizportal_url_id>/askData

For example, the URL could be the one of the following:

https://tableau.company.com/#/site/MySite/workbooks/12345/views https://tableau.company.com/#/site/MySite/datasources/6789/askData

The important detail we are looking for here is that “vizportal_url_id” near the end of the URL. This is the unique identifier for the resource in Tableau’s frontend.

The next step is to use this to retrieve the backend ID by leveraging the metadata services through the GraphiQL interface. This interface can be accessed using the following URL:

https://<instance_url>/metadata/graphiql/

For example, this URL could be the following:

https://tableau.company.com/metadata/graphiql/

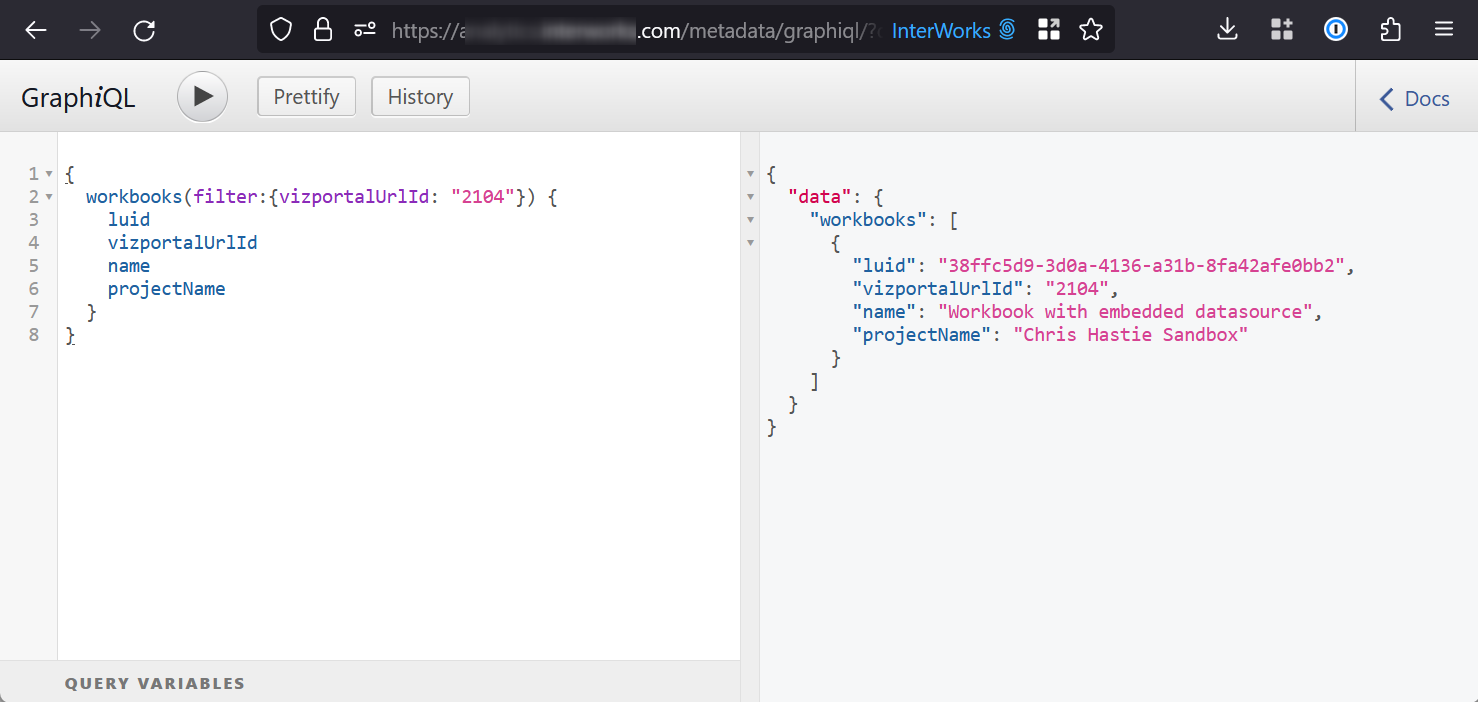

Once you are within this area, the following query can be used to retrieve the backend ID, referred to as the “luid,” for a workbook:

{

workbooks(filter:{vizportalUrlId: "<viz_portal_url_id>"}) {

luid

vizportalUrlId

name

projectName

}

}

Similarly, the following query can be used to retrieve the backend ID, referred to as the “luid”, for a datasource:

{

publishedDatasources(filter:{vizportalUrlId: "<viz_portal_url_id>"}) {

luid

vizportalUrlId

name

projectName

}

}

The following screenshot demonstrates an example of this for a workbook:

From this output, the “luid” is the backend ID that is required when populating mapping table. This is the unique ID for the resource that is leveraged by the Tableau Server/Cloud API.

Creating a Stored Procedure To Trigger a Resource Refresh

Finally, we come to the main meat of the article. First, I will provide the full code for the stored procedure. I will then explain some key areas.

This article does not explain Python stored procedures in general. If you wish to know more about these, the following article is recommended: A Definitive Guide to Python Stored Procedures in the Snowflake UI. This is part of our Snowflake with Python series.

The full procedure definition is as follows:

-- Create stored procedure

create or replace procedure "EXTERNAL_ACCESS"."PROCEDURES"."API__TABLEAU__REFRESH_RESOURCE"(

RESOURCE_TYPE varchar

, RESOURCE_NAME varchar

, PROJECT_NAME varchar

, SITE_ID varchar

, CONNECTION_STRING varchar default '?????' -- Populate with host of the target Tableau Server/Cloud instance, such as tableau.company.com for a server or 10ax.online.tableau.com for Tableau Cloud

)

copy grants

returns varchar

language python

runtime_version = 3.10

handler = 'main'

external_access_integrations = ("EAI__TABLEAU_API")

secrets = (

'pat_name' = "EXTERNAL_ACCESS"."SECRETS"."CREDS__TABLEAU_API__ACCESS_TOKEN_NAME"

, 'pat_value' = "EXTERNAL_ACCESS"."SECRETS"."CREDS__TABLEAU_API__ACCESS_TOKEN_VALUE"

)

packages = ('snowflake-snowpark-python', 'tableauserverclient')

as

$$

## Import module for inbound Snowflake session

from snowflake.snowpark import Session as snowparkSession

from snowflake.snowpark.functions import col, lit

## Imports

import _snowflake

import tableauserverclient as TSC

## Define main function

## Put at top of SProc to avoid Snowflake linting bug

## if converted to a Snowpark for Python worksheet

def main(

snowpark_session: snowparkSession

, resource_type: str

, resource_name: str

, project_name: str

, site_id: str

, connection_string: str

):

### Validate inputs

if resource_type is None \

or len(resource_type) == 0 \

or resource_type not in ["workbook", "datasource"] \

or resource_name is None \

or len(resource_name) == 0 \

or project_name is None \

or len(project_name) == 0 \

or site_id is None \

or len(site_id) == 0 \

:

return "Missing inputs. Must provide all of resource_type, resource_name, project_name, site_id. Also, resource_type must be either 'workbook' or 'datasource'"

### Retrieve the resource id

resource_id = retrieve_resource_id(

snowpark_session = snowpark_session

, resource_type = resource_type

, resource_name = resource_name

, project_name = project_name

, site_id = site_id

)

if resource_id is None:

return "Resource not in mapping table"

### Trigger the refresh of the resource

try:

trigger_resource_refresh(

resource_type = resource_type

, resource_id = resource_id

, site_id = site_id

, connection_string = connection_string

)

return "Resource refresh complete"

except Exception as e:

return f"Error triggering resource refresh:\n\n{str(e)}"

### Define the function to retrieve the resource ID

def retrieve_resource_id(

snowpark_session: snowparkSession

, resource_type: str

, resource_name: str

, project_name: str

, site_id: str

):

### Query the RESOURCES table, filtered by other inputs

sf_df_resource_id = snowpark_session.table('"EXTERNAL_ACCESS"."MAPPING_TABLES"."API__TABLEAU__RESOURCES"') \

.filter(

(col('"RESOURCE_TYPE"') == lit(resource_type))

& (col('"RESOURCE_NAME"') == lit(resource_name))

& (col('"PROJECT_NAME"') == lit(project_name))

& (col('"SITE_ID"') == lit(site_id))

) \

.select(col('"RESOURCE_ID"')) \

.collect()

### If a value is found, return it.

### Otherwise, return None

if sf_df_resource_id is not None \

and len(sf_df_resource_id) > 0 \

:

resource_id = sf_df_resource_id[0]["RESOURCE_ID"]

else:

resource_id = None

return resource_id

### Define the function to trigger the refresh of a resource

def trigger_resource_refresh(

resource_type: str

, resource_id: str

, site_id: str

, connection_string: str

):

### Retrieve authentication object

access_token_name = _snowflake.get_generic_secret_string("pat_name")

access_token_value = _snowflake.get_generic_secret_string("pat_value")

tableau_auth = TSC.PersonalAccessTokenAuth(

access_token_name

, access_token_value

, site_id = site_id

)

### Retrieve Tableau server object

tableau_server_url = f"https://{connection_string}"

tableau_server = TSC.Server(tableau_server_url, use_server_version=True)

### Execute the refresh statement

with tableau_server.auth.sign_in(tableau_auth):

if resource_type == "workbook":

# Get the workbook by its Id to make sure it exists

resource = tableau_server.workbooks.get_by_id(resource_id)

# trigger the refresh, you'll get a job id back which can be used to poll for when the refresh is done

job_id = tableau_server.workbooks.refresh(resource)

else:

# Get the datasource by its Id to make sure it exists

resource = tableau_server.datasources.get_by_id(resource_id)

# trigger the refresh, you'll get a job id back which can be used to poll for when the refresh is done

job_id = tableau_server.datasources.refresh(resource)

# `wait_for_job` will throw if the job isn't executed successfully

job = tableau_server.jobs.wait_for_job(job_id)

return

$$

;

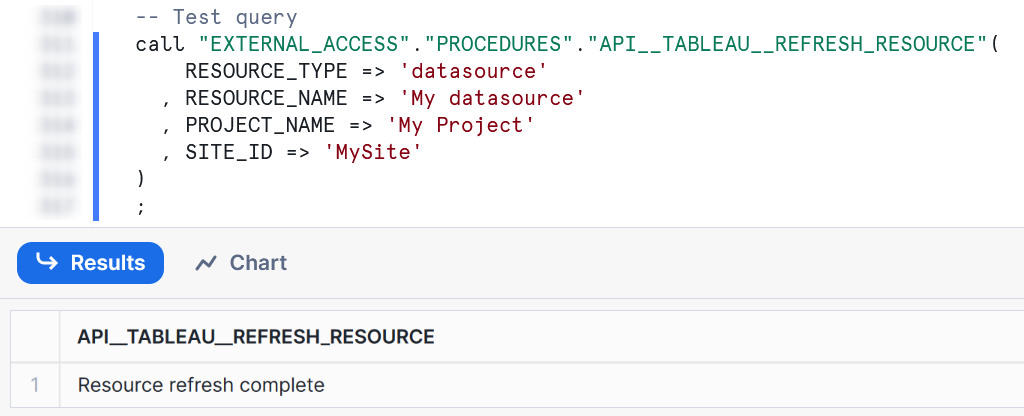

-- Test query

call "EXTERNAL_ACCESS"."PROCEDURES"."API__TABLEAU__REFRESH_RESOURCE"(

RESOURCE_TYPE => 'datasource'

, RESOURCE_NAME => 'My datasource'

, PROJECT_NAME => 'My Project'

, SITE_ID => 'MySite'

)

;

-- Another test query

call "EXTERNAL_ACCESS"."PROCEDURES"."API__TABLEAU__REFRESH_RESOURCE"(

RESOURCE_TYPE => 'workbook'

, RESOURCE_NAME => 'My workbook with embedded datasource(s)'

, PROJECT_NAME => 'My Project'

, SITE_ID => 'MySite'

)

;

The following lines are important here:

- Lines 14-18 enable the stored procedure to leverage the external access integration and accompanying secrets that facilitate authentication to Tableau.

- Line 19 ensures that the “tableauserverclient” Python package is available, which is then imported on line 29 and leveraged throughout the procedure. Fortunately, this is simple to ingest as it is already included in Anaconda’s Snowflake channel, which Snowflake leverages when executing Python.

- Lines 120-126 leverage the secrets to generate an authentication object for “tableauserverclient.” That authentication object is then leveraged on line 133 to authenticate to the Tableau Server/Cloud.

- Lines 81-109 define a function that leverages Snowpark for Python to query the mapping table and retrieve the backend resource ID. This is leveraged on lines 57-63.

- Lines 112-150 define a function triggers the resource refresh

- Lines 156-161 can be used to test the process for a datasource

- Lines 165-170 can be used to test the process for a workbook

This stored procedure provides the main structure to achieve our goals, but only uses some basic error handling. Depending on your purposes, it may be worth expanding this when deploying it to your own environment.

Testing the Stored Procedure

A successful test of the stored procedure should yield a simple “Resource refresh complete” response, which has been configured within the stored procedure:

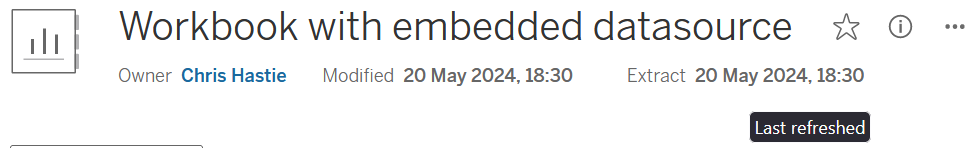

Once executed, you should see the last refresh timestamp update for the extract in Tableau Server/Cloud:

Wrap Up

Hopefully the information in this article provides you with all of the tools you need to programatically trigger datasource extract refreshes in Tableau. This stored procedure can now be made available to other users using Snowflake’s Role-Based Access Control (RBAC) and could be useful in multiple scenarios, including:

- Refreshing extracts at the end of scheduled pipelines

- Refreshing extracts as the result of a Snowflake Alert

- Refreshing extracts as part of a process that executes when new data is detected in a stream