SHP Files in Tableau

Shape files (.shp) allow us to encode geospatial information like countries, continents or more specific custom regions specific to our use cases. These work similarly to dot-to-dot puzzles. One file stores a series of shapes, each with a series of points (or vertices) featuring a coordinate. The order of the vertices allows the shape to be interpreted by your mapping tool of choice: Tableau for instance.

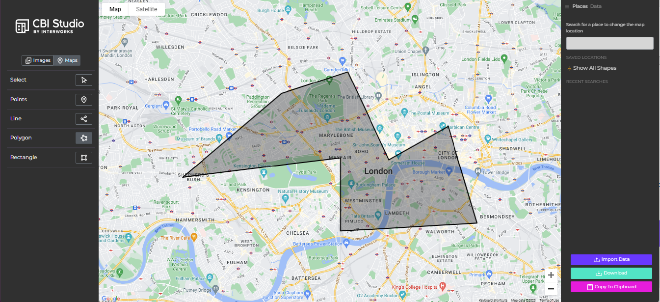

You can create a GeoJSON file simply using InterWorks’ free CBI Studio tool to demonstrate this structure.

Tableau supports connections to multiple types of spatial files from MapInfo tables to Esri File Geodatabases. The most common, however, is the .shp (“shape”) file.

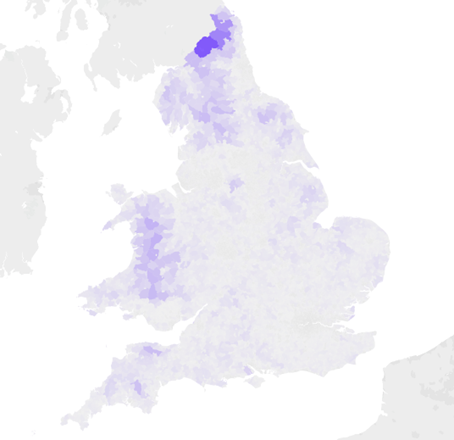

Recently, I worked on a Tableau and Curator demo using publicly available crime data from data.police.uk. Naturally, I wanted to show crime statistics to the prospective client, leveraging Tableau’s fantastic mapping functionality.

The database features a Lower Layer Super Output Area (LSOA) code, allowing us to roll-up types of crime to specific areas within the England. These divide the UK into around 35,000 areas by population. The shape files for these areas are available on several public websites including UK Data Service.

The problem: The shapes are very accurate.

“Why is that a problem? J’adore accuracy! The shapes will be perfect even when I zoom in on my house!”

Often, we face difficult compromises for great solutions.

Spatial Objects in Snowflake

Snowflake supports spatial data in multiple formats. For Tableau ingestion, we need to use the GEOGRAPHY data type, storing latitudes and longitudes in a GeoJSON array that Tableau can interpret. When ingesting .shp files into Snowflake, things can get complicated so read this blog by my colleague Chaitanya Joshi for more information.

But why use Snowflake over ingesting local .shp files into Tableau?

Lots of reasons. Firstly, why use Snowflake – or any data warehouse – at all?

- Centralisation: One version of the truth. Having business users connect to local files, or files on a shared drive/SaaS content management (like Box or SharePoint), can become an issue at scale, with data sources being hard to find and changeable by anyone easily.

- Governance and Stewardship: SQL allows us a fully flexible language to manage the cloud data warehouse at scale. We can control permissions to staging, transformation and production databases to expose only the perfectly curated data to end users for analysis.

- Performance and Scale: Snowflake offers best-of-breed performance by separating compute and storage, scaling compute resources seamlessly to provide consistently high performance.

Secondly, added treats! With Snowflake we get a fully featured engine for manipulating and transforming our spatial data. Some of these are available to apply in Tableau, but spatial functions can be costly to apply at runtime, so doing these ahead of time can be advantageous for performance. There are also additional functions that aren’t supported in Tableau. One such function is ST_SIMPLIFY, which typifies the benefit of a powerful data platform like Snowflake.

ST_SIMPLIFY

Remember polygons are dot-to-dot puzzles? These dots are stored in arrays within each cell. The visualisation tool unpacks these arrays and plots the coordinate pairs, in order, at the right scale, on a background map.

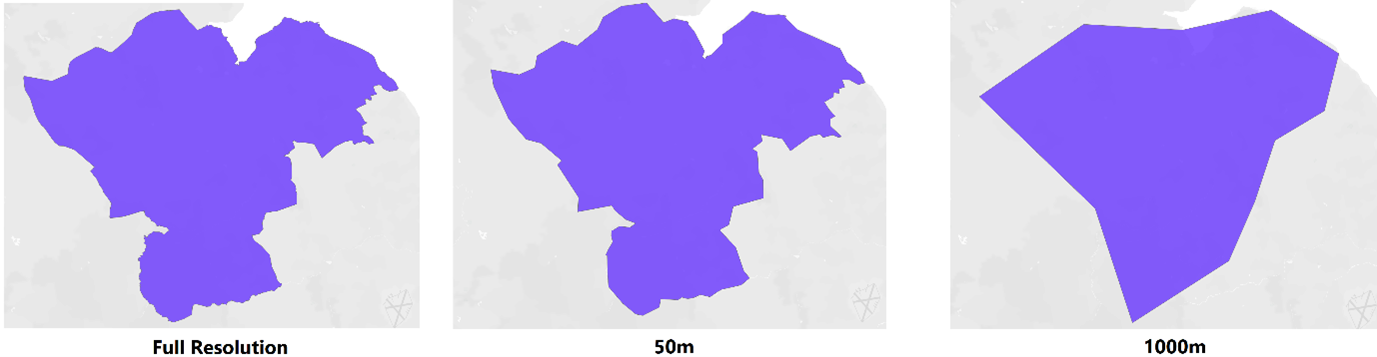

Using this function, we feed in the spatial object and a tolerance. We have a field – [Country] for example – containing the array defining each country around the world. These internationally recognised definitions can be huge to avoid regional disputes, perhaps accurate to within a meter and therefore containing 100k+ vertices within each array.

To simplify the shape to keep vertices that are only more than 20m apart, we write the following syntax in Snowflake:

ST_SIMPLIFY(Country, 20)

This removes vertices. The existing vertices in the array are the original vertices. It doesn’t average the locations to cater for this change. This will likely introduce more null values as you add more aggressive tolerance values. Some smaller shapes become invalid for a variety of reasons: self-intersections, having too few vertices, etc. We can tune this function to best fit our analytical requirements.

Summary

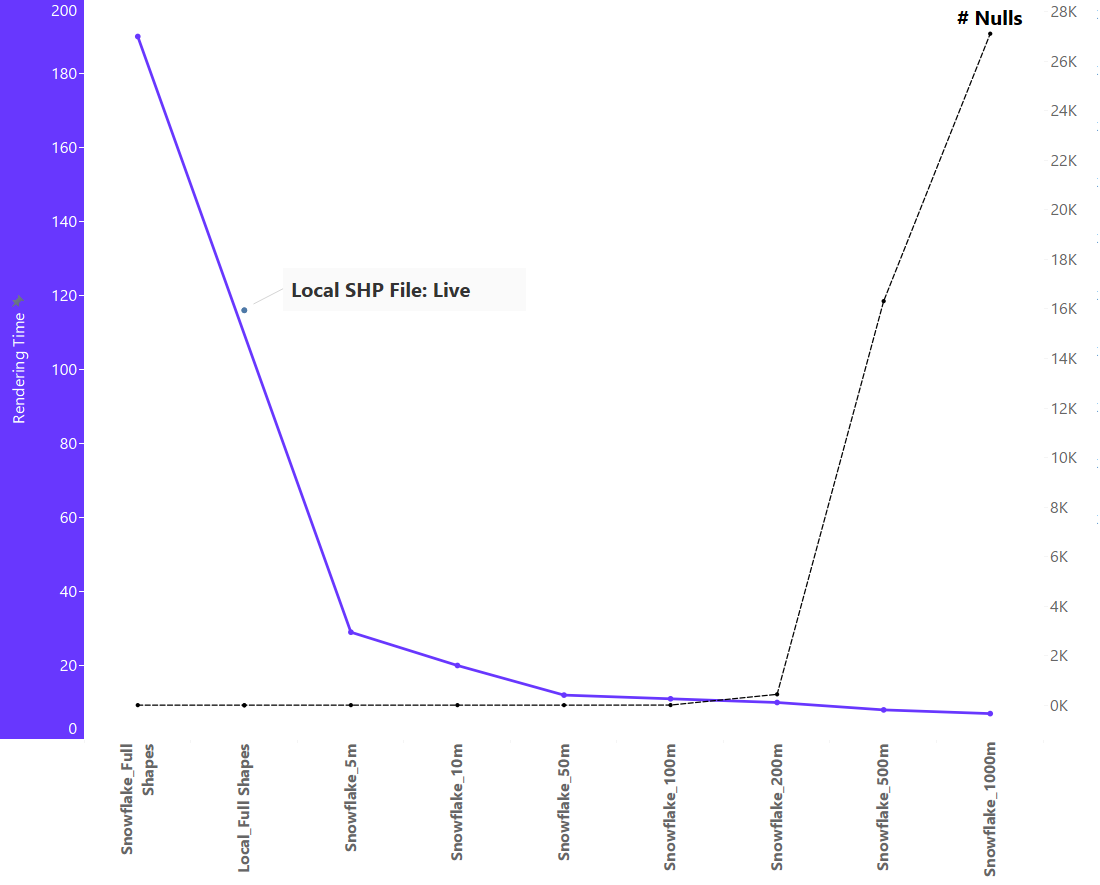

The purpose of using the ST_SIMPLIFY function is to optimise performance when querying shapes, reducing the number of vertices in the array stored in Snowflake and therefore reducing the size of the object and querying less data. This has a profound impact on performance as shown below – comparing various tolerances of the same field using Tableau.

Rendering time drops sharply – from 190s to 29s – by even applying a 5m tolerance. This 85% decrease will massively improve the experience for the user, and can be further optimised by extracting this smaller array into a local extract – as a .hyper – rendering in a second or two.

As we decrease the resolution further, we face additional compromises; mainly that shapes become visibly inaccurate (overly simplified) and null values increase drastically.

When working with spatial data, Snowflake is perfect for storage, transformation and tuning to cater for our varied functional requirements. For more information on how Snowflake can help your maps, or to dig deeper, get in touch!