What Is Data Virtualization?

Data virtualization is an approach to data integration that can be thought of as an alternative to the more common ETL/ELT + data warehouse based approach. With data virtualization, the source data is left in place and accessed on the fly as required by a client query. A data virtualization application creates an abstracted layer that brings together the different data sources and hides complexities associated with different data formats and storage locations.

It can be seen as a cheaper alternative to an ELT + Data warehouse approach. Its main disadvantage is that it only works on a small scale. It is not suitable for large data sets, large numbers of users or complex data transformations.

For more general info about the concept see this resource.

Who Are the Players?

A simple data virtualization solution can be implemented using a standard OLTP database such as Postgres or Oracle. There are dedicated data virtualization solutions available from Denodo, Tibco, Talend and others.

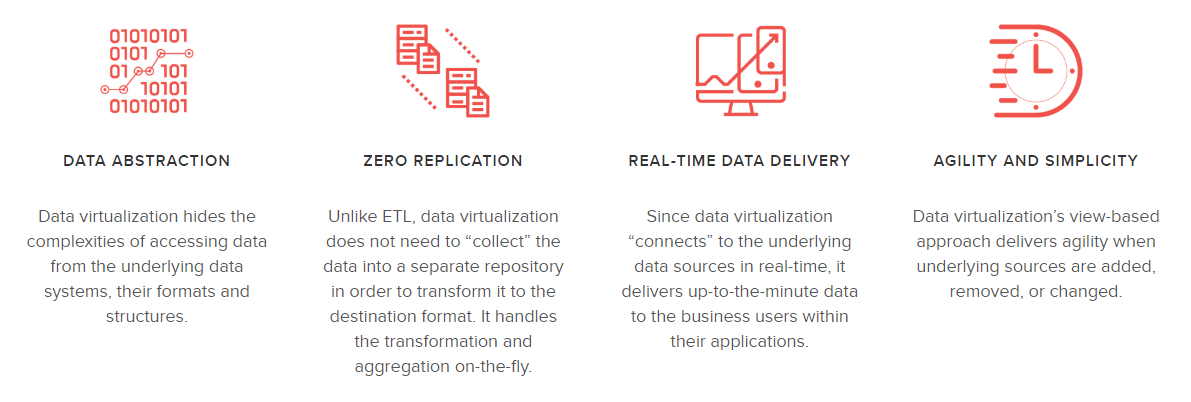

Denodo list the benefits of data virtualization characteristics in the diagram below:-

In response to the points they make:

Data Abstraction

Snowflake can abstract data, too. Inbuilt support for semi-structured data means we can used views to read things like JSON objects and return them as table-type objects that can then be accessed with simple SQL.

Zero Replication

This is the biggest weakness of data virtualization. Whenever data is queried, you’re relying on the compute power of whatever the source system happens to be. At peak times (such as near month end), the source system may be being heavily utilised for data entry, just as you also want to view a lot of dashboards. Modern dashboards can place heavy loads what can be quite old source systems (a single view of a single page of a Tableau dashboard might trigger 10-20 separate SQL queries). At best this means slow, uneven performance for the BI application. Worse, it can overload what could be mission critical systems like the ERP database at peak times. This risk and pain might still be worth it, if the only alternative were a large capital investment in hardware for a data warehouse. With snowflake of course you don’t need to do that. You just pay for the compute you need, as you need it.

Real-Time Data Delivery

This is a genuine strength of data virtualization. If the performance/reliability is not a concern, then querying the data sources directly will definitely give you up to minute data. The problem as per above is that this doesn’t scale. Snowflake by contrast can provide scalable near real-time access to streaming data using Snowpipe, or near real-time access to database data using a change data capture (CDC) tool. One of the leaders in CDC is HVR, purchased by Fivetran in 2023 and now integrated into their product.

Agility and Simplicity

A declarative, view based approach to business logic is definitely a good idea (I wrote a blog post on this subject). Having to do it all at query time to a source system means you’re quickly going to hit performance bottlenecks. In practice, this rules out anything but pretty basic transformations. When that happens, the need for complex transformations doesn’t go away. All it means is that the logic happens somewhere else. Often the output from data virtualization tools may just get downloaded as a data dump to a spreadsheet, so any notion of centralised control or single source of truth is lost.

By contrast, data stored in snowflake will already be accessible 10-100x faster than most OLTP data sources because of the hybrid/columnar structure of the snowflake data store. Where complex transformations are required, we can use materialized views or dynamic tables to further improve performance. For the most complex transformations that can’t easily be done in SQL, we can use stored procedures written in JavaScript or Python, or we can even use external calls to lambda functions. Snowflake can be simple, but it can also be advanced when you need it.