At InterWorks, we put an enormous amount of effort into translating complex technical concepts to make them easily understandable — and actionable — for the organisations we work with. It’s extraordinarily difficult to decide about a tool or vendor without understanding what it does and the value it may (or may not) have for your organisation. We pride ourselves on having helped hundreds of organisations confidently evaluate and implement complex technical solutions, safe in the knowledge that decisions have been made carefully, pragmatically and with expert support.

Fortunately for pre-sales and sales engineering teams across the globe, this is a never-ending job. As the data and analytics landscape continually evolves, new concepts appear, new acronyms must be de-mystified, and organisations must strive to stay ahead of it all if they want to make good decisions about tooling and technology. The topic for today’s de-mystification is the Data Catalog.

Where Might a Catalog Tool Be Useful?

Let’s imagine we work for a large organisation that’s been steadily and successfully implementing analytics to solve business problems, better inform decision-making and otherwise do good things with data. Consequently, a suite of tools has been introduced to handle everything that goes into making a data-driven organisation, from data warehousing and orchestration to data preparation, analysis, visualisation and AI (of course).

Along with this collection of tools comes a collection of objects, too. Databases, connections, platforms, pipelines, dashboards, reports: there’s a lot. As the organisation grows, it becomes more difficult to simply keep track of everything. Where do I go to answer data-driven questions? Which dashboard has the insights I need? Who’s responsible for the marketing data I need? Is this data even up to date?

What Do Catalog Tools Do?

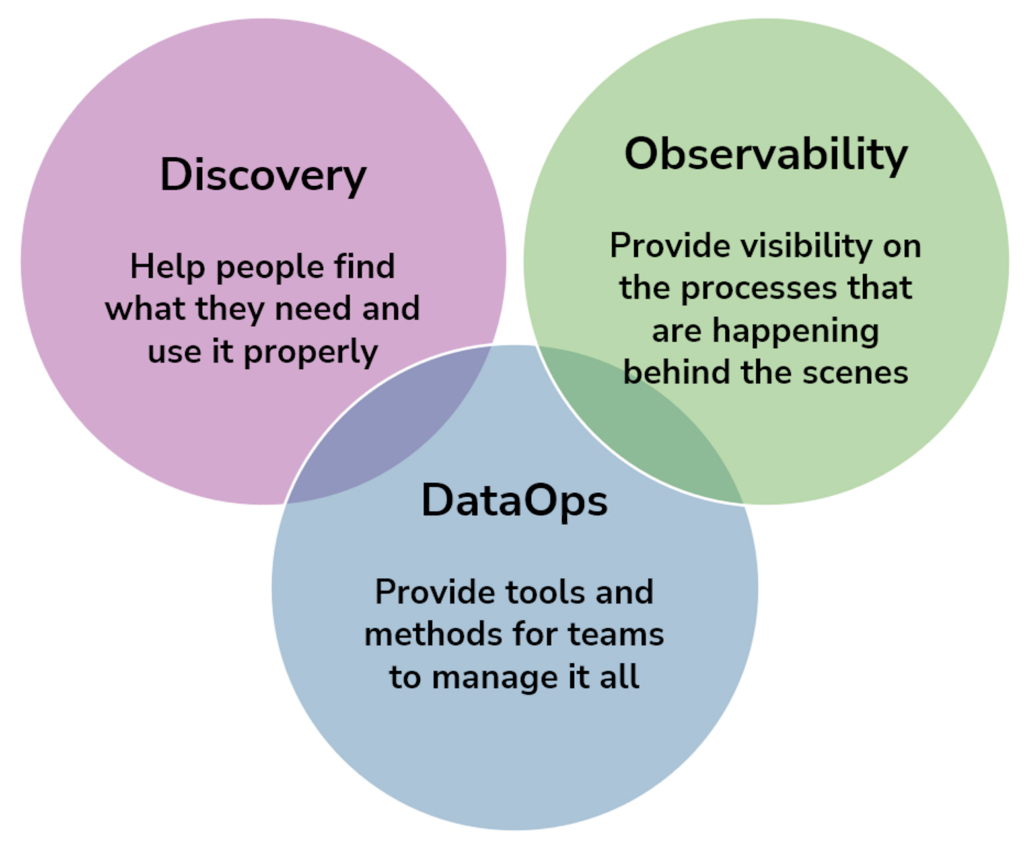

This is where catalog tools step in. In the simplest terms, catalog tools can connect to and interact with all these objects, collect data about them and present users with a powerful and intuitive interface to answer these kinds of questions. Vendors may have clever ways to describe this process like “activating your metadata” and “unlocking data team productivity,” but let’s try and explain it in plain language:

Above: A catalog tool provides a source of truth for all questions about your data ecosystem. Think of it as your inventory for all data assets, purposely designed to help individuals find, understand, and maximise the value of data.

Help People Find What They Need and Use It Properly

Vendors will call this “Discovery.” In simple terms, it means collecting metadata about objects and platforms (some vendors will refer to these as “Assets”) and making it available, so you can present the user with a fast and intuitive way to search it – often based on knowledge graph technology. Description, platform, owner, status, size, purpose – a huge range of metadata can be made available, which allows users to answer questions like:

- I think I’ve found a problem with the way we’re calculating margin in the sales forecast table. Who’s the owner of this data source so I can talk to them about it?

- I’m using adjusted margin in my sales forecast reporting, but I need to know exactly how it’s calculated. What does this business definition say about it?

- It doesn’t look like the operational database has been updated recently. Is it still certified for use, or should I be looking elsewhere?
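
To make the idea concrete, here is a minimal Python sketch of what a discovery lookup might boil down to. It is not how any particular vendor implements search (real tools often sit on a knowledge graph), and every asset name, owner and field below is a hypothetical example invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    """One catalogued object; every field here is illustrative metadata."""
    name: str
    platform: str
    owner: str
    certified: bool
    description: str = ""
    tags: list = field(default_factory=list)


# A tiny in-memory "catalog" (hypothetical assets and owners).
CATALOG = [
    Asset("sales_forecast", "warehouse", "priya@example.com", True,
          "Forecast table including adjusted margin", ["sales", "margin"]),
    Asset("operational_db", "postgres", "sam@example.com", False,
          "Operational database, certification withdrawn", ["operations"]),
    Asset("marketing_dashboard", "bi", "lee@example.com", True,
          "Campaign performance dashboard", ["marketing"]),
]


def search(catalog, term):
    """Return assets whose name, description or tags mention the term."""
    term = term.lower()
    return [
        a for a in catalog
        if term in a.name.lower()
        or term in a.description.lower()
        or any(term in t.lower() for t in a.tags)
    ]


def owner_of(catalog, name):
    """Look up the owner of a named asset, or None if it is not catalogued."""
    for a in catalog:
        if a.name == name:
            return a.owner
    return None
```

Calling `search(CATALOG, "margin")` finds the forecast table, `owner_of(CATALOG, "sales_forecast")` tells you who to talk to about it, and the `certified` flag answers the "should I be looking elsewhere?" question.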

Provide Visibility on the Processes That Are Happening Behind the Scenes

Vendors will call this “Observability,” which is essentially a more sophisticated approach to monitoring the processes and behaviours going on behind the scenes of your catalog. Observability features will focus on answering questions like:

- I’m monitoring the data ingestion pipeline that takes data from our CRM system and moves it into our analytics databases. Are the pipelines running now, and have there been any issues in the last 24 hours I need to know about?

- My pipeline is important because it creates the margin measure in our sales forecast table. Has the margin calculation been executed correctly?

- We run lots of tests to ensure that the data that moves into our analytics database is accurate. Are there any indications that the expected volume of data hasn’t been moved from source to target?
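
Two of these questions (is the data recent, and did the expected volume arrive?) reduce to simple checks. The sketch below shows one way they could be expressed in Python. The function names, the 1% tolerance and the 24-hour window are assumptions chosen for illustration, not defaults from any catalog product.

```python
from datetime import datetime, timedelta


def volume_ok(source_rows, target_rows, tolerance=0.01):
    """True if the target row count is within `tolerance` of the source count."""
    if source_rows == 0:
        return target_rows == 0
    return abs(source_rows - target_rows) / source_rows <= tolerance


def is_fresh(last_loaded, now, max_age=timedelta(hours=24)):
    """True if the last successful load is no older than `max_age`."""
    return now - last_loaded <= max_age


# Example: 1,000 rows left the CRM and 995 arrived, loaded 12 hours ago.
checked_at = datetime(2024, 1, 1, 12, 0)
print(volume_ok(1000, 995))                             # within the 1% tolerance
print(is_fresh(datetime(2024, 1, 1, 0, 0), checked_at))  # inside the 24-hour window
```

An observability feature would typically run checks like these on a schedule and raise an alert when one fails, rather than waiting for someone to ask.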

Provide Tools and Methods for Teams To Manage It All

These features often overlap with observability, and vendors might categorise them as “Engineering” or “DataOps” – both essentially referring to the practice of managing the flows that generate the data that’s subsequently made available in the catalog.

- When data pipelines are running, I need to coordinate and monitor automated steps that happen to test data, update metadata and update users.

- Users create new metadata, relate objects to each other in different ways and add new data sources. I need to monitor our entire data environment in real time to understand when changes happen.

- Changing a single field or column in a table may impact hundreds of related objects like dashboards, data sources and pipelines. I need to understand the scale of the impact by inspecting the relationships (referred to as “data lineage”).
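
The impact question in the last point is essentially a graph traversal. As a rough sketch, assuming lineage is stored as a simple mapping from each asset to the assets it feeds (the asset names below are hypothetical), a breadth-first walk lists everything downstream of a change:

```python
from collections import deque

# Hypothetical lineage edges: each key feeds the assets listed against it.
LINEAGE = {
    "crm.orders.amount": ["analytics.sales_forecast"],
    "analytics.sales_forecast": ["margin_pipeline", "sales_dashboard"],
    "margin_pipeline": ["finance_report"],
    "sales_dashboard": [],
    "finance_report": [],
}


def downstream(lineage, start):
    """Return every asset reachable from `start`, in breadth-first order."""
    seen = set()
    order = []
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in lineage.get(node, []):
            # Skip assets already visited so shared dependencies and
            # cycles do not cause repeats or an endless loop.
            if child not in seen and child != start:
                seen.add(child)
                order.append(child)
                queue.append(child)
    return order


impacted = downstream(LINEAGE, "crm.orders.amount")
print(len(impacted), "assets affected:", impacted)
```

Catalog tools usually present this as a visual lineage graph, but the underlying question ("what breaks if I change this column?") is the same reachability check.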

Meeting Users Where They Are

Many Catalog vendors understand that keeping users “in the flow” (i.e., able to complete their work and communication without frequently switching between applications) is important for productivity.

Embedded Components

Using patterns established in embedded analytics in general, Catalog tools often provide embeddable components that can be integrated in other analytics products like dashboards. These components can then deliver related info (warnings, certifications, generative AI content, etc.) about the product without the user needing to switch to the Catalog tool. Data.World’s “Hoots” feature allows you to surface this kind of contextual info where data consumers make decisions, directly in Power BI or Tableau.

Integrations

Similarly, Catalog tools usually offer integration capabilities that provide users with governance or engineering-related info in communication tools like Slack. Users can be notified about changes to data assets, successful workflow completion and other changes within the Catalog, all without leaving the application. This can work both ways – with Atlan, it’s possible to send a message in Slack to search for a particular business definition, with the answer being served up instantly by Atlan’s “Flow” integration feature.

Generative AI and LLMs in Catalog Tools

Arguably, Catalog tools gain more advantage from the application of generative AI than many other data and analytics products, because a huge amount of the value provided by Catalog tools is reliant on creating metadata. Some of this metadata can be automatically generated, but more subjective and human-readable information (what does this table contain, what does this dimension represent, etc.) needs to be added too – and that can take a long time.

Generative AI and LLMs can trivialise this process, and being able to largely automate the creation and management of this metadata using AI within the Catalog tool can significantly reduce the time-to-value. Some tools, like Atlan, extend this application to allow you to create entire data sources using AI. For example, you may need a representative dataset to train an ML model on – which AI can create for you, instantly.

Almost inevitably, the other use of generative AI you’ll see in Catalog tools is to create queries from natural language searches — either by prediction and suggestion to build a query using known metadata or synonyms, or with NLP-style prompts. Queries might be suggested to the user, input via search or constructed within a dedicated query interface, like Atlan’s “Insights” feature. This isn’t a core part of a Catalog tool in general, but it does blur the lines between Catalog tools and traditional BI tools, which might be of interest if you’re considering how the use of BI tools may intersect with any new Catalog tool your organisation procures.

In Conclusion

- Time-to-value for Catalog implementations can be much longer than for analytics or BI tools, but native generative AI features can shorten it significantly if you plan effectively and provide experienced resources for the implementation.

- If you’re in the market for a Catalog tool, carefully consider the profile of your organisation against the various use cases that Catalog tools support. Ideally, you’d want to be realising value across the entire breadth of Discovery, Observability and Engineering features, but it may be that a single feature could still have transformative value.

- Some Catalog products are “walled gardens” to the extent that they only comprehensively catalog objects from tools that the vendor itself provides, like Tableau Data Management Catalog (which catalogs Tableau assets), or Google’s Dataplex Catalog (which catalogs BigQuery objects and related Google Cloud services).

- Most Enterprise Catalog tools are agnostic to the extent that connectors are provided to capture data from thousands of databases (Snowflake, BigQuery, Databricks), tools (dbt, Airtable, Airflow) and platforms (Tableau, Power BI, Slack).

- You’ll get more value from a catalog tool the more objects you have. If you’re a small organisation with a single database and basic reporting requirements, a data catalog is unlikely to make much impact. But if you’re a large organisation with a proliferation of tools and objects, catalog tools can be transformative.